Hyper-V over SMB – Performance considerations

1. Introduction

If you follow this blog, you probably already had a chance to review the “Hyper-V over SMB” overview talk that I delivered at TechEd 2012 and other conferences. I am now working on a new version of that talk that still covers the basics, but adds brand new segments focused on end-to-end performance and detailed sample configurations. This post looks at this new end-to-end performance portion.

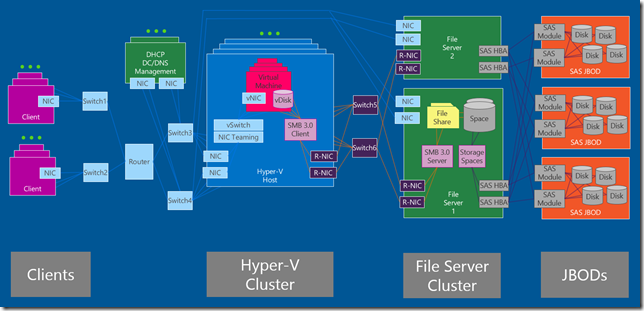

2. Typical Hyper-V over SMB configuration

End-to-end performance starts by drawing an end-to-end configuration. The diagram below shows a typical Hyper-V over SMB configuration including:

- Clients that access virtual machines

- Nodes in a Hyper-V Cluster

- Nodes in a File Server Cluster

- SAS JBODs acting as shared storage for the File Server Cluster

The main highlights of the diagram include the redundancy in all layers and the different types of network connecting the layers.

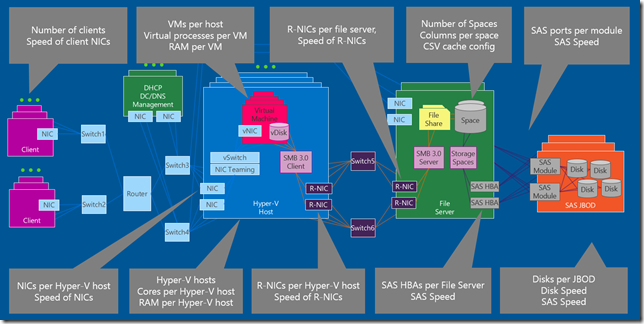

3. Performance considerations

With the above configuration in mind, you can then start to consider the many different options at each layer that can affect the end-to-end performance of the solution. The diagram below highlights a few of the items, in the different layers, that would have a significant impact.

These items include, in each layer:

- Clients
  - Number of clients
  - Speed of the client NICs
- Virtual Machines
  - VMs per host
  - Virtual processors and RAM per VM
- Hyper-V Hosts
  - Number of Hyper-V hosts
  - Cores and RAM per Hyper-V host
  - NICs per Hyper-V host (connecting to clients) and the speed of those NICs
  - RDMA NICs (R-NICs) per Hyper-V host (connecting to file servers) and the speed of those NICs
- File Servers
  - Number of File Servers (typically 2)
  - RAM per File Server, plus how much is used for CSV caching
  - Storage Spaces configuration, including number of spaces, resiliency settings and number of columns per space
  - RDMA NICs (R-NICs) per File Server (connecting to Hyper-V hosts) and the speed of those NICs
  - SAS HBAs per File Server (connecting to the JBODs) and speed of those HBAs
- JBODs
  - SAS ports per module and the speed of those ports
  - Disks per JBOD, plus the speed of the disks and of their SAS connections

It’s also important to note that the goal is not to achieve the highest performance possible, but to find a balanced configuration that delivers the performance required by the workload at the best possible cost.

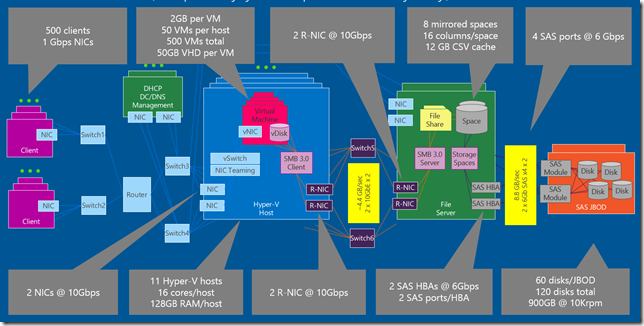

4. Sample configuration

To make things a bit more concrete, you can look at a sample VDI workload. Suppose you need to create a solution to host 500 VDI VMs. Here is an outline of the thought process you would have to go through:

- Workload, disks, JBODs, hosts
  - Start with the stated workload requirements: 500 VDI VMs, each with 2GB RAM, 1 virtual processor, ~50GB of storage, ~30 IOPS and ~64KB per IO
  - Next, select the type of disk to use: 900GB HDD at 10,000 rpm, around 140 IOPS
  - Also, the type of JBOD to use: SAS JBOD with dual SAS modules, two 4-lane 6Gbps ports per module, up to 60 disks per JBOD
  - Finally, this is the agreed-upon spec for the Hyper-V host: 16 cores, 128GB RAM
- Storage
  - Number of disks required based on IOPS: 30 * 500 / 140 = ~107 disks
  - Number of disks required based on capacity: 50GB * 2 * 500 / 900GB = ~56 disks
  - Some additional capacity is required for snapshots and backups
  - IOPS is the stricter requirement, so we need about 107 disks to fulfill both the IOPS and capacity requirements
  - We can then conclude we need 2 JBODs with 60 disks each (that would give us 120 disks, including some spares)
- Hyper-V hosts
  - 128GB / 2GB per VM = 64, so plan for ~50 VMs/host, leaving some RAM for the host
  - 50 VMs * 1 virtual processor / 16 cores = ~3:1 ratio between virtual and physical processors
  - 500 VMs / 50 VMs per host = 10 hosts. We could use 11 hosts, filling all the requirements plus one as a spare
- Networking
  - 500 VMs * 30 IOPS * 64KB = ~937 MBps required. This works well with a single 10GbE NIC, which can deliver about 1100 MBps. Use 2 for fault tolerance.
  - A single 4-lane SAS port at 6Gbps delivers about 2200 MBps. Use 2 for fault tolerance. You could actually use 3Gbps SAS HBAs here if you wanted.
- File Server
  - 500 VMs * 30 IOPS = 15,000 IOPS. A single file server can deliver that without any problem. Use 2 for fault tolerance.
  - RAM = 64GB, a good size that allows for some CSV caching (up to 20% of RAM)
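The arithmetic in the outline above is easy to repeat for other workloads if you put it in a small script. The following is only a rough sketch in Python: the constants come straight from the sample, while the function name `size_solution` and the choice to round every hardware count up are my own assumptions, not part of any tool.

```python
import math

# Figures taken from the sample outline above.
VMS = 500
IOPS_PER_VM = 30
IO_SIZE_KB = 64
GB_PER_VM = 50
CAPACITY_FACTOR = 2      # the "* 2" used in the capacity line of the outline
DISK_GB = 900
DISK_IOPS = 140
DISKS_PER_JBOD = 60
VMS_PER_HOST = 50        # planning figure from the outline (leaves RAM for the host)
CORES_PER_HOST = 16
VCPUS_PER_VM = 1


def size_solution():
    """Repeat the outline's arithmetic, rounding hardware counts up."""
    disks_for_iops = math.ceil(VMS * IOPS_PER_VM / DISK_IOPS)
    disks_for_capacity = math.ceil(VMS * GB_PER_VM * CAPACITY_FACTOR / DISK_GB)
    # The stricter of the two requirements decides how many disks to buy.
    disks = max(disks_for_iops, disks_for_capacity)
    return {
        "disks_for_iops": disks_for_iops,
        "disks_for_capacity": disks_for_capacity,
        "jbods": math.ceil(disks / DISKS_PER_JBOD),
        "hosts": math.ceil(VMS / VMS_PER_HOST),  # spare host not included
        "vcpus_per_core": VMS_PER_HOST * VCPUS_PER_VM / CORES_PER_HOST,
        "throughput_mbps": VMS * IOPS_PER_VM * IO_SIZE_KB / 1024,
    }


if __name__ == "__main__":
    for name, value in size_solution().items():
        print(f"{name}: {value}")
```

Because the sketch rounds up, it reports 108 disks for IOPS where the outline quotes ~107. Either way, two 60-disk JBODs cover the requirement with room for spares.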

Please note that this is simply an example, since your specific workload requirements may vary. There’s no general industry agreement on exactly what a VDI workload looks like, which kind of disks should be used with it or how much RAM would work best for the Hyper-V hosts in this scenario. So, take this example with a grain of salt :-)

Obviously you could have decided to go with a different type of disk, JBOD or host. In general, higher-end equipment will handle more load, but will be more expensive. For disks, deciding factors will include price, performance, capacity and endurance. Comparing SSDs and HDDs, for instance, is an interesting exercise and that equation changes constantly as new models become available and prices fluctuate. You might need to repeat the above exercise a few times with different options to find the ideal solution for your specific workload. You might want to calculate your cost per VM for each specific iteration.
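If you do repeat the exercise with different options, a tiny helper can keep the cost-per-VM comparison honest. The sketch below is purely illustrative: the function `cost_per_vm` is a hypothetical name, the quantities are the ones from the sample above (120 disks, 2 JBODs, 11 hosts, 2 file servers), and the unit prices are made-up placeholders in arbitrary units, not real prices.

```python
def cost_per_vm(quantities, unit_prices, vm_count):
    """Total hardware cost divided by the number of VMs it hosts."""
    total = sum(quantities[item] * unit_prices[item] for item in quantities)
    return total / vm_count


# Quantities from the sample configuration above.
quantities = {"disk": 120, "jbod": 2, "hyperv_host": 11, "file_server": 2}

# PLACEHOLDER prices in arbitrary units, NOT real prices.
# Substitute actual quotes for each option you evaluate.
placeholder_prices = {
    "disk": 1.0,
    "jbod": 10.0,
    "hyperv_host": 20.0,
    "file_server": 20.0,
}

if __name__ == "__main__":
    result = cost_per_vm(quantities, placeholder_prices, vm_count=500)
    print(f"Cost per VM (arbitrary units): {result}")
```

Running the same function once per candidate configuration gives you one number per iteration to compare, alongside the performance each option delivers.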

Assuming you did all that and liked the results, let’s draw it out:

Now it’s up to you to work out the specific details of your own workload and hardware options.

5. Configuration Variations

It’s also important to notice that there are several potential configuration variations for the Hyper-V over SMB scenario, including:

- Using regular Ethernet NICs instead of RDMA NICs between the Hyper-V hosts and the File Servers

- Using a third-party SMB 3.0 NAS instead of a Windows File Server

- Using Fibre Channel or iSCSI instead of SAS, along with a traditional SAN instead of JBODs and Storage Spaces

6. Speeds and feeds

In order to make some of the calculations, you might need to understand the maximum theoretical throughput of the interfaces involved. For instance, it helps to know that a 10GbE NIC cannot deliver more than 1.1 GBytes per second or that a single SAS HBA sitting on an 8-lane PCIe Gen2 slot cannot deliver more than 3.4 GBytes per second. Here are some tables to help out with that portion:

| NIC | Throughput |
| --- | --- |
| 1Gb Ethernet | ~0.1 GB/sec |
| 10Gb Ethernet | ~1.1 GB/sec |
| 40Gb Ethernet | ~4.5 GB/sec |
| 32Gb InfiniBand (QDR) | ~3.8 GB/sec |
| 56Gb InfiniBand (FDR) | ~6.5 GB/sec |

| HBA | Throughput |
| --- | --- |
| 3Gb SAS x4 | ~1.1 GB/sec |
| 6Gb SAS x4 | ~2.2 GB/sec |
| 4Gb FC | ~0.4 GB/sec |
| 8Gb FC | ~0.8 GB/sec |
| 16Gb FC | ~1.5 GB/sec |

| Bus Slot | Throughput |
| --- | --- |
| PCIe Gen2 x4 | ~1.7 GB/sec |
| PCIe Gen2 x8 | ~3.4 GB/sec |
| PCIe Gen2 x16 | ~6.8 GB/sec |
| PCIe Gen3 x4 | ~3.3 GB/sec |
| PCIe Gen3 x8 | ~6.7 GB/sec |
| PCIe Gen3 x16 | ~13.5 GB/sec |

| Intel QPI | Throughput |
| --- | --- |
| 4.8 GT/s | ~9.8 GB/sec |
| 5.86 GT/s | ~12.0 GB/sec |
| 6.4 GT/s | ~13.0 GB/sec |
| 7.2 GT/s | ~14.7 GB/sec |
| 8.0 GT/s | ~16.4 GB/sec |

| Memory | Throughput |
| --- | --- |
| DDR2-400 (PC2-3200) | ~3.4 GB/sec |
| DDR2-667 (PC2-5300) | ~5.7 GB/sec |
| DDR2-1066 (PC2-8500) | ~9.1 GB/sec |
| DDR3-800 (PC3-6400) | ~6.8 GB/sec |
| DDR3-1333 (PC3-10600) | ~11.4 GB/sec |
| DDR3-1600 (PC3-12800) | ~13.7 GB/sec |
| DDR3-2133 (PC3-17000) | ~18.3 GB/sec |

Also, here is some fine print on those tables:

- Only a few common configurations listed.

- All numbers are rough approximations.

- Actual throughput in real life will be lower than these theoretical maximums.

- Numbers provided are for one-way traffic only (you should double for full duplex).

- Numbers are for one interface and one port only.

- Numbers use base 10 (1 GB/sec = 1,000,000,000 bytes per second)
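One way to put these tables to work is to chain the components on a data path and look for the slowest stage. Here is a minimal sketch of that idea in Python. The dictionary holds a handful of values copied from the tables above; the function name `path_ceiling` and the example HBA layout are hypothetical, chosen only to illustrate the point about a SAS HBA sitting on a PCIe Gen2 x8 slot.

```python
# Approximate one-way maximums in GB/sec, copied from the tables above.
THROUGHPUT_GB_PER_SEC = {
    "10Gb Ethernet": 1.1,
    "56Gb InfiniBand (FDR)": 6.5,
    "6Gb SAS x4": 2.2,
    "PCIe Gen2 x8": 3.4,
    "PCIe Gen3 x8": 6.7,
}


def path_ceiling(components):
    """Return (name, GB/sec) for the slowest stage in a chain.

    components is a list of (table entry name, number of ports or slots).
    The table values are per port, so each stage is scaled by its count.
    """
    stages = [
        (name, THROUGHPUT_GB_PER_SEC[name] * count)
        for name, count in components
    ]
    return min(stages, key=lambda stage: stage[1])


# Hypothetical HBA: two 6Gb SAS x4 ports on a single PCIe Gen2 x8 slot.
hba = [("6Gb SAS x4", 2), ("PCIe Gen2 x8", 1)]

if __name__ == "__main__":
    name, ceiling = path_ceiling(hba)
    print(f"Limited by {name} at ~{ceiling} GB/sec")
```

In this example the two SAS ports could move about 4.4 GB/sec between them, but the slot caps the card at about 3.4 GB/sec, so the slot is the stage to look at first. As the fine print says, real-life numbers will be lower than these theoretical maximums.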

7. Conclusion

I’m still working out the details of this new Hyper-V over SMB presentation, but this post summarizes the portion related to end-to-end performance.

I plan to deliver this talk to an internal Microsoft audience this week and also during the MVP Summit later this month. I am also considering submissions for MMS 2013 and TechEd 2013.

P.S.: You can get a preview of this portion of the talk by watching this recent TechNet Radio show I recorded with Bob Hunt: Hyper-V over SMB 3.0 Performance Considerations.

P.P.S: This content was delivered as part of my TechEd 2013 North America talk: Understanding the Hyper-V over SMB Scenario, Configurations, and End-to-End Performance