Windows Server 2012 and SMB 3.0 demos at TechEd showcase File Server with multiple storage configurations and technologies

1. Introduction

We recently had our TechEd North America event and all session slides and recordings are now publicly available (links are provided below). We’re also getting ready to deliver our TechEd Europe talks this week. With hundreds of talks to attend and/or review, it will probably be a few weeks or maybe months before you can watch them all. That’s why I wanted to call your attention to a few sessions if you are interested specifically in the new SMB 3.0 file server in Windows Server 2012.

My focus here is on a set of new capabilities in Windows Server 2012 which work great with a new crop of server hardware, like the new Cluster-in-a-Box (CiB) systems, the latest server CPUs, PCIe Gen3 slots, RDMA network interfaces, Clustered RAID controllers, SAS HBAs, shared SAS JBODs and enterprise-class SSDs, just to name a few. Not that you always need to use every single one of those with every single File Server configuration, but they do make for interesting head-turning solutions.

2. Configurations

In this blog post, I will describe seven of the demo configurations (used either by session demos or partner booth demos) that were especially interesting at the event. I want to apologize in advance if I overlooked a specific session or a partner demo, which I am bound to do, since there was so much goodness in the event. These are also not listed in any particular order.

One of the most impressive characteristics of this set of demos is the variety of technologies used by the partners. We see different types of storage solutions (Non-clustered directly-attached storage, Clustered Storage Spaces, Clustered RAID, FC SAN), different types of networking (traditional Ethernet, Ethernet with RDMA, InfiniBand), different types of disk (performance HDDs, capacity HDDs, Enterprise SSDs, custom Flash), different enclosures (Cluster-in-a-Box in different sizes, discrete servers) and different file server configurations (Non-clustered, Classic Clusters, Scale-out Clusters).

Here is the list of configurations described in this blog post:

- The Quanta CiB

- The Wistron CiB

- The HP CiB

- The Violin Memory CiB

- The SuperMicro/Mellanox Setup

- The Chelsio Setup

- The X-IO Setup

Below are the details on each of the configurations, with links to the TechEd sessions, partner press releases and a few screenshots taken directly from the session recordings. Please note that the pictures here are in reduced size to fit the blog format, but you can get the full PowerPoint decks and high-quality video recordings from the links provided.

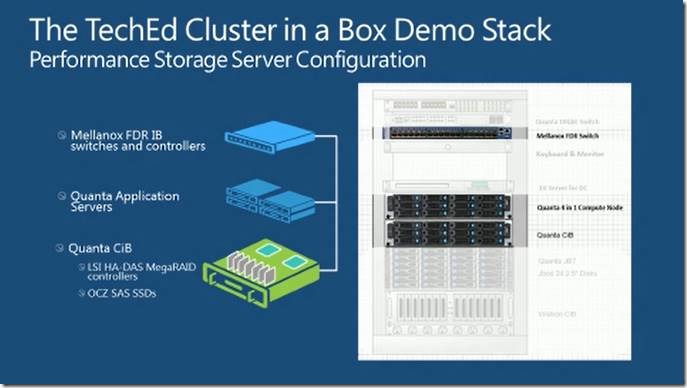

2.1. The Quanta CiB

- Server: Quanta CiB node, Romley motherboard, dual Intel CPUs

- Storage: LSI HA-DAS, OCZ Talos 2 R Enterprise SSDs

- Networking: Mellanox 54Gbps InfiniBand FDR NICs, Mellanox InfiniBand FDR Switch

- File Server configuration: Clustered File Server, Classic

- Showcased at: Session VIR306 - Hyper-V over SMB: Remote File Storage Support in Windows Server 2012 Hyper-V (demo starts at 29:30)

- Showcased at: Session WSV310 - Windows Server 2012: Cluster-in-a-Box, RDMA, and More (mentioned at 22:00)

- Showcased at: Session WSV314 - Windows Server 2012 NIC Teaming and SMB Multichannel Solutions (demo starts at 1:02:00)

- Showcased at: Partner Pavilion

- Partner Press Release: LSI Now Sampling to Server Manufacturers High-Availability Direct-Attached Storage Solutions for Windows Server Platforms

Figure 2.1a: WSV310 describes the Quanta CiB setup in the Technical Learning Center

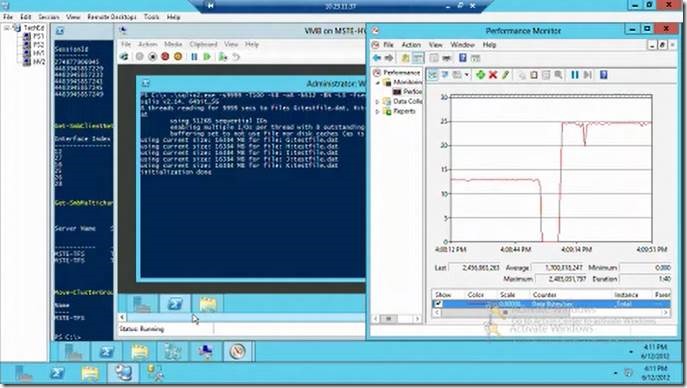

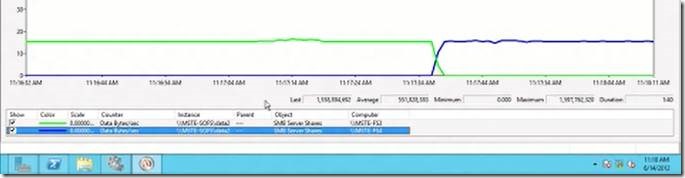

Figure 2.1b: VIR306 shows SMB Transparent Failover happens in just a few seconds with a Classic File Server cluster, while a VM is running a busy workload

Figure 2.1c: WSV314 shows SMB Multichannel network fault tolerance with dual RDMA ports. A single 54Gbps port can handle 2.5 Gbytes/sec just fine.

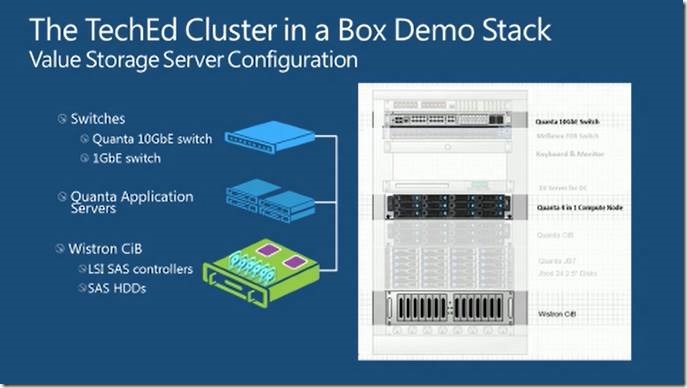
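To put the Figure 2.1c numbers in perspective, here is a quick back-of-the-envelope check in Python. It is only a sketch: it assumes the 54Gbps figure is the usable data rate of one port and ignores protocol overhead, and the constant and function names are mine, not from the session.

```python
# Rough check: how much of one 54Gbps port does 2.5 Gbytes/sec consume?
# Assumption: 54 Gbps is the usable data rate; protocol overhead is ignored.
LINK_GBPS = 54          # gigabits per second, as quoted for the FDR NICs
OBSERVED_GBYTES = 2.5   # gigabytes per second, as quoted in Figure 2.1c


def gbps_to_gbytes(gbps: float) -> float:
    """Convert gigabits/sec to gigabytes/sec (8 bits per byte)."""
    return gbps / 8


link_gbytes = gbps_to_gbytes(LINK_GBPS)
utilization = OBSERVED_GBYTES / link_gbytes

print(f"Link capacity: {link_gbytes:.2f} Gbytes/sec")
print(f"Utilization at {OBSERVED_GBYTES} Gbytes/sec: {utilization:.0%}")
```

Under these assumptions the workload uses a bit over a third of a single port, which is consistent with one port carrying the full load after the other one fails.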

2.2. The Wistron CiB

- Server: Wistron CiB node, Romley motherboard, dual Intel CPUs

- Storage: LSI SAS HBA, 24 15Krpm Fujitsu performance HDDs

- Networking: Intel X-520 dual-ported 10GbE NICs

- File Server configuration: Clustered File Server, Scale-Out

- Showcased at: Session WSV310 - Windows Server 2012: Cluster-in-a-Box, RDMA, and More (mentioned at 24:05)

- Showcased at: Session WSV334 - Windows Server 2012 File and Storage Services Management (demo starts at 13:00) (TechEd North America only)

- Showcased at: Session WSV410 - Continuously Available File Server: Under the Hood (demo starts at 46:00) (TechEd North America only)

- Showcased at: Session WSV04-LNC - Windows Server 2012 Storage for your Private Cloud (TechEd Europe only)

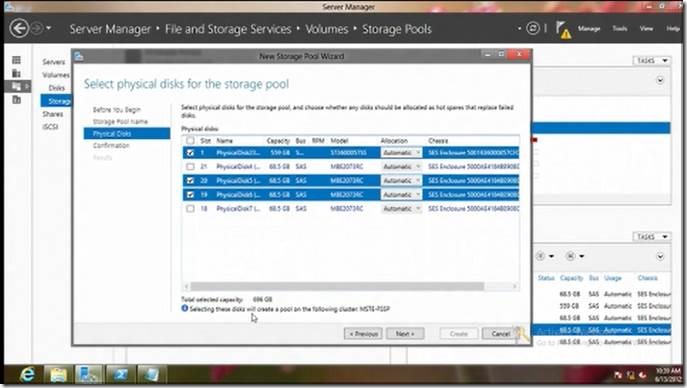

Figure 2.2a: WSV310 describes the Wistron CiB setup in the Technical Learning Center

Figure 2.2b: WSV334 shows how to use Server Manager to create a pool using Storage Spaces

Figure 2.2c: WSV410 shows a client moving between cluster nodes in less than a second with a Scale-Out File Server configuration.

2.3. The HP CiB

- Server: HP X5000 G2 CiB node, dual Intel CPUs

- Storage: HP Cascade controller, 36 SFF performance HDDs or 8 LFF capacity HDDs

- Networking: 10GbE NIC

- File Server configuration: Clustered File Server

- Showcased at: Session WSV303 - Windows Server 2012 High-Performance, Highly-Available Storage Using SMB (mentioned at 47:20) (TechEd North America only)

- Showcased at: Session WSV310 - Windows Server 2012: Cluster-in-a-Box, RDMA, and More (mentioned at 18:16)

- Showcased at: Partner Pavilion

2.4. The Violin Memory CiB

- Server: Violin Memory CiB node, Nehalem motherboard, dual Intel CPUs

- Storage: Custom all-flash storage. SLC flash in 256GB units. 64 total units: 48 data, 12 parity, 4 hot spares.

- Networking: Mellanox 54Gbps InfiniBand FDR NICs, Mellanox InfiniBand FDR Switch

- File Server configuration: Clustered File Server, Classic

- Showcased at: Session WSV303 - Windows Server 2012 High-Performance, Highly-Available Storage Using SMB (demo starts at 19:45) (TechEd North America only)

- Showcased at: Session WSV330 - How to Increase SQL Availability and Performance Using Window Server 2012 SMB 3.0 Solutions (demo starts at 48:45) (TechEd North America only)

- Showcased in Channel 9 video: Continuous Availability in Windows Server 2012 with Gene Chellis & Claus Joergensen (demo starts at 08:00)

- Showcased at: Partner Pavilion

- Partner Press Release: Violin Memory Showcases the World’s First Microsoft Windows All-Flash Cluster-in-a-Box Storage Platform

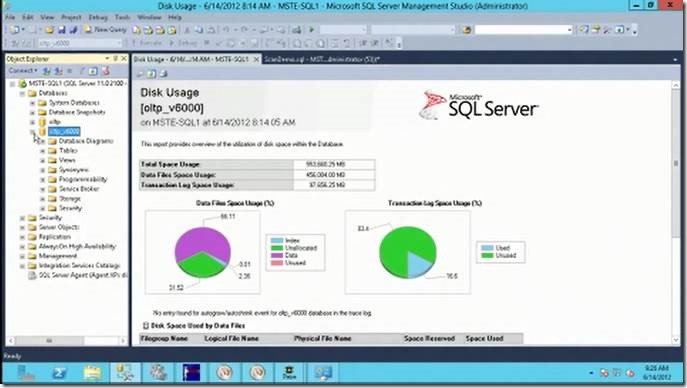

Figure 2.4a: WSV303 shows the SQL Server configuration using the Violin Memory CiB over SMB 3.0

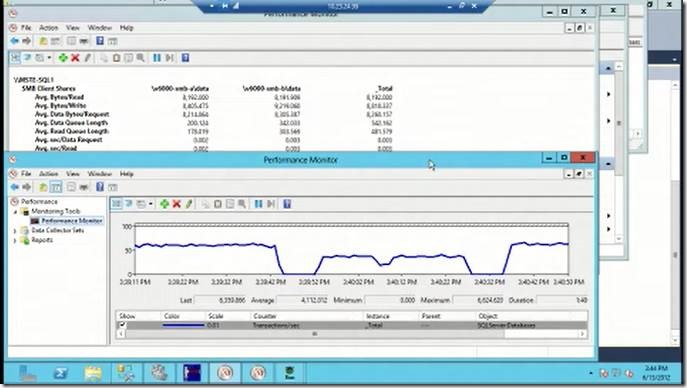

Figure 2.4b: WSV330 shows SQL Server over SMB switching between 10GbE and InfiniBand with a running workload to achieve over 4.5Gbytes/sec

Figure 2.4c: WSV330 shows a SQL Server OLTP workload at ~5K SQL transactions/sec (~200K IOPS) surviving a file server node maintenance scenario.

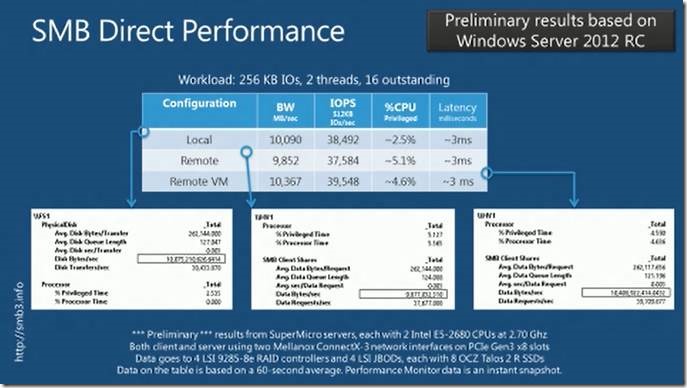
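The storage layout and the OLTP numbers quoted for this configuration lend themselves to some simple arithmetic. The Python sketch below only restates the figures given above (256GB units, the 48/12/4 split, ~5K transactions/sec, ~200K IOPS). Treating the data units as usable capacity is my simplification, since it ignores formatting and file system overhead, and the variable names are illustrative.

```python
# Flash layout quoted above: 64 units of 256GB (48 data, 12 parity, 4 hot spares).
UNIT_GB = 256
DATA_UNITS, PARITY_UNITS, SPARE_UNITS = 48, 12, 4

total_units = DATA_UNITS + PARITY_UNITS + SPARE_UNITS
raw_gb = total_units * UNIT_GB            # all flash in the box
data_gb = DATA_UNITS * UNIT_GB            # assumption: data units ~ usable capacity
data_fraction = DATA_UNITS / total_units  # share of units that hold data

# OLTP demo figures quoted in Figure 2.4c (both are approximate).
TRANSACTIONS_PER_SEC = 5_000
IOPS = 200_000
ios_per_transaction = IOPS / TRANSACTIONS_PER_SEC

print(f"Units: {total_units}, raw: {raw_gb} GB, data: {data_gb} GB ({data_fraction:.0%})")
print(f"Roughly {ios_per_transaction:.0f} I/Os per SQL transaction")
```

In other words, three quarters of the flash units hold data, and each SQL transaction in the demo drives on the order of 40 I/Os to the file server.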

2.5. The SuperMicro/Mellanox Setup

- Server: SuperMicro Server with a Romley motherboard, dual Intel CPUs

- Storage: 4 LSI MegaRAID controllers, 4 SuperMicro JBODs, OCZ Talos 2 R Enterprise SSDs

- Networking: Mellanox 54Gbps InfiniBand NICs

- File Server configuration: Non-clustered File Server

- Showcased at: Session WSV310 - Windows Server 2012: Cluster-in-a-Box, RDMA, and More (demo starts at 39:45)

- Showcased at: Partner Pavilion

- Partner Press Release: Mellanox FDR 56Gb/s InfiniBand Achieves Record Performance in Virtualization Running Windows Server 2012 Hyper-V

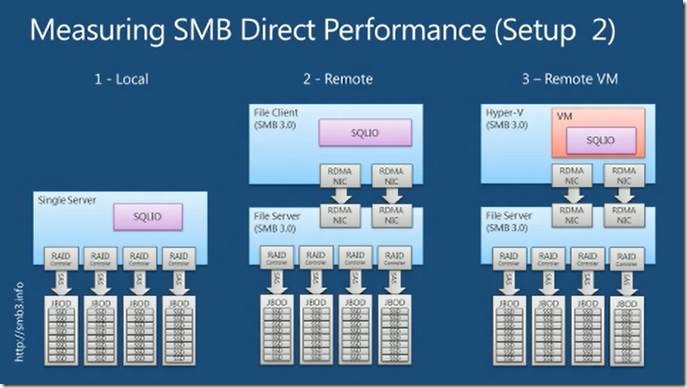

Figure 2.5a: WSV310 describes the setup, showing that we have numbers for local, remote and remote VM performance.

Figure 2.5b: WSV310 compares results from the different runs, with a single remote VM achieving over 10Gbytes/sec throughput.

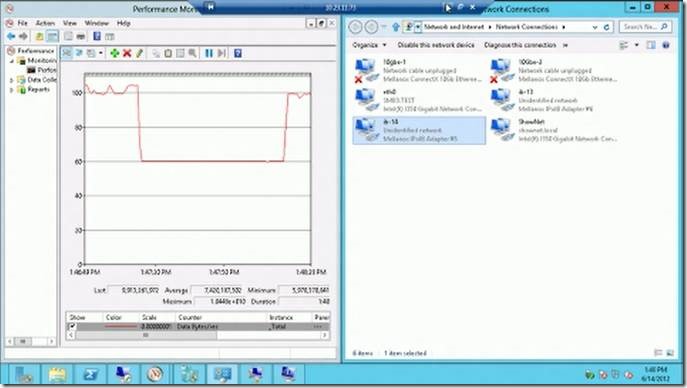

Figure 2.5c: WSV310 shows how SMB 3.0 survives the loss of one of the InfiniBand NICs (dropping to 6Gbytes/sec) and picks right back up to 10Gbytes/sec when the NIC is back.

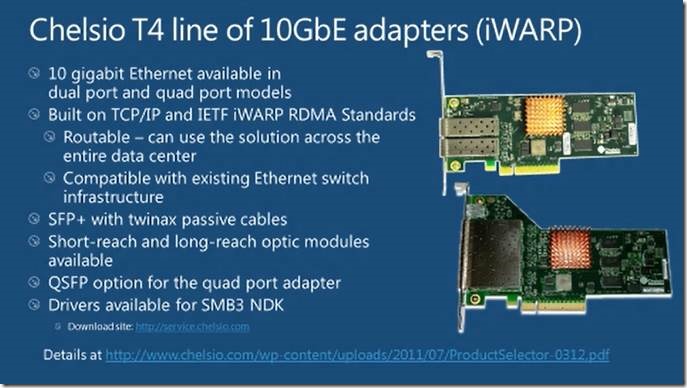

2.6. The Chelsio Setup

- Server: Romley motherboard, dual Intel CPUs

- Storage: 3 PCIe SSDs

- Networking: Quad-port 10GbE Chelsio T4 iWARP NIC

- File Server configuration: Non-clustered File Server

- Showcased at: Session WSV310 - Windows Server 2012: Cluster-in-a-Box, RDMA, and More (mentioned at 38:55)

- Showcased at: Partner Pavilion

- Partner Press Release: Chelsio Demonstrates Three Gigabyte Per Second Performance With SMB Direct Over Ethernet on Windows Server 2012

- Partner Technical Brief: Delivering superior performance with SMB Direct over Ethernet on Windows Server 2012

Figure 2.6: WSV310 announces a new RDMA partner for Windows Server 2012

2.7. The X-IO Setup

- Server: 3 HP Servers with Romley motherboards, dual Intel CPUs (accessed by a single client)

- Storage: QLogic FC HBAs, 10 X-IO ISE-2 Storage Systems

- Networking: Multiple Mellanox 54Gbps InfiniBand FDR NICs, Mellanox InfiniBand FDR Switch

- File Server configuration: Non-clustered File Servers

- Showcased at: Partner Pavilion (TechEd North America only)

- Partner Press Release: X-IO Exceeds 15 Gigabytes of Throughput in Demonstration Using Windows Server 2012 RC and a Single Rack of Equipment

Figure 2.7: X-IO demo showcasing 15Gbytes/sec throughput from a single SMB 3.0 client going to 3 non-clustered file servers and then to a single X-IO rack.
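To get a feel for what 15Gbytes/sec means for each component in this setup, the Python sketch below splits the total across the 3 file servers and the 10 ISE-2 storage systems. It assumes the load is spread evenly, which the demo description does not state, and it ignores protocol overhead on the 54Gbps links. The names are illustrative.

```python
import math

# Figures quoted for the X-IO demo.
TOTAL_GBYTES = 15      # aggregate throughput seen by the single SMB 3.0 client
FILE_SERVERS = 3
STORAGE_SYSTEMS = 10   # X-IO ISE-2 units
LINK_GBPS = 54         # per InfiniBand FDR port

# Assumption: traffic is spread evenly across servers and storage systems.
per_server_gbytes = TOTAL_GBYTES / FILE_SERVERS
per_storage_gbytes = TOTAL_GBYTES / STORAGE_SYSTEMS

# Assumption: 54 Gbps is usable bandwidth, with no protocol overhead.
link_gbytes = LINK_GBPS / 8
min_client_links = math.ceil(TOTAL_GBYTES / link_gbytes)

print(f"Per file server: {per_server_gbytes:.1f} Gbytes/sec")
print(f"Per ISE-2 system: {per_storage_gbytes:.1f} Gbytes/sec")
print(f"Minimum 54Gbps links on the client: {min_client_links}")
```

With these assumptions, each file server carries about 5Gbytes/sec, which fits within a single 54Gbps port, while the client needs at least three such links. That matches the "multiple NICs" in the networking line above.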

3. Conclusion

These 7 configurations are just a sample of what’s possible and there are many more potential combinations of all these interesting technologies. We also disclosed (as part of session WSV310) the list of partners below, which includes additional Cluster-in-a-Box solutions coming from other partners. They are all working to deliver amazing new ways to provide SMB 3.0 remote file storage with Windows Server 2012.

Figure 3: WSV310 shares a list of partners working on CiB solutions.

If you’re attending TechEd Europe this week, you will have a great chance to see some of these configurations, partners and sessions. Be sure to check the list of TechEd Europe sessions related to the Windows Server 2012 File Server I published in a previous blog post.

While I am showcasing these CiB systems as File Server clusters here (since I am a PM on the File Server team), I would like to stress that these boxes are also excellent for running other roles like Hyper-V clusters and SQL Server clusters. Obviously, the TechEd event covers many of these other roles and workloads and I encourage you to check out these talks as well (after you’re done with the File Server sessions :-).