Preliminary performance results with Windows Server 2012 Beta and SMB Direct (SMB over RDMA)

If you’re reading up on Windows Server 2012, you probably saw that using file storage for your Hyper-V and SQL Server data is one of our key new scenarios. You can read more about this in the TechNet article called “High-Performance, Continuously Available File Share Storage for Server Applications” at https://technet.microsoft.com/en-us/library/hh831399.aspx. We have already shared some preliminary data on the improved performance of the Windows Server 2012 file server and this post provides an update on those numbers.

Windows Server 2012 Developer Preview - September 2011

Back in September of 2011, we released a white paper providing a preliminary analysis of the performance of Windows Server 2012 SMB with four 10GbE adapters. This paper focused on the SMB Multichannel feature using the Windows Developer Preview. You can find it at https://msdn.microsoft.com/en-us/library/windows/hardware/hh457617. This paper shows the use of four 10GbE adapters combined via SMB Multichannel to achieve a maximum throughput of 4300 MB/sec and over 300,000 1KB IOPs.

At that time, we also shared performance data in several presentations at the //build conference and SNIA’s Storage Developers Conference (SDC 2011). You can find details on those presentations at https://blogs.technet.com/b/josebda/archive/2011/11/27/links-to-build-sessions-on-storage-networking-and-hyper-v.aspx and https://blogs.technet.com/b/josebda/archive/2011/12/16/snia-s-storage-developer-conference-sdc-2011-content-slides-and-videos-now-available-for-download-including-smb-2-2-details.aspx. The SDC presentation on SMB Direct included details such as delivering 160,000 1KB IOPs using a Mellanox ConnectX-2 QDR InfiniBand card.

Windows Server 2012 Beta – February 2012

Details on the Windows Server 2012 Beta release and its new SMB capabilities were recently posted on the Windows Server blog at https://blogs.technet.com/b/windowsserver/archive/2012/03/15/windows-server-8-taking-server-application-storage-to-windows-file-shares.aspx.

With that, many partners and customers have been asking us about the performance for File Servers and the new SMB with this new pre-release version, especially with SMB Direct. The team is hard at work testing and producing additional white papers, but we don’t have any documents planned for release this month.

In the meantime, since I’m delivering a presentation at SNW Spring and sharing some new numbers, I thought of using this blog post to share some limited performance results for a specific configuration, so we can show some of the progress we made since last September.

Windows Server 2012 Beta results – SMB Direct IOPs, bandwidth and latency

These results come from a couple of servers that play the roles of SMB server and SMB client. The client in this case was a typical server-class computer using an Intel Westmere motherboard with two Intel Xeon L5630 processors (2 sockets, 4 cores each, 2.10 GHz). For networking, it was equipped with a single RDMA-capable Mellanox ConnectX-2 QDR InfiniBand card sitting in a PCIe Gen2 x8 slot. For these tests, the IOs went all the way to persistent storage (using 14 SSDs). We tested three IO sizes: 512KB, 8KB and 1KB, all reads.

| Workload | IO Size | IOPS | Bandwidth | Latency |
|---|---|---|---|---|
| Large IOs, high throughput (SQL Server DW) | 512 KB | 4,210 | 2.21 GB/s | 4.41 ms |
| Small IOs, typical application server (SQL Server OLTP) | 8 KB | 214,000 | 1.75 GB/s | 870 µs |
| Very small IOs (high IOPs) | 1 KB | 294,000 | 0.30 GB/s | 305 µs |

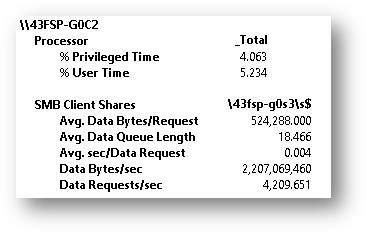

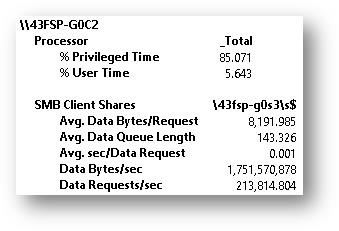

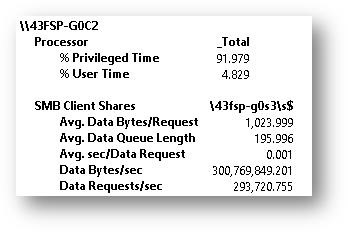

Here are some snapshots of the performance counters for the SMB client computer while the tests were running:

While doing large IOs (512KB, typical in data warehousing workloads), you can see good throughput with low latency. You can also see a healthy number of 8KB IOs (common in several workloads, including online transaction processing) with even lower latency. The third row of the table uses 1KB reads, which are not a typical workload but are commonly used to showcase high IOPs.
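As a quick sanity check, the bandwidth column should be roughly the IOPS multiplied by the IO size. The short Python sketch below recomputes it from the figures in the table. It assumes that "KB" means 1,024 bytes and "GB" means 10^9 bytes (the post does not state which convention was used), and the function and variable names are just illustrative.

```python
# Cross-check the Bandwidth column of the table: bandwidth = IOPS x IO size.
# Assumption (not stated in the post): 1 KB = 1024 bytes, 1 GB = 10**9 bytes.

ROWS = [
    # (label, IO size in KB, IOPS, reported bandwidth in GB/s)
    ("512 KB reads", 512, 4_210, 2.21),
    ("8 KB reads", 8, 214_000, 1.75),
    ("1 KB reads", 1, 294_000, 0.30),
]


def bandwidth_gb_per_s(io_size_kb: int, iops: int) -> float:
    """Return the bandwidth in GB/s implied by an IO size and an IOPS figure."""
    bytes_per_second = io_size_kb * 1024 * iops
    return bytes_per_second / 1e9


if __name__ == "__main__":
    for label, size_kb, iops, reported in ROWS:
        computed = bandwidth_gb_per_s(size_kb, iops)
        print(f"{label:>12}: computed {computed:.2f} GB/s, reported {reported:.2f} GB/s")
```

Under those unit assumptions, the computed values agree with the table to two decimal places for all three workloads.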

Please keep in mind that the results above use a single network interface. Using SMB Multichannel, we can use several interfaces at once to achieve better results, as we did in the white paper that covered four non-RDMA 10GbE cards, mentioned at the beginning of this post.

Note that the latency value on the table maps to the “Average seconds per data request” performance counter shown in the screenshots. This essentially measures the time it takes to fulfill the IO from the SMB client perspective, including the round trip time to the SMB server and the time it takes to complete the IO to disk on the SMB server side.
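Because the latency is measured end to end from the client, it can be combined with the IOPS figure to estimate how many IOs were in flight on average during each test. The sketch below applies Little's Law (average number in flight = throughput x average latency) to the table values. This is only a back-of-the-envelope estimate: it assumes a steady state, and the queue depths actually configured for these tests are not given in this post.

```python
# Estimate the average number of outstanding IOs using Little's Law:
#   outstanding = IOPS x average latency (in seconds)
# Assumption: steady-state load. These are derived estimates, not measured
# or configured queue depths.

ROWS = [
    # (label, IOPS, average latency in seconds)
    ("512 KB reads", 4_210, 4.41e-3),
    ("8 KB reads", 214_000, 870e-6),
    ("1 KB reads", 294_000, 305e-6),
]


def implied_outstanding_ios(iops: float, latency_seconds: float) -> float:
    """Return the average number of IOs in flight implied by Little's Law."""
    return iops * latency_seconds


if __name__ == "__main__":
    for label, iops, latency in ROWS:
        outstanding = implied_outstanding_ios(iops, latency)
        print(f"{label:>12}: about {outstanding:.0f} IOs in flight on average")
```

With the numbers from the table, this works out to roughly 19, 186 and 90 outstanding IOs for the 512KB, 8KB and 1KB tests respectively.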

Even better results on the horizon

The SMB team is still tuning the performance of the software, so the work is not quite done yet. The performance team is also hard at work testing with the latest generation of motherboards and CPUs. For instance, we just got hold of new PCIe Gen 3 cards for both storage and networking, including the latest InfiniBand cards that can transfer 54Gbps. The performance team is also looking into results for systems using multiple RDMA cards at once with SMB Multichannel. We’re not quite ready to release the results of those tests yet, but the numbers are better :-)

P.S.: Even better results became available after this post. Read more at https://blogs.technet.com/b/josebda/archive/2012/05/06/windows-server-2012-beta-with-smb-3-0-demo-at-interop-shows-smb-direct-at-5-8-gbytes-sec-over-mellanox-connectx-3-network-adapters.aspx