Cookdown example - between two or more workflows

Thought I would do something useful while sitting on the airplane today and write up a cookdown example expanding on my last post. This will be as straightforward as possible, with mostly just screenshots of the steps I took to share a data source between more than one monitoring workflow.

In this example, I’ll show how to share a data source between a monitor and a performance collection rule, as depicted in the workflow below. The scenario is to count files in a directory. The monitor will change state, and optionally generate an alert, if the number of files meets or exceeds the specified threshold; the collection rule will simply collect the number of files as performance data to be used in views and/or reports.

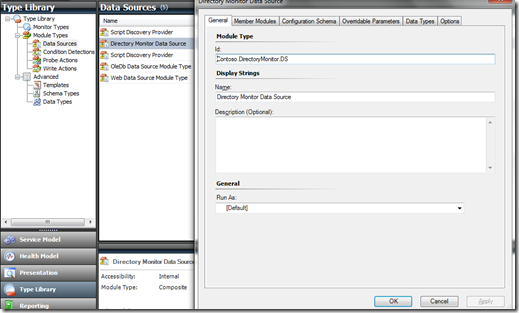

Open the Authoring Console (AC) and create a new MP. Then create a new data source in the Type Library.

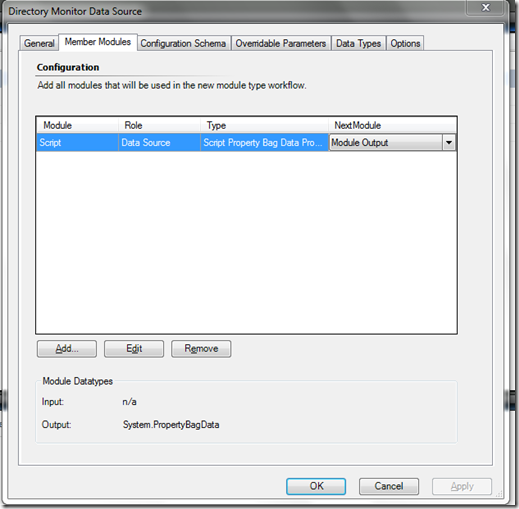

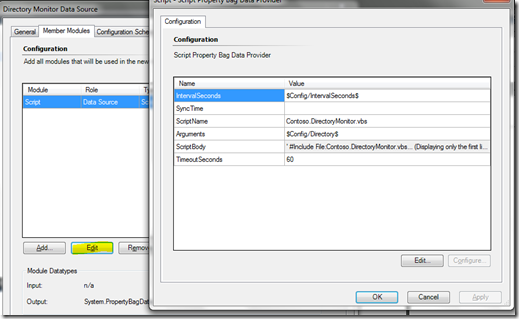

Add a script property bag data provider module.

Pass in configuration elements using the $Config variable notation.

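The variables passed into the module are $Config/IntervalSeconds$ and $Config/Directory$. IntervalSeconds controls how often the script runs, and Directory is passed to the script as its argument.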

SyncTime is optional and not used in this example. Enter the script name with the .vbs extension, and use a TimeoutSeconds value of 60.

It’s okay to use a $Config element for TimeoutSeconds, but in this example I hard-coded it. The only reason I hard-coded it here is to reduce the likelihood that the MP author breaks cookdown while authoring monitors and rules that use this data source. The thing to remember about cookdown is that the configuration passed to the data source must be identical across all workflows that use it; otherwise, cookdown will not be performed.
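For reference, the script member module described above might look roughly like this in the MP XML. This is a sketch rather than the exact AC output: it assumes the module is Microsoft.Windows.TimedScript.PropertyBagProvider referenced through a Windows! alias (the alias name depends on your MP's References section), and the member ID Script is hypothetical. Note that TimeoutSeconds is hard-coded while the other two values come from $Config.

```xml
<!-- Script member module inside the composite data source (sketch).
     The Windows! alias and the Script ID are assumptions. -->
<DataSource ID="Script" TypeID="Windows!Microsoft.Windows.TimedScript.PropertyBagProvider">
  <!-- How often the script runs; shared by every workflow using this data source -->
  <IntervalSeconds>$Config/IntervalSeconds$</IntervalSeconds>
  <!-- Optional; left empty because it is not used in this example -->
  <SyncTime />
  <ScriptName>Contoso.FileCount.vbs</ScriptName>
  <!-- The directory becomes WScript.Arguments(0); the quotes keep paths with spaces together -->
  <Arguments>"$Config/Directory$"</Arguments>
  <ScriptBody><![CDATA[script goes here]]></ScriptBody>
  <!-- Hard-coded so monitor and rule authors cannot accidentally diverge -->
  <TimeoutSeconds>60</TimeoutSeconds>
</DataSource>
```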

Script body: edit it, and remember to wrap the script in a CDATA section:

```xml
<![CDATA[script goes here]]>
```

If you don’t include the CDATA section, characters that XML reserves, such as the & (concatenation) and < (less than) operators in the script, will not be processed correctly. With the CDATA section, you can simply paste your script as-is: everything inside it is treated as literal text rather than XML markup, so the MP XML can still be read by Operations Manager.
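To illustrate the difference, here is a hypothetical one-line VBScript snippet (not part of the sample script) stored in a ScriptBody element both ways. Without CDATA, every & and < has to be written as an XML entity; inside CDATA, the script text is pasted unchanged.

```xml
<!-- Without CDATA: reserved characters must be escaped as XML entities -->
<ScriptBody>If iCount &lt; 10 Then sMsg = "Count: " &amp; iCount</ScriptBody>

<!-- With CDATA: the script text is taken literally -->
<ScriptBody><![CDATA[If iCount < 10 Then sMsg = "Count: " & iCount]]></ScriptBody>
```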

Here is the sample script you can paste into the XML:

```vbscript
<![CDATA[
' Create the OpsMgr property bag object
Dim oAPI, oBag
Set oAPI = CreateObject("MOM.ScriptAPI")
Set oBag = oAPI.CreatePropertyBag()

' ----------------------------------------- Script logic start
' The directory is passed in as the first script argument
Dim oArgs, sDirectory
Set oArgs = WScript.Arguments
sDirectory = oArgs(0)

' Set up file system objects
Dim oFso, oFolder, iCount
Set oFso = CreateObject("Scripting.FileSystemObject")

If oFso.FolderExists(sDirectory) Then
    Set oFolder = oFso.GetFolder(sDirectory)

    ' Count the files directly in the directory (subfolders are not counted).
    ' CLng rather than CInt, which overflows above 32,767 files.
    iCount = CLng(oFolder.Files.Count)
    ' ----------------------------------------- Script logic end

    Call oBag.AddValue("Directory", sDirectory)
    Call oBag.AddValue("Count", iCount)
Else
    ' ----------------------------------------- May be used to catch errors for another workflow
    ' Severity 2 = warning
    Call oAPI.LogScriptEvent("Contoso.FileCount.vbs", 511, 2, "Directory " & sDirectory & " does not exist.")
End If

' Hand the property bag back to the workflow
Call oAPI.Return(oBag)

' Testing only: exactly one of these events per interval means cookdown is working
Call oAPI.LogScriptEvent("Contoso.FileCount.vbs", 511, 0, "Cookdown script ran.")
]]>
```

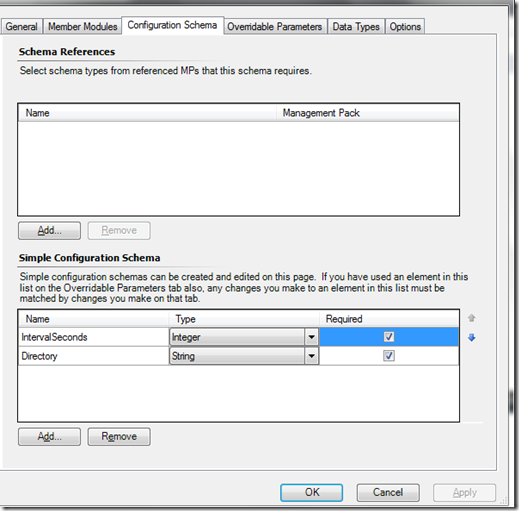

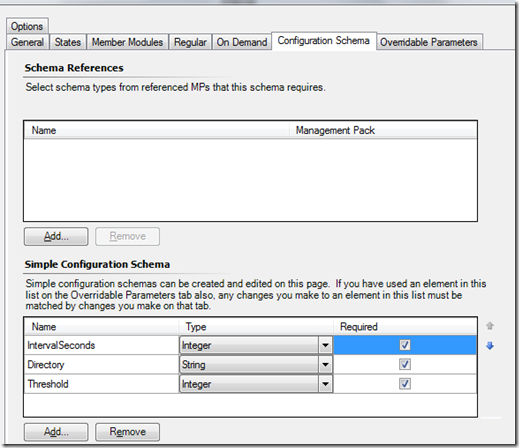

Now on the Configuration Schema tab we need to define the configuration item names and data types. Make sure the data types match up throughout your workflows.

For cookdown to work, be careful about which parameters you allow the operator to override. We will not specify overrideable parameters at this point, because they are defined on the monitor type. Just save the new data source.
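Behind the Configuration Schema tab, the data source definition ends up roughly like the sketch below. The ID Contoso.FileCount.DataSource and the System! and Windows! aliases are assumptions, the script member module's settings are abbreviated to a comment, and the xsd prefix assumes the usual XML Schema namespace declaration on the MP. There are no OverrideableParameters yet, for the reason given above.

```xml
<DataSourceModuleType ID="Contoso.FileCount.DataSource" Accessibility="Internal" Batching="false">
  <!-- Configuration schema: names and types must match what monitors and rules pass in -->
  <Configuration>
    <xsd:element minOccurs="1" name="IntervalSeconds" type="xsd:integer" />
    <xsd:element minOccurs="1" name="Directory" type="xsd:string" />
  </Configuration>
  <!-- No OverrideableParameters here; they are exposed on the monitor type instead -->
  <ModuleImplementation Isolation="Any">
    <Composite>
      <MemberModules>
        <DataSource ID="Script" TypeID="Windows!Microsoft.Windows.TimedScript.PropertyBagProvider">
          <!-- IntervalSeconds, SyncTime, ScriptName, Arguments, ScriptBody, TimeoutSeconds -->
        </DataSource>
      </MemberModules>
      <Composition>
        <Node ID="Script" />
      </Composition>
    </Composite>
  </ModuleImplementation>
  <!-- Each run emits a property bag containing Directory and Count -->
  <OutputType>System!System.PropertyBagData</OutputType>
</DataSourceModuleType>
```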

Now it’s time to create a new monitor type, as follows.

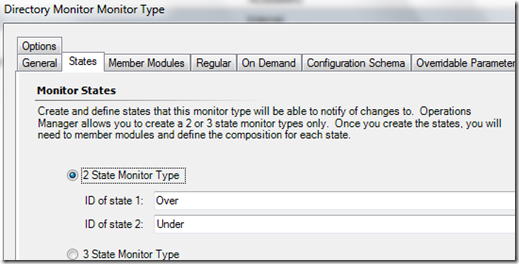

Define two states: Over and Under.

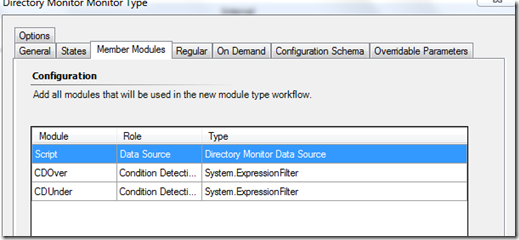

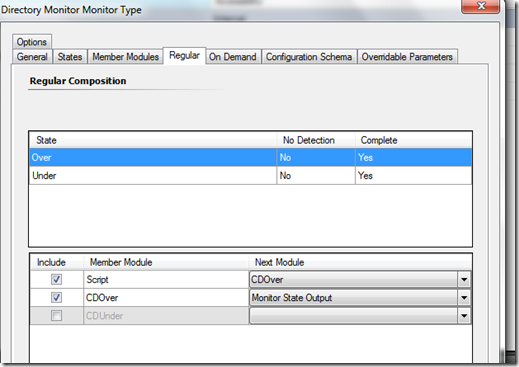

On the Member Modules tab, add the new data source and two expression filters, one to match each state (the unhealthy and healthy states map to CDOver and CDUnder, respectively).

The expression filters should look like the image below for this example. Remember to change the type to Integer, because the Count value in the property bag is an integer. The CDOver operator is GreaterEqual, and the CDUnder operator is Less.

Also notice we are passing in a new $Config element here – Threshold.
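In XML, the monitor type's member modules might look something like this sketch. The module IDs DS, CDOver and CDUnder, the data source ID Contoso.FileCount.DataSource and the System! alias are assumptions; the XPath Property[@Name='Count'] selects the Count value the script adds to the property bag.

```xml
<MemberModules>
  <!-- The shared data source; only IntervalSeconds and Directory are passed to it -->
  <DataSource ID="DS" TypeID="Contoso.FileCount.DataSource">
    <IntervalSeconds>$Config/IntervalSeconds$</IntervalSeconds>
    <Directory>$Config/Directory$</Directory>
  </DataSource>
  <!-- Unhealthy: Count >= Threshold -->
  <ConditionDetection ID="CDOver" TypeID="System!System.ExpressionFilter">
    <Expression>
      <SimpleExpression>
        <ValueExpression>
          <XPathQuery Type="Integer">Property[@Name='Count']</XPathQuery>
        </ValueExpression>
        <Operator>GreaterEqual</Operator>
        <ValueExpression>
          <Value Type="Integer">$Config/Threshold$</Value>
        </ValueExpression>
      </SimpleExpression>
    </Expression>
  </ConditionDetection>
  <!-- Healthy: Count < Threshold -->
  <ConditionDetection ID="CDUnder" TypeID="System!System.ExpressionFilter">
    <Expression>
      <SimpleExpression>
        <ValueExpression>
          <XPathQuery Type="Integer">Property[@Name='Count']</XPathQuery>
        </ValueExpression>
        <Operator>Less</Operator>
        <ValueExpression>
          <Value Type="Integer">$Config/Threshold$</Value>
        </ValueExpression>
      </SimpleExpression>
    </Expression>
  </ConditionDetection>
</MemberModules>
```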

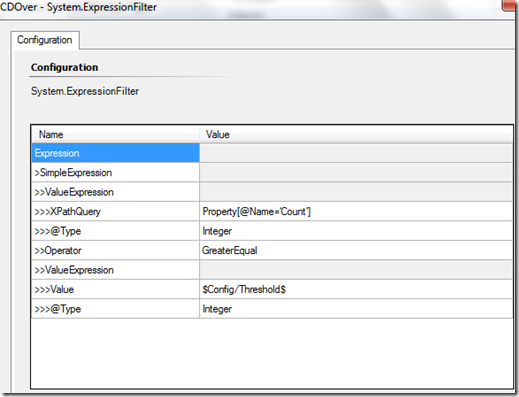

The Regular tab is where we map the states to the expression filters above. The Over state starts with the data source (script) module, which passes its output to the CDOver module; CDOver then passes to the monitor state output.

The Under state is similar, but it passes through CDUnder instead of CDOver.
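The state mapping on the Regular tab corresponds to MonitorTypeStates and RegularDetections in the monitor type XML. In this sketch, the Over/Under state IDs and the DS, CDOver and CDUnder module IDs are assumptions. Nesting reads from the inside out: the innermost Node is the data source, and its output flows to the enclosing expression filter.

```xml
<!-- Declared near the top of the UnitMonitorType -->
<MonitorTypeStates>
  <MonitorTypeState ID="Over" NoDetection="false" />
  <MonitorTypeState ID="Under" NoDetection="false" />
</MonitorTypeStates>

<!-- Inside MonitorImplementation, after MemberModules -->
<RegularDetections>
  <!-- Over: DS output flows into CDOver -->
  <RegularDetection MonitorTypeStateID="Over">
    <Node ID="CDOver">
      <Node ID="DS" />
    </Node>
  </RegularDetection>
  <!-- Under: DS output flows into CDUnder -->
  <RegularDetection MonitorTypeStateID="Under">
    <Node ID="CDUnder">
      <Node ID="DS" />
    </Node>
  </RegularDetection>
</RegularDetections>
```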

Note: The Regular tab works a little differently if your workflow includes other types of modules, such as a consolidator. A consolidator counts the number of samples that exceed the threshold, so it should be excluded from your healthy state's path; otherwise, the monitor will not work as expected.

Now we need to specify the configuration schema, much like we did on the data source. We pass in the same configuration here, but also include the new Threshold parameter.

Note: The Threshold parameter's data type is set to Integer because a file count is always a whole number. If we were sampling something that could have a decimal value, we would select Double as the data type.
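Sketched in XML, the monitor type's configuration schema repeats the data source's two elements and adds Threshold. The xsd prefix assumes the standard XML Schema namespace is declared on the MP; if Threshold needed decimals, its type would be xsd:double instead.

```xml
<Configuration>
  <!-- Same names and types as the data source, so the values pass straight through -->
  <xsd:element minOccurs="1" name="IntervalSeconds" type="xsd:integer" />
  <xsd:element minOccurs="1" name="Directory" type="xsd:string" />
  <!-- New for the monitor type: consumed by the expression filters -->
  <xsd:element minOccurs="1" name="Threshold" type="xsd:integer" />
</Configuration>
```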

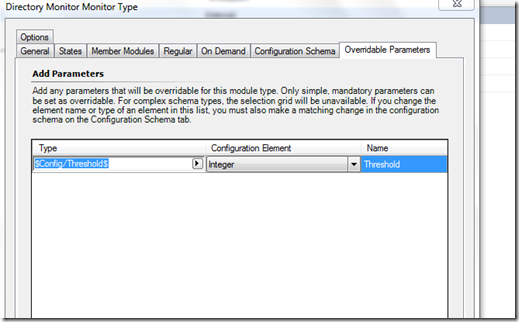

Threshold is a parameter you may want to allow the operator to override. You can see this configured on the Overrideable Parameters tab in the next image.
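In XML, exposing only Threshold might look like the sketch below. IntervalSeconds and Directory are deliberately left out, so an override cannot make the monitor's data source configuration diverge from the rule's.

```xml
<OverrideableParameters>
  <!-- Only Threshold is overrideable; it is not part of the data source configuration -->
  <OverrideableParameter ID="Threshold" Selector="$Config/Threshold$" ParameterType="int" />
</OverrideableParameters>
```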

Now save the new monitor type.

One thing to keep in mind: if this were a multi-instance cookdown scenario and the operator created an override on Threshold for specific instances, this would break multi-instance cookdown. That isn’t a concern here, though, since this is not a multi-instance scenario.

However, IntervalSeconds and Directory will be “shared” configuration in this scenario, because both the monitor and the rule pass them to the same data source. The MP author needs to be aware of this. If they specify an interval of 60 seconds on the monitor and 120 seconds on the rule, cookdown will break. Likewise, specifying different directories for the monitor and the rule will break cookdown. Cookdown between the monitor and rule works only if the interval and directory are identical. Note that string values are case-sensitive, so specifying c:\contoso on the monitor and C:\Contoso on the rule will break cookdown.
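Here is a sketch of monitor and rule configuration that would cook down. The 300-second interval, the C:\Contoso directory and the threshold of 10 are made-up values, and Contoso.FileCount.DataSource is a hypothetical data source ID. The point is that IntervalSeconds and Directory match character for character.

```xml
<!-- Monitor: configuration passed to the monitor type -->
<Configuration>
  <IntervalSeconds>300</IntervalSeconds>
  <Directory>C:\Contoso</Directory>
  <Threshold>10</Threshold>
</Configuration>

<!-- Rule: the same two values, character for character -->
<DataSource ID="DS" TypeID="Contoso.FileCount.DataSource">
  <IntervalSeconds>300</IntervalSeconds>
  <Directory>C:\Contoso</Directory>
</DataSource>

<!-- Either of these on the rule would break cookdown:
     <IntervalSeconds>120</IntervalSeconds>
     <Directory>c:\contoso</Directory> -->
```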

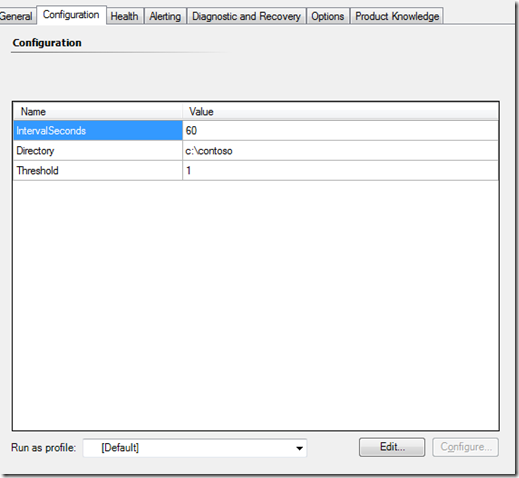

Now it’s as simple as creating a monitor with your required configuration. Create a new custom monitor, select the monitor type you just created, and fill in the elements on the Configuration tab. The rest is straightforward: set up the health states and alert configuration.
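The resulting custom monitor might look roughly like this in XML. Everything here is a sketch: the monitor and monitor type IDs, the Windows! and Health! aliases, the Windows Server computer target, the Warning health state and the configuration values are assumptions, and alert settings (an AlertSettings element after Category) are omitted.

```xml
<UnitMonitor ID="Contoso.FileCount.Monitor" Accessibility="Internal" Enabled="true"
             Target="Windows!Microsoft.Windows.Server.Computer"
             ParentMonitorID="Health!System.Health.AvailabilityState"
             Remotable="true" Priority="Normal"
             TypeID="Contoso.FileCount.MonitorType" ConfirmDelivery="false">
  <Category>AvailabilityHealth</Category>
  <!-- Map each monitor type state to a health state -->
  <OperationalStates>
    <OperationalState ID="Over" MonitorTypeStateID="Over" HealthState="Warning" />
    <OperationalState ID="Under" MonitorTypeStateID="Under" HealthState="Success" />
  </OperationalStates>
  <!-- IntervalSeconds and Directory must match the rule exactly for cookdown -->
  <Configuration>
    <IntervalSeconds>300</IntervalSeconds>
    <Directory>C:\Contoso</Directory>
    <Threshold>10</Threshold>
  </Configuration>
</UnitMonitor>
```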

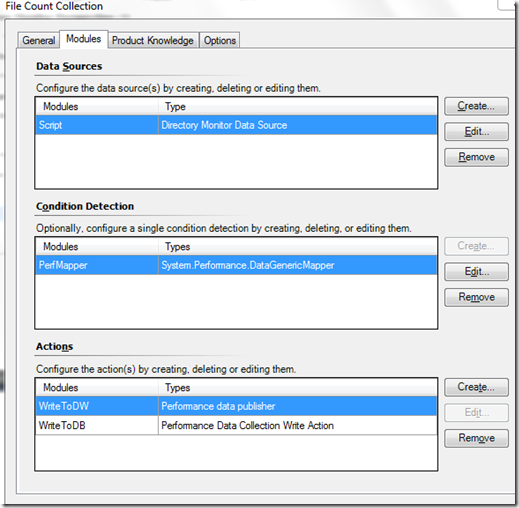

Are we cooking yet? Not quite. Now we’ll create a custom rule that uses our new script data source.

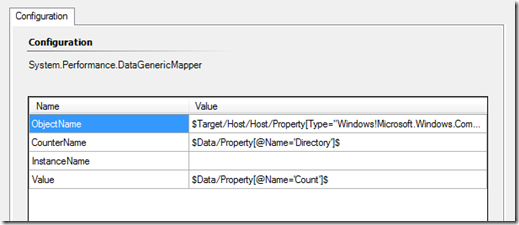

We’ll need to add a condition detection module to map the property bag data to a performance data type. This is how we’ll collect the “count” value returned by the script as performance data, and enable us to create performance views and reports. See two images below for the performance mapper configuration.
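A sketch of the rule in XML: it uses the same data source, with IntervalSeconds and Directory set to exactly the monitor's values, and maps Count to a performance value with System.Performance.DataGenericMapper. The rule and data source IDs, the object and counter names, the target, the Windows! and Perf! aliases, and the 300 / C:\Contoso values are assumptions, and the write actions are left as a placeholder.

```xml
<Rule ID="Contoso.FileCount.PerfRule" Enabled="true" Target="Windows!Microsoft.Windows.Server.Computer"
      ConfirmDelivery="false" Remotable="true" Priority="Normal" DiscardLevel="100">
  <Category>PerformanceCollection</Category>
  <DataSources>
    <!-- Must match the monitor's IntervalSeconds and Directory exactly to cook down -->
    <DataSource ID="DS" TypeID="Contoso.FileCount.DataSource">
      <IntervalSeconds>300</IntervalSeconds>
      <Directory>C:\Contoso</Directory>
    </DataSource>
  </DataSources>
  <!-- Performance mapper: turns each property bag into a performance data item -->
  <ConditionDetection ID="CD" TypeID="Perf!System.Performance.DataGenericMapper">
    <ObjectName>Contoso File Count</ObjectName>
    <CounterName>File Count</CounterName>
    <InstanceName>$Data/Property[@Name='Directory']$</InstanceName>
    <Value>$Data/Property[@Name='Count']$</Value>
  </ConditionDetection>
  <WriteActions>
    <!-- Database writer modules go here -->
  </WriteActions>
</Rule>
```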

Lastly, we’ll add the database writer modules. Here I’ve added both the operational database writer and the data warehouse writer, but it’s up to you which databases you write to. If you only care about reports, writing to the operational database isn’t necessary, and omitting it can help save space. Conversely, if you only need performance views in the console, you might choose not to include the data warehouse writer module.
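The two writers correspond to write actions like these in the rule's XML. This sketch assumes the SC! and SCDW! aliases reference Microsoft.SystemCenter.Library and Microsoft.SystemCenter.DataWarehouse.Library; the WriteAction IDs are hypothetical. Drop whichever one you don't need.

```xml
<WriteActions>
  <!-- Operational database: backs performance views in the console -->
  <WriteAction ID="WriteToDB" TypeID="SC!Microsoft.SystemCenter.CollectPerformanceData" />
  <!-- Data warehouse: backs reports -->
  <WriteAction ID="WriteToDW" TypeID="SCDW!Microsoft.SystemCenter.DataWarehouse.PublishPerformanceData" />
</WriteActions>
```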

The performance mapper module (CD above) looks similar to this.

One last thing you might want to try in your script data source is writing an event each time it executes. Sometimes you may think cookdown is working when it isn’t. This is more common with multi-instance cookdown, which is a little more difficult to author correctly.

At the end of your script, simply include this line:

```vbscript
Call oAPI.LogScriptEvent("YourCookdownScript.vbs",511,0,"Cookdown script ran.")
```

I already included this in the sample script above. Just remember to remove this event writer after testing.

Make sure only one event is written each time the interval elapses. If more than one event is written per interval, cookdown is broken somewhere.

Hopefully I wasn’t too hasty in writing this article on a short flight. Feel free to ask questions.