Alert Grooming - is it working or not?

I've run across a couple of instances where it appeared that alerts were not being groomed from the Operations Manager database. Part of the confusion came from querying the alerts raised per day by resolution state. A query such as the one below gives a rundown of the alerts raised per day that have not yet been groomed from the OpsDB, along with their current resolution state. That query is not meant to evaluate whether alert grooming is working.

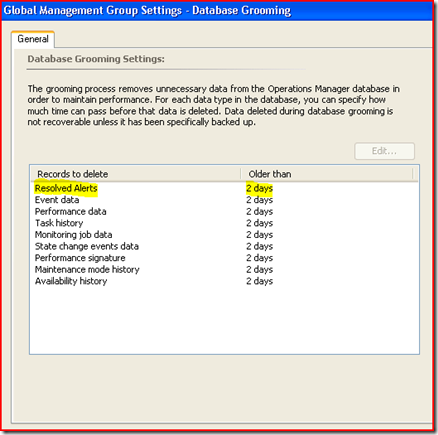

There is one important fact to keep in mind about alert grooming. Alerts that were raised before the start of your resolved alert retention period, but resolved within it, can mislead you into thinking they should have been groomed. In fact, they are just waiting for their day to come: grooming goes by when an alert was resolved, not when it was raised.
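To make the "waiting for their day" point concrete, here is a minimal Python sketch. Python is used only to model the date arithmetic; it is not part of Operations Manager. The helper `groom_eligible_on`, the 7-day retention value and the alert timestamps are all hypothetical, chosen for illustration. It assumes, as this post describes, that eligibility is computed from TimeResolved plus the resolved alert retention period.

```python
from datetime import datetime, timedelta

def groom_eligible_on(time_resolved: datetime, retention_days: int) -> datetime:
    """Hypothetical helper: the point in time from which a resolved alert is old enough to groom.

    Only TimeResolved is used; when the alert was raised plays no part.
    """
    return time_resolved + timedelta(days=retention_days)

# Hypothetical alert and settings, chosen for illustration only.
now = datetime(2024, 5, 31, 12, 0)
time_raised = now - timedelta(days=30)    # raised well before the retention window
time_resolved = now - timedelta(days=2)   # resolved inside the retention window
retention_days = 7                        # hypothetical resolved alert retention

eligible = groom_eligible_on(time_resolved, retention_days)
print(time_raised < now - timedelta(days=retention_days))  # True: raised outside the window
print(eligible > now)                                     # True: not yet eligible
print((eligible - now).days)                              # 5: days left to wait
```

Even though the alert was raised 30 days ago, it will only become eligible for grooming 5 days from now.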

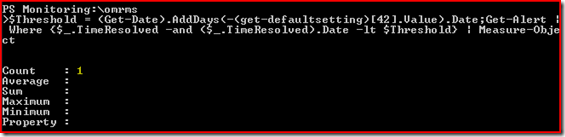

Here's a one-liner to check whether alert grooming is working as designed. Just copy and paste it into the Operations Manager Command Shell.

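```powershell
# Threshold = 00:30 UTC on the date (resolved alert retention days + 1) ago, where (get-defaultsetting)[42] is that retention setting; count resolved alerts older than it
$Threshold=(Get-Date).AddDays(-(get-defaultsetting)[42].Value-1).ToUniversalTime().Date.AddMinutes(30);Get-Alert | Where {$_.TimeResolved -and $_.TimeResolved -lt $Threshold} | Measure-Object
```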

If the Count in the output is 0, this is good: no resolved alerts older than the threshold remain, so grooming has done its job. A Count greater than 0 indicates that alert grooming is not working properly.
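Here is a minimal Python model of what the one-liner computes. It is not Operations Manager code. `groom_threshold` and `count_ungroomed` are hypothetical names, and the retention value and timestamps are made up. The sketch simplifies by working in UTC throughout, while the PowerShell version starts from local time and converts it with `ToUniversalTime()`. Like the one-liner, it steps back (retention + 1) days, snaps to midnight UTC, adds 30 minutes, and then counts alerts that have a TimeResolved older than that threshold.

```python
from datetime import datetime, timedelta, timezone

def groom_threshold(now_utc: datetime, retention_days: int) -> datetime:
    """Model of $Threshold: step back (retention + 1) days, take that
    UTC date at midnight, then add 30 minutes."""
    day = (now_utc - timedelta(days=retention_days + 1)).date()
    midnight = datetime(day.year, day.month, day.day, tzinfo=timezone.utc)
    return midnight + timedelta(minutes=30)

def count_ungroomed(resolved_times, threshold: datetime) -> int:
    """Model of Where {$_.TimeResolved -and $_.TimeResolved -lt $Threshold} | Measure-Object:
    count alerts that have a TimeResolved older than the threshold."""
    return sum(1 for t in resolved_times if t is not None and t < threshold)

now = datetime(2024, 5, 31, 9, 0, tzinfo=timezone.utc)
threshold = groom_threshold(now, retention_days=7)
print(threshold.isoformat())  # 2024-05-23T00:30:00+00:00

resolved_times = [
    None,                                               # still open: no TimeResolved
    datetime(2024, 5, 20, tzinfo=timezone.utc),         # older than the threshold
    datetime(2024, 5, 23, 0, 15, tzinfo=timezone.utc),  # just before 00:30
    datetime(2024, 5, 30, tzinfo=timezone.utc),         # still within retention
]
print(count_ungroomed(resolved_times, threshold))  # 2
```

In this made-up data, a Count of 2 would be the "grooming is not working" signal. An empty list gives 0, which matches the healthy case.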

Example: the results returned one alert that was not groomed during its last grooming interval.

The results in the above image indicate a problem with alert grooming. So how do we figure out why grooming didn't do its job? The alert grooming job is not complicated. It simply selects alerts that have a resolution state of 255 and a TimeResolved that is not NULL. It then checks whether TimeResolved plus the resolved alert retention period (in days) has reached today, and if so, it grooms the alert. So, if there is a problem, I would first check the InternalJobHistory table.

```sql
SELECT * FROM InternalJobHistory ORDER BY InternalJobHistoryId DESC
```

Read more on this here.
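The grooming rule described earlier can be modeled in a few lines: the job takes alerts with resolution state 255 and a non-NULL TimeResolved, and grooms them once TimeResolved plus the retention period has come due. This is a minimal Python sketch of that rule as this post describes it, not the actual grooming job. `Alert` and `select_for_grooming` are hypothetical names, the sample alerts are made up, and "has come due" is interpreted here as at or before the moment the job runs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

CLOSED = 255  # resolution state 255 is "Closed" in Operations Manager

@dataclass
class Alert:
    alert_id: str                       # hypothetical identifier for illustration
    resolution_state: int
    time_resolved: Optional[datetime]   # None models a NULL TimeResolved

def select_for_grooming(alerts: List[Alert], retention_days: int, now: datetime) -> List[Alert]:
    """Pick closed alerts whose TimeResolved + retention is at or before now (assumed interpretation)."""
    retention = timedelta(days=retention_days)
    return [
        a for a in alerts
        if a.resolution_state == CLOSED
        and a.time_resolved is not None
        and a.time_resolved + retention <= now
    ]

now = datetime(2024, 5, 31)
alerts = [
    Alert("a", CLOSED, datetime(2024, 5, 20)),  # closed 11 days ago: due
    Alert("b", CLOSED, datetime(2024, 5, 29)),  # closed 2 days ago: still waiting
    Alert("c", 0, None),                        # new alert: never groomed
]
print([a.alert_id for a in select_for_grooming(alerts, retention_days=7, now=now)])  # ['a']
```

If the check in the Command Shell keeps finding alerts that this rule would have selected, the job itself is the place to look, which is why InternalJobHistory comes first.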