Unable to Fail Over from one TMG node to another when using NLB in a Virtual Environment

Introduction

This post is about a scenario where a TMG administrator was trying to simulate a failover before putting the environment into production. The TMG nodes were installed in a third-party virtual environment. TMG was using integrated NLB in unicast mode, and the External TMG adapter was connected to a layer 2 switch. To simulate the failover, the TMG administrator disabled the NICs of one of the nodes. The unexpected result was that the other node suddenly stopped accepting connections from external users.

Troubleshooting

When dealing with NLB, always remember to start with the basics. Here are some good references:

- Binding order - http://support.microsoft.com/kb/894564

- VLAN Tagging Issues - http://support.microsoft.com/kb/2286940

- Connectivity Issues - http://technet.microsoft.com/en-us/library/cc783135(WS.10).aspx

- General Windows NLB Troubleshooting - http://download.microsoft.com/download/3/2/3/32386822-8fc5-4cf1-b81d-4ee136cca2c5/NLB_Troubleshooting_Guide.htm

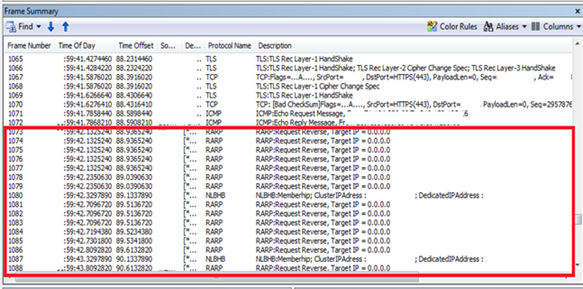

For this particular scenario, data was collected on the server whose NIC was not disabled, and the traces showed something very interesting:

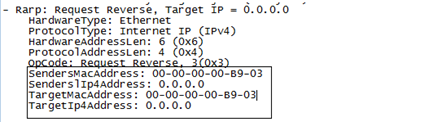

Traffic was normal up to frame number 1072, as shown above. After that we start seeing a large volume of RARP traffic, and this RARP traffic looks odd: in the trace, the source and target MAC addresses are the same and the target IP address is 0.0.0.0.

As per the definition in http://www.ietf.org/rfc/rfc903.txt we have:

This RFC suggests a method for workstations to dynamically find their protocol address (e.g., their Internet Address), when they know only their hardware address (e.g., their attached physical network address).

Since the target IP seen in the trace is 0.0.0.0, this looks strange: why would this kind of RARP traffic start the moment we disable the NLB NIC of the other node?
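To spot the same pattern in your own capture, the check described above (RARP frame, source and target MAC identical, target IP 0.0.0.0) can be sketched in a few lines of Python. This is only an illustrative sketch, not the tool used in this case: the function names `parse_rarp` and `looks_like_odd_rarp` are hypothetical, and it assumes you already have the raw bytes of an untagged Ethernet II frame exported from your trace.

```python
import socket
import struct

ETHERTYPE_RARP = 0x8035  # EtherType assigned to RARP (RFC 903)


def parse_rarp(frame: bytes):
    """Return the RARP fields of an untagged Ethernet II frame, or None.

    Hypothetical helper for illustration; VLAN-tagged frames are not handled.
    """
    if len(frame) < 14 + 28:  # Ethernet header + ARP-style payload
        return None
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != ETHERTYPE_RARP:
        return None
    # RARP reuses the ARP packet layout.
    htype, ptype, hlen, plen, oper = struct.unpack("!HHBBH", frame[14:22])
    if hlen != 6 or plen != 4:  # only Ethernet MAC + IPv4 addresses
        return None
    sha, spa, tha, tpa = struct.unpack("!6s4s6s4s", frame[22:42])
    return {
        "oper": oper,
        "source_mac": sha.hex(":"),
        "source_ip": socket.inet_ntoa(spa),
        "target_mac": tha.hex(":"),
        "target_ip": socket.inet_ntoa(tpa),
    }


def looks_like_odd_rarp(frame: bytes) -> bool:
    """True when the frame matches the pattern seen in the trace:
    source MAC == target MAC and target IP == 0.0.0.0."""
    fields = parse_rarp(frame)
    if fields is None:
        return False
    return (fields["source_mac"] == fields["target_mac"]
            and fields["target_ip"] == "0.0.0.0")
```

Running `looks_like_odd_rarp` over every frame captured after the NIC is disabled gives a quick count of how much of the traffic matches this pattern, without having to inspect each frame by hand.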

Resolution

To fix this issue, we applied the action recommended by the virtualization vendor in the article below:

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1556

This article explains how to disable the RARP traffic. After we disabled RARP, NLB worked fine even when we disabled the NLB NIC of the other node.

Author

Suraj Singh

Support Engineer

Forefront Edge Team

Technical Reviewer

Yuri Diogenes

Sr Support Escalation Engineer

Forefront Edge Team