Use Windows PowerShell to Prepare for Migration from SharePoint 2007

Summary: Marc Adam Carter talks about using Windows PowerShell to smooth the way for a large SharePoint 2007 migration.

Microsoft Scripting Guy, Ed Wilson, is here. Today our guest blogger is Marc Adam Carter…

If you’re responsible for administering a SharePoint 2013 or SharePoint 2010 farm, I’d have to say that things are looking pretty good right now with the availability of management tools. The SharePoint administration lawn is seriously greener on these farms.

Let’s face it, even if you haven’t yet migrated from an older platform, such as Microsoft Office SharePoint Server 2007 (MOSS 2007), you’re already likely aware of the library of 500 plus SharePoint-specific cmdlets that are exposed in the SharePoint Management Shell. This proprietary shell is derived from a special base class (SPCmdlet), which is installed on top of the core Windows cmdlets.

The SPCmdlet base class is included in the Microsoft.SharePoint.PowerShell namespace, which gets installed as part of the SharePoint binaries. Although this is less useful information for MOSS 2007 admins, it’s valuable to understand, and something I hope to clarify within this blog post.

So if you are like me, with one foot still in the Microsoft Office SharePoint Server 2007 (MOSS 2007) world, most of your administrative work occurs within the Central Administration pages or by using the STSADM command-line tool.

These tools are sufficient for managing our farm, but we’re preparing to upgrade soon and want to migrate content from our existing environment to our new one. As part of our content migration process, we want to spend a little time cleaning up sites so we don’t simply move stale or unused content into our new farm. We also want to identify inconsistencies that are occurring between content and our governance policy. For example, we require that all libraries and lists include a brief description to help staff identify the content, intent, or purpose of a library or list.

Leveraging the .NET Framework

Unfortunately, neither Central Administration nor STSADM are extremely effective for easily providing the details we’re looking for. Although we don’t have access to built-in SharePoint PowerShell cmdlets in MOSS 2007, we can still reference the .NET Framework and the Microsoft.SharePoint assembly to create our own scripts so that we can produce quick customizable reports.

Before diving into the script I put together, I’m compelled to apologize for it lacking a polished, ready-to-ship quality, which is standard for the level of contributions to this site. As with a large majority of scripts in my personal library, I write with the intent of efficiently accomplishing a goal. I may use a script like this one a couple times, and occasionally refer back to it. But for me, there’s no advantage of rolling it up into a function or module. It may not be the shiniest tool in my Windows PowerShell tool belt, but it’s one of my favorites.

Dependencies

There are two SharePoint requirements for this script to function as expected:

- You'll need to run this script with an account that is a member of the SharePoint administrators group. Members of the SharePoint administrators group have rights to view and manage all sites created on their servers.

- You'll need to use the Microsoft.SharePoint core class. Similarly to what I mentioned earlier, this namespace provides a path for interacting with site collections, websites, lists, and list items. The base assembly is added to a system’s Global Assembly Cache (GAC) when SharePoint is installed. So trying to execute the script from a system without SharePoint installed will result in the following error message, which tells you it couldn’t locate the assembly: “Verify that the assembly containing this type is loaded.”

Initial variable declarations

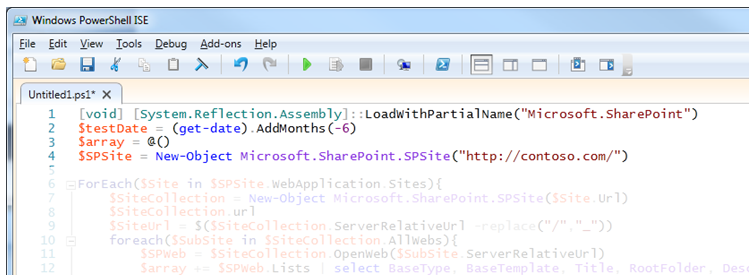

First things first. We need to load the .NET Assembly and define a few initial variables that we’ll use later in the script.

[void] [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SharePoint")

$testDate = (get-date).AddMonths(-6)

$array = @()

$SPSite = New-Object Microsoft.SharePoint.SPSite("http://contoso.com/")

Because one of the goals is to identify old or stale content, I’m defining a DateTime variable ($testDate) on line #2 to use as a comparison later when I have some objects with dates. The other static configuration is to specify the URL of the web application you want to query. The script will iterate through all site and subsite collections found under this URL.

Drilling into site collections

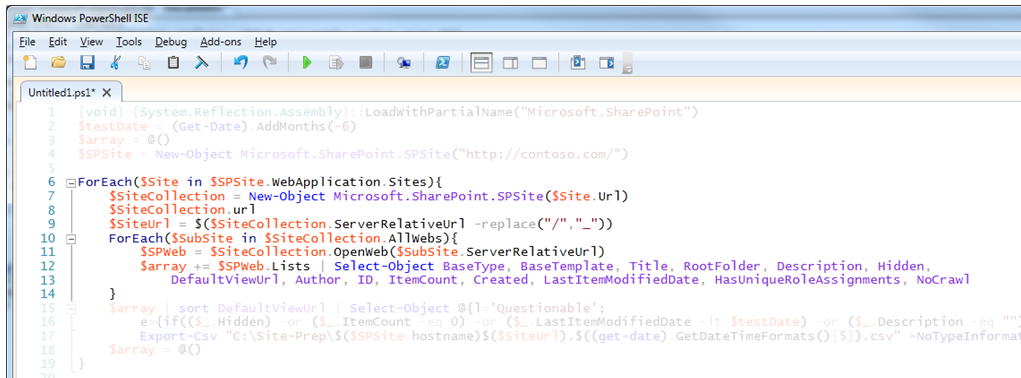

We’ll traverse through the site collections by using a couple nested ForEach loops to drill down to the website level so we can inspect the various objects of the SPList class. Entering the first ForEach loop, we’ll define the top-level site and create a temporary site collection variable ($SiteCollection). This will return all websites within the site collection in the inner ForEach loop by referencing the AllWebs property.

But before entering the inner ForEach loop, we’ll write the URL of the site collection we’re currently inspecting to the screen ($SPSiteCollection.url), so we get some feedback while running the script to see where we’re at.

We’ll also create a string variable ($SiteUrl) that we’ll use when outputting the results to a CSV file later in the outer loop. Within the inner ForEach loop, we’ll define a temporary site variable ($SPWeb) by using the OpenWeb method and passing it the relative path, then returning the website associated with the URL.

We’ve now arrived at the website level, and we can start collecting information about our SharePoint objects (for example, lists, document libraries, and picture libraries). The final step we’ll perform in the inner loop is to populate our array with the selected properties.

ForEach($Site in $SPSite.WebApplication.Sites){

$SiteCollection = New-Object Microsoft.SharePoint.SPSite($Site.Url)

$SiteCollection.url

$SiteUrl = $($SiteCollection.ServerRelativeUrl -replace("/","_"))

ForEach($SubSite in $SiteCollection.AllWebs){

$SPWeb = $SiteCollection.OpenWeb($SubSite.ServerRelativeUrl)

$array += $SPWeb.Lists | Select-Object BaseType, BaseTemplate, Title, RootFolder, Description, Hidden,

DefaultViewUrl, Author, ID, ItemCount, Created, LastItemModifiedDate, HasUniqueRoleAssignments, NoCrawl

}

I select only the properties I’m concerned about for the scope of this task, but after you’ve defined your website variable ($SPWeb), you can use the Get-Member cmdlet to inspect the methods and properties of the SPList class.

Package results and send to CSV file

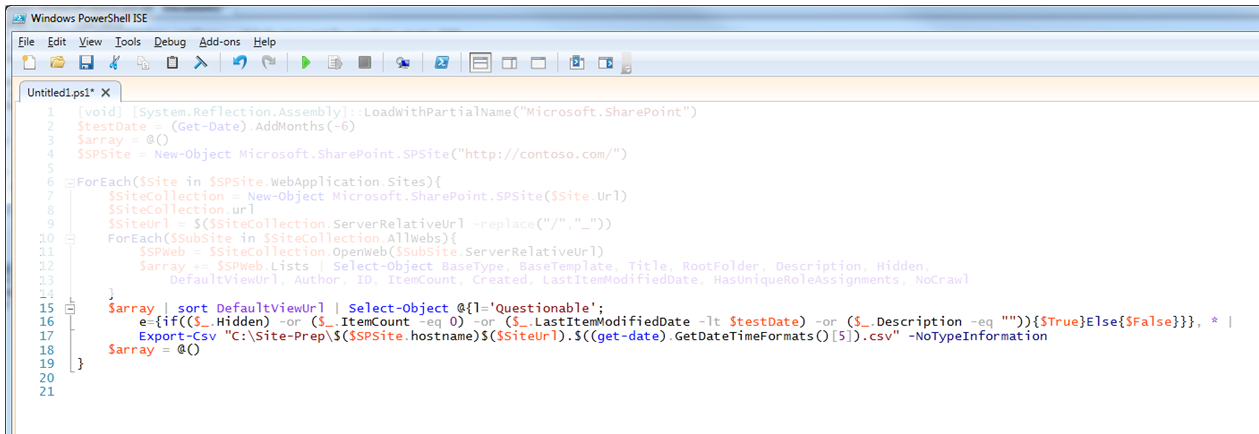

We’ve traveled down to the object level and filled our SharePoint bucket with details about each SPList object. The last stop on this ride is to package those results into a format that is easy to use and one that can be handed off to our site administrators and data owners to consume.

At this point, we’ve exited our inner loop and passed the contents of our array along the pipeline. The last little bit of “trickery” is to include some logic to help identify the problematic objects we’re looking for. To accomplish this we’ll use Select-Object to create a calculated property column labeled “Questionable.”

To build this column, we’ll evaluate a few properties within a hash table to see if they meet our criteria, and indicating that we have a suspect object. After the calculated column, we use the asterisk ( * ) to specify that we want all the other objects from the array. We then pass those objects to Export-CSV. The following table itemizes a breakdown of the evaluation criteria that is used in the calculated column:

|

Evaluation |

Meaning |

|

($_.Hidden) |

Object is hidden |

|

($_.ItemCount -eq 0) |

Object contains no items |

|

($_.LastItemModifiedDate -lt $testDate) |

Object hasn’t been modified since before $testDate |

|

($_.Description -eq "") |

Object has no description |

$array | sort DefaultViewUrl | Select-Object @{l='Questionable';

e={if(($_.Hidden) -or ($_.ItemCount -eq 0) -or ($_.LastItemModifiedDate -lt $testDate) -or ($_.Description -eq "")){$True}Else{$False}}}, * |

Export-Csv "C:\Site-Prep\$($SPSite.hostname)$($SiteUrl).$((get-date).GetDateTimeFormats()[5]).csv" -NoTypeInformation

$array = @()

Because we’ll repeat this process for each site collection within a web application, the script outputs a separate CSV file for each site collection, and lists all the subsites contained within that site collection. This allows us to inspect content for a specific site collection, depending on your topology per content database.

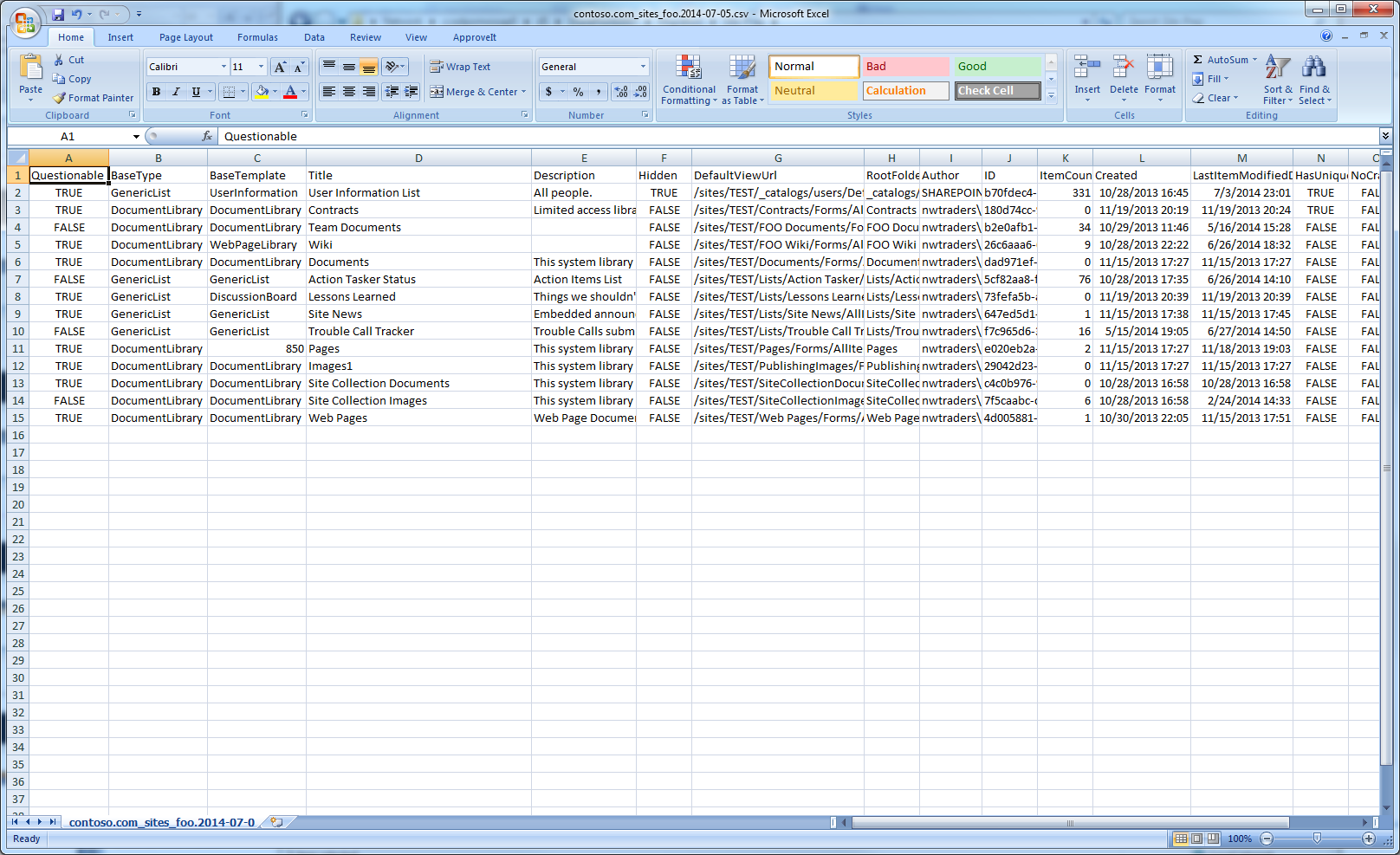

The following image provides an example of one of the CSV files generated by the script. Note the first column is our calculated column, which provides a quick reference to potentially low-hanging fruit.

Following is the complete script:

[void] [System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SharePoint")

$testDate = (Get-Date).AddMonths(-6)

$array = @()

$SPSite = New-Object Microsoft.SharePoint.SPSite("http://contoso.com/")

ForEach($Site in $SPSite.WebApplication.Sites){

$SiteCollection = New-Object Microsoft.SharePoint.SPSite($Site.Url)

$SiteCollection.url

$SiteUrl = $($SiteCollection.ServerRelativeUrl -replace("/","_"))

ForEach($SubSite in $SiteCollection.AllWebs){

$SPWeb = $SiteCollection.OpenWeb($SubSite.ServerRelativeUrl)

$array += $SPWeb.Lists | Select-Object BaseType, BaseTemplate, Title, RootFolder, Description, Hidden,

DefaultViewUrl, Author, ID, ItemCount, Created, LastItemModifiedDate, HasUniqueRoleAssignments, NoCrawl

}

$array | sort DefaultViewUrl | Select-Object @{l='Questionable';

e={if(($_.Hidden) -or ($_.ItemCount -eq 0) -or ($_.LastItemModifiedDate -lt $testDate) -or ($_.Description -eq "")){$True}Else{$False}}}, * |

Export-Csv "C:\Site-Prep\$($SPSite.hostname)$($SiteUrl).$((get-date).GetDateTimeFormats()[5]).csv" -NoTypeInformation

$array = @()

}

I hope you find this information useful and that it helps you with your SharePoint migration. Cheers!

~Marc

Thank-you, Marc, for your knowledge and time and for sharing with our readers.

I invite you to follow me on Twitter and Facebook. If you have any questions, send email to me at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, peace.

Ed Wilson, Microsoft Scripting Guy

Light

Light Dark

Dark

0 comments