Server Virtualization Series: Networking – NIC Teaming (Part 10 of 20) by Harold Wong

As you virtualize more and more of your server infrastructure, network bandwidth (and networking in general) becomes increasingly important to the overall design and architecture of your virtualization strategy. With that in mind, I want to dig a little deeper into NIC Teaming in Windows Server 2012 and make sure we all understand how it can help and when it makes sense to use this feature.

First, a quick look back at NIC Teaming prior to Windows Server 2012. In Windows Server 2008 R2 and earlier, if you wanted to take advantage of NIC Teaming, you had to make sure you purchased the “correct” network adapters, meaning ones whose vendor directly supported NIC Teaming. In other words, the NIC Teaming driver came from the vendor and not from Microsoft. There are pros and cons to this (just like everything else). One of the “cons” is that if you had a problem with this feature, you could not really get support from Microsoft, since it was a vendor-specific driver and feature and not something baked into Windows Server.

In Windows Server 2012, we now have native support for NIC Teaming, which is enabled at the operating system level. A nice benefit of this is that you can team NICs from multiple vendors in a single team, since Windows Server 2012 handles the teaming. For those of you who are not familiar with NIC Teaming, you may be asking “What exactly is NIC Teaming and why do I care?”

NIC Teaming is also known as Load Balancing and Failover (LBFO). It allows you to take two or more physical NICs and create one logical NIC, so that network traffic is load balanced between the NICs and, if one should fail, the traffic is automatically rerouted through one of the other functioning NICs. There are a few key restrictions and requirements that you need to keep in mind. I am listing them below.

- NIC Teaming is not compatible with the following features:

  - SR-IOV: This feature enables data to be delivered directly to the NIC, so it does not pass through the networking stack. NIC Teaming needs the traffic to pass through the stack in order to deal with it.

  - RDMA: Just like SR-IOV, this feature enables data to be delivered directly to the NIC, so it does not pass through the networking stack that NIC Teaming relies on.

  - Native host Quality of Service (QoS): Since QoS sets bandwidth limitations at the host level, it reduces the overall throughput through a NIC Team.

  - TCP Chimney: This feature offloads the entire networking stack to the NIC so, just like SR-IOV and RDMA, there is no way for the OS to interact with the network traffic.

  - 802.1X Authentication

- The NICs should have passed WHQL (Windows Hardware Quality Labs) testing.

- The NICs have to be Ethernet based so WWAN, WLAN, Bluetooth and Infiniband are not supported.

- You can have up to 32 NICs in a Team.

- If a NIC Team will be used within a Hyper-V VM, the Team can only have two members (for a supported configuration).

- Teaming of NICs whose connections run at different speeds is not supported.

  - You can have multiple NICs in a team that support different maximum speeds, but they should all be running at the same speed (e.g. two 100 Mbps NICs and one 1 Gbps NIC that is running at 100 Mbps).

  - You can use a NIC of a different speed as the standby NIC.
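As a rough sketch of how the speed and standby points above might be checked and applied from PowerShell: the team name "NICTeam1" and the adapter names "NIC1", "NIC2" and "NIC3" are placeholders I made up for illustration, so substitute the names that `Get-NetAdapter` reports on your own host.

```powershell
# List the physical adapters with their negotiated link speed so that
# mismatched speeds can be spotted before a team is built.
Get-NetAdapter -Physical | Sort-Object Name | Format-Table Name, InterfaceDescription, LinkSpeed, Status

# Create a team from two adapters that are running at the same speed.
# "NICTeam1", "NIC1" and "NIC2" are hypothetical names.
New-NetLbfoTeam -Name "NICTeam1" -TeamMembers "NIC1", "NIC2"

# Add a third adapter (hypothetical "NIC3", possibly a different speed)
# as the standby member of the team.
Add-NetLbfoTeamMember -Name "NIC3" -Team "NICTeam1" -AdministrativeMode Standby
```

Treat this as a starting point rather than a recipe. Run it in a lab first, because creating a team briefly interrupts connectivity on the member adapters.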

It is also good to understand the Teaming modes supported (Switch Independent, Static Teaming and LACP) along with the Load balancing modes (Address Hash and Hyper-V Port).

Switch Independent: The switch is not required to participate in the teaming. This allows the different adapters in the NIC Team to be connected to different switches if you so wish.

Static Teaming (Switch Dependent): The switch is required to participate in the teaming. The switch and the host are configured statically to define which adapter is plugged into which switch port. You MUST ensure the cables are plugged in according to that configuration or you will have issues.

LACP (Switch Dependent): The switch is required to participate in the teaming. Unlike Static Teaming, LACP (Link Aggregation Control Protocol) is a dynamic configuration that allows the switch to create the team automatically based on the links it identifies.

Address Hash: Creates a hash based on the address components of the packets (source and destination MAC addresses; source and destination IP addresses; or source and destination TCP ports together with source and destination IP addresses) and assigns packets with that hash to one of the adapters.

Hyper-V Port: This uses the VM's MAC address or the port on the Hyper-V switch that the VM is connected to as the basis for dividing traffic.
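To make the mode names a little more concrete, here is a minimal sketch of creating a team with both settings spelled out. The team and adapter names are hypothetical, and the comments show how I understand the modes described above to map onto the cmdlet's parameter values.

```powershell
# -TeamingMode accepts: SwitchIndependent, Static, Lacp
# -LoadBalancingAlgorithm accepts (among others):
#   TransportPorts  (Address Hash on TCP ports + IP addresses)
#   IPAddresses     (Address Hash on IP addresses only)
#   MacAddresses    (Address Hash on MAC addresses only)
#   HyperVPort      (Hyper-V Port)

# Hypothetical names: "NICTeam1" for the team, "NIC1"/"NIC2" for the adapters.
New-NetLbfoTeam -Name "NICTeam1" `
                -TeamMembers "NIC1", "NIC2" `
                -TeamingMode SwitchIndependent `
                -LoadBalancingAlgorithm TransportPorts
```

Switch Independent is a reasonable choice for a first experiment because it needs no configuration on the physical switch.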

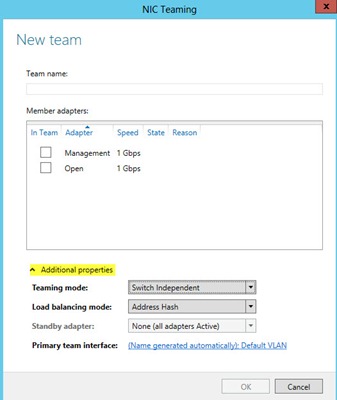

These settings can be modified from the GUI or from PowerShell. The accompanying screenshot shows how to modify them from the GUI. Admittedly, I am showing a screenshot of the New NIC Teaming Wizard, but the key here is that you would go to the properties of a given NIC Team and expand the Additional Properties section (or do that when creating the NIC Team).

If you want to use PowerShell, you would use the Set-NetLbfoTeam cmdlet with the -TeamingMode parameter (-TM for short). To configure the Load Balancing mode, you would use the -LoadBalancingAlgorithm parameter (-LBA for short).

For example: If I have a NIC Team named NICTeam1 that I wanted to change the Teaming mode to LACP and the Load Balancing mode to Hyper-V Port, I would execute the following PowerShell command:

Set-NetLbfoTeam -Name NICTeam1 -TM LACP -LBA HyperVPort
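After a change like this, it is worth confirming what the team actually ended up with. A small sketch, assuming the team is still named NICTeam1 as in the example:

```powershell
# Show the team's current teaming mode, load balancing algorithm and health.
Get-NetLbfoTeam -Name "NICTeam1" | Format-List Name, TeamingMode, LoadBalancingAlgorithm, Status

# Show each member adapter and whether it is active or on standby.
Get-NetLbfoTeamMember -Team "NICTeam1" | Format-Table Name, AdministrativeMode, OperationalStatus
```

If the team reports a degraded or down status after switching to LACP, the physical switch ports are the first place I would look, since LACP requires matching configuration on the switch side.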

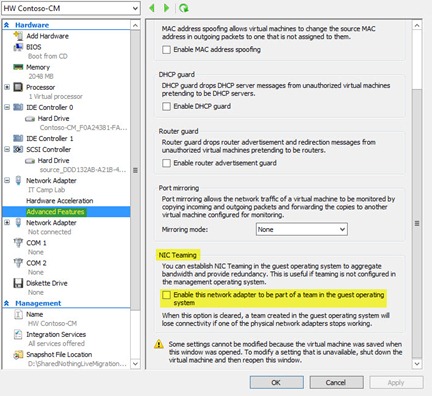

I also want to point out that you can create NIC Teams within a VM, but you are limited to Switch Independent with Address Hash. Also, each NIC must be connected to a different Hyper-V switch for a supported configuration. The nice thing is that you can still perform Live Migrations of these VMs. If you are going to enable NIC Teaming within the VM, you MUST configure the VM settings appropriately. You can configure this either from the GUI (VM Settings) or from PowerShell. The accompanying screenshot shows the GUI location.

To configure this setting from PowerShell, you would execute the following command:

Set-VMNetworkAdapter -VMName <VM Name> -AllowTeaming On
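For illustration, here is the same command with a made-up VM name filled in, followed by a quick check that the setting took effect. "TestVM01" is purely a placeholder; run this on the Hyper-V host, not inside the guest.

```powershell
# Hypothetical VM name used only for this example.
$vmName = "TestVM01"

# Allow teaming on all of the VM's network adapters.
Set-VMNetworkAdapter -VMName $vmName -AllowTeaming On

# Confirm the setting and see which Hyper-V switch each adapter is connected to.
Get-VMNetworkAdapter -VMName $vmName | Format-Table Name, SwitchName, AllowTeaming
```

The SwitchName column is a handy way to double-check that each adapter in the guest team is connected to a different Hyper-V switch.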

The last thing I want to touch on in my article is the use of VLANs with NIC Teaming.

- When using NIC Teaming, the physical switch ports that the NICs connect to should be set to trunk (promiscuous) mode.

- Do not set VLAN filters in the NICs' advanced properties settings.

- For Hyper-V hosts, the VLAN configuration should be set in the Hyper-V Switch and not as part of the NIC Teaming configuration.
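To sketch what "set the VLAN in the Hyper-V Switch" could look like in practice: the switch name "TeamSwitch", the VM name "TestVM01" and VLAN ID 10 below are all values I invented for the example. I am also assuming the team interface carries the same name as the team (NICTeam1), which is the default when a team is created.

```powershell
# Bind an external Hyper-V switch to the team interface.
# "TeamSwitch" is a hypothetical switch name; "NICTeam1" is the team interface.
New-VMSwitch -Name "TeamSwitch" -NetAdapterName "NICTeam1" -AllowManagementOS $true

# Tag the VM's traffic at the Hyper-V switch port rather than on the team.
# "TestVM01" and VLAN ID 10 are placeholders.
Set-VMNetworkAdapterVlan -VMName "TestVM01" -Access -VlanId 10

# Review the resulting VLAN assignment.
Get-VMNetworkAdapterVlan -VMName "TestVM01"
```

Keeping the VLAN assignment on the virtual switch port means the team itself stays VLAN-agnostic, which matches the guidance in the list above.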

I’m sure there is a lot more that I can write about regarding NIC Teaming, but I think the above is a very good start.

Harold Wong