US IT Pro Evangelist Build-Out of a Private Cloud (ITProsRock.com): Documentation

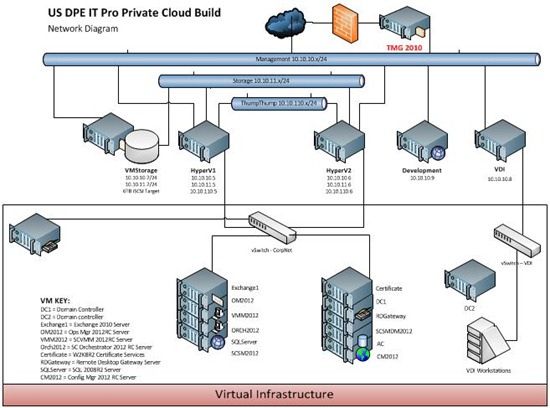

At the end of September, the field-based IT Pro Evangelists in the US got together to build out our Microsoft Private Cloud solution in our lab, which we called ITProsRock.com. The environment consists of five Dell R710 servers. We virtualized all of the core infrastructure servers and all of the management servers (System Center 2012). The lab currently runs System Center 2012 RC code, but it will be updated to RTM code and will also incorporate Windows Server 2012. Over the last six months, different members of the team have written blog posts covering some of the details of the lab, but we never posted the documentation we created along the way, so I am posting it now. I am including some of the information from the document inline in this post, and you can download the full Word document from here: https://aka.ms/hwitprosrockdoc.

Executive Summary

We built a private cloud for a number of reasons:

- Give the ITEs a “real world” experience building a private cloud using Microsoft technologies.

- Provide a demonstration platform for live events aimed at the IT Pro audience.

- Build an infrastructure that can be extended to the entire DPE organization for supporting and hosting online applications, etc.

- Create a lab environment for ongoing testing of VDI, SCVMM, self-service solutions, workflow tools, automation, public cloud connectivity, and other emerging or as-yet-unknown cloud-based technologies.

A company named Groupware in Silicon Valley will host the private cloud environment. Groups of 3 or ITEs will travel to Silicon Valley to participate in the build. Each group will start from scratch and build the hardware and software infrastructure needed for a private cloud operation. Each group will use the previous group's documentation as a base to build from and will edit this living document to reflect changes or updates that make the build more effective or efficient. When the process is complete, the document will be used as an education tool for the IT Pro audience. We also expect the participating ITEs to blog, tweet, and pursue other broad-reach opportunities related to this effort.

The Hardware

Five Dell R710 servers, each with dual Intel Xeon L5640 six-core processors and 48 GB of RAM. We used 2 TB 7200 RPM SATA drives for storage due to budget constraints. The servers were configured as follows:

Each physical server will run Windows Server 2008 R2 SP1 Enterprise Edition. To get the Dell R710s to boot from a thumb drive, press F11 at the right moment during POST to reach the boot sequence menu, then press the Down arrow (three times, if I remember correctly) to choose between the hard drive and USB.

Also, to change the hardware configuration of the hard drives in the servers, you need to press CTRL-R at the right moment to get into the RAID configuration utility. We configured all of the servers with a 200 GB logical partition for the OS and the remaining drive space as a second logical drive. The size of this second logical drive varies by server (VMSTORAGE and VDI have more space than the others).

Order of Installation

In this lab environment, we started from ground zero with no infrastructure. Because we have limited hardware resources, we are virtualizing all core aspects of our infrastructure (domain controllers, application servers, management servers, etc.). Since Microsoft clustering requires the nodes to be members of an Active Directory domain, we had to get our domain controllers installed and running in virtual machines before doing anything else. The following is a quick summary of the order in which the servers were installed.

- Install Windows Server 2008 R2 SP1 on all five physical hosts

- Install the Hyper-V role on all five servers

- On VDI, create VMs for DC1 and DC2

- Install Windows Server 2008 R2 SP1 on DC1 and DC2 and configure Active Directory appropriately

- Join HYPERV1, HYPERV2, and VMSTORAGE to AD

- Install and configure iSCSI Target software on VMSTORAGE to expose iSCSI shared storage

- Configure the iSCSI Initiator on HYPERV1 and HYPERV2 to connect to VMSTORAGE

- Install and configure Microsoft Clustering on HYPERV1 and HYPERV2

- Shut down DC1 on VDI and export it

- Copy DC1 to the cluster and import it

- Make DC1 highly available and start the VM

- Join VDI to AD

- Build out the rest of the infrastructure
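The ordering above is really a chain of prerequisites. As an illustrative sketch only (a Python model, not tooling we used in the lab), the steps can be written down as a dependency map and sorted with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The step names are hypothetical labels for the bullets above, and the prerequisites are my reading of the reasons given in the text, such as clustering requiring the nodes to be domain members.

```python
from graphlib import TopologicalSorter

# Hypothetical step names modeling the installation order described above.
# Each key maps to the set of steps that must be finished before it can start.
# These prerequisites are assumptions drawn from the text, not a formal spec.
BUILD_STEPS: dict[str, set[str]] = {
    "install_os_on_hosts": set(),
    "add_hyperv_role": {"install_os_on_hosts"},
    "create_dc_vms_on_vdi": {"add_hyperv_role"},
    "configure_active_directory": {"create_dc_vms_on_vdi"},
    "join_hosts_to_ad": {"configure_active_directory"},
    "configure_iscsi_target": {"join_hosts_to_ad"},
    "configure_iscsi_initiators": {"configure_iscsi_target"},
    # Clustering needs shared storage and domain-joined nodes.
    "create_cluster": {"configure_iscsi_initiators", "join_hosts_to_ad"},
    "move_dc1_to_cluster": {"create_cluster"},
    "join_vdi_to_ad": {"configure_active_directory"},
    "build_remaining_infrastructure": {"move_dc1_to_cluster", "join_vdi_to_ad"},
}


def install_order(steps: dict[str, set[str]]) -> list[str]:
    """Return one valid order in which every step follows its prerequisites.

    Raises graphlib.CycleError if the prerequisites are circular.
    """
    return list(TopologicalSorter(steps).static_order())


if __name__ == "__main__":
    for number, step in enumerate(install_order(BUILD_STEPS), start=1):
        print(f"{number:2}. {step}")
```

Because `join_vdi_to_ad` depends only on Active Directory in this model, the sorter may place it earlier than the list does; any order it returns still respects every prerequisite in the map.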

I made the mistake of creating the first virtual machine with a 250 GB C partition and using that VHD as the basis for building all of the other servers. Because these VHD files are dynamically expanding, most of them actually use 15 GB or less of disk space. Similarly, when building the Windows 7 VMs, I accepted the default C partition size of 127 GB and used the first VHD as the basis for creating all of the remaining Windows 7 VMs.

System Center 2012 Virtual Machine Manager is smart enough to know that actually running out of disk space on the storage unit would be a bad thing. When VMM deploys VMs to the cluster, it does not look at the actual disk space used by each VHD, but at the maximum space the VHD could grow to. In our case, that is 250 GB per server VHD, so every four server VMs require 1 TB of disk space. We have more than 16 VMs deployed to the cluster, so as far as VMM is concerned, we are out of disk space because it has to account for the maximum growth of every VHD.

The lesson learned here is that we should make the C partition of these VHDs as small as practical (for example, 20 GB) and then attach a secondary drive to the VMs that need more storage for the application being installed (such as SQL Server).
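A simplified model of the placement check described above makes the arithmetic concrete. This is not VMM's actual algorithm; it only assumes, as the text describes, that every dynamically expanding VHD is counted at its maximum size. The `VmDisks` class, the VM names, the 200 GB SQL data disk, and the 3,000 GB volume size are hypothetical values chosen for illustration, not measurements from the lab.

```python
from dataclasses import dataclass

# Hypothetical volume size, used only for this illustration.
HYPOTHETICAL_VOLUME_GB = 3_000


@dataclass
class VmDisks:
    name: str
    # Maximum (not current) size of each dynamically expanding VHD on the VM.
    max_vhd_gb: list[int]


def committed_gb(vms: list[VmDisks]) -> int:
    """Space reserved if every VHD is assumed to grow to its maximum size."""
    return sum(sum(vm.max_vhd_gb) for vm in vms)


def fits(vms: list[VmDisks], volume_gb: int) -> bool:
    """True if the worst-case growth of all VHDs fits on the volume."""
    return committed_gb(vms) <= volume_gb


# Original layout: 16 server VMs cloned from a VHD with a 250 GB C partition.
original = [VmDisks(f"server{i:02}", [250]) for i in range(16)]

# Suggested layout: a 20 GB C drive everywhere, plus a separate data VHD
# only where an application (here a hypothetical SQL Server VM) needs it.
revised = [VmDisks(f"server{i:02}", [20]) for i in range(15)]
revised.append(VmDisks("sql01", [20, 200]))

if __name__ == "__main__":
    for label, layout in (("original", original), ("revised", revised)):
        total = committed_gb(layout)
        ok = fits(layout, HYPOTHETICAL_VOLUME_GB)
        print(f"{label}: {total} GB committed, fits={ok}")
```

With these numbers the original layout commits 4,000 GB (1,000 GB for every four VMs), while the revised layout commits 520 GB, even though the data actually written to disk could be identical.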

Another mistake we initially made was putting both DC1 and DC2 into the cluster and making them both highly available. When the entire environment needed to be shut down for rack reconfiguration, we ended up in a chicken-and-egg scenario: the Cluster service could not start until it authenticated with a domain controller, and our two domain controllers could not start until the Cluster service started. We were in some deep trouble at this point. It took Kevin Remde and me a few days, working remotely, to get everything back up and running.
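The deadlock is a circular dependency, which is easy to see once the startup requirements are written down as a graph. In this sketch (Python, with hypothetical service names), each service maps to what it must wait for, and the standard library's `graphlib` raises `CycleError` when the map is circular, which is exactly the state two clustered DCs put us in. The second map models keeping one DC outside the cluster, as the installation order above implies by moving only DC1 to the cluster and leaving DC2 on VDI.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Optional

# Hypothetical model: each service maps to the services it must wait for.
BOTH_DCS_CLUSTERED = {
    # The Cluster service must authenticate against a domain controller...
    "cluster_service": {"domain_controllers"},
    # ...but highly available DC VMs only start once the cluster is running.
    "domain_controllers": {"cluster_service"},
}

ONE_DC_OUTSIDE_CLUSTER = {
    "dc2_on_vdi": set(),                   # runs on a standalone Hyper-V host
    "cluster_service": {"dc2_on_vdi"},     # can authenticate against DC2
    "dc1_clustered": {"cluster_service"},  # starts once the cluster is up
}


def startup_order(deps: dict[str, set[str]]) -> Optional[list[str]]:
    """Return a valid startup order, or None if the dependencies are circular."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as err:
        # err.args[1] lists the nodes in the cycle; first and last are the same.
        print("Circular dependency:", " -> ".join(err.args[1]))
        return None


if __name__ == "__main__":
    print(startup_order(BOTH_DCS_CLUSTERED))
    print(startup_order(ONE_DC_OUTSIDE_CLUSTER))
```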

One final lesson learned is that I should have paid closer attention and purchased the enterprise iDRAC from Dell for the five servers. I did not realize that the default iDRAC does not allow console connections once the OS starts. Also, the Belkin IP KVM I purchased does not support Windows Server 2008 R2 (I found this out afterwards), and its mouse control does not work correctly from a remote session.

Harold Wong