Using PowerShell to Measure Page Download Time

I was recently working with a customer who reported performance issues in their SharePoint 2013 proof of concept environment; specifically, page render time was very poor. I wanted to establish exactly how bad this was and put in place some basic automated tests to measure response times over a short period. I put together a quick script that measures how long a specific page takes to download and repeats the test a given number of times. Because it only measures page download time, it doesn't include any client-side rendering, so it isn't wholly accurate, but it is a good starting point for assessing performance.

Below is the script itself. It takes two parameters: -URL, the URL of the page to download, and -Times, the number of times to perform the test. By default the script uses the credentials of the currently logged-on user to connect to the URL provided.

param($URL, $Times)

$i = 0

While ($i -lt $Times)

{

$Request = New-Object System.Net.WebClient

$Request.UseDefaultCredentials = $true

$Start = Get-Date

$PageRequest = $Request.DownloadString($URL)

$TimeTaken = ((Get-Date) - $Start).TotalMilliseconds

$Request.Dispose()

Write-Host "Request $i took $TimeTaken ms" -ForegroundColor Green

$i++

}
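The script connects as the currently logged-on user. If the test needs to run as a different account, one possible variation is sketched below. The helper name New-TestWebClient is hypothetical and not part of the original script; it relies only on the standard WebClient.Credentials property and PSCredential.GetNetworkCredential() method.

```powershell
# Hypothetical helper (an assumption, not part of the original script):
# build a WebClient that uses explicit credentials when they are supplied,
# and falls back to the logged-on user's credentials otherwise.
function New-TestWebClient {
    param([System.Management.Automation.PSCredential]$Credential)

    $client = New-Object System.Net.WebClient
    if ($Credential) {
        # Convert the PSCredential into the NetworkCredential that WebClient expects
        $client.Credentials = $Credential.GetNetworkCredential()
    }
    else {
        # Same behaviour as the original script
        $client.UseDefaultCredentials = $true
    }
    return $client
}

# Example usage inside the loop (Get-Credential prompts for a user name and password):
# $Request = New-TestWebClient -Credential (Get-Credential)
# $PageRequest = $Request.DownloadString($URL)
```

Prompting once before the loop and reusing the credential object would avoid a prompt on every request.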

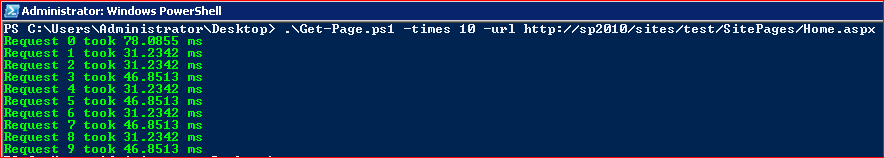

To run the script, copy the script above into a text editor, save the file with a .ps1 extension and run it from a suitable machine. The screenshot above shows an example of the script in action.
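When the test is repeated many times, a summary is often easier to read than the individual lines of output. The following is a minimal sketch of one way to collect the timings and report the average, minimum and maximum. The function names Measure-PageDownload and Get-TimingSummary, and the URL in the usage comment, are assumptions for illustration. Note that this sketch swaps the Get-Date arithmetic for System.Diagnostics.Stopwatch, which is a different timing technique from the one in the original script.

```powershell
# Hypothetical helper: time one download and return the elapsed milliseconds.
# Uses a Stopwatch rather than the Get-Date subtraction in the original script.
function Measure-PageDownload {
    param([string]$URL)

    $client = New-Object System.Net.WebClient
    $client.UseDefaultCredentials = $true
    try {
        $watch = [System.Diagnostics.Stopwatch]::StartNew()
        $null = $client.DownloadString($URL)   # discard the page content
        $watch.Stop()
        $watch.Elapsed.TotalMilliseconds
    }
    finally {
        # Release the WebClient even if the download throws
        $client.Dispose()
    }
}

# Hypothetical helper: summarise a list of timings (in milliseconds).
function Get-TimingSummary {
    param([double[]]$Timings)

    $stats = $Timings | Measure-Object -Average -Minimum -Maximum
    [pscustomobject]@{
        Count     = $stats.Count
        AverageMs = [math]::Round($stats.Average, 1)
        MinimumMs = $stats.Minimum
        MaximumMs = $stats.Maximum
    }
}

# Example usage (the URL is a placeholder):
# $timings = 1..10 | ForEach-Object { Measure-PageDownload -URL 'http://sharepoint.example.com/' }
# Get-TimingSummary -Timings $timings
```

The first request is frequently slower than the rest because of warm-up and caching, so comparing the maximum against the average can help show whether one outlier is skewing the result.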

Brendan Griffin