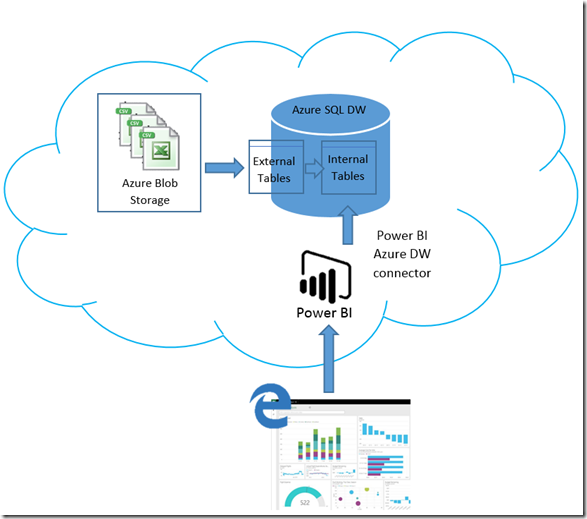

SQL Azure Data Warehouse and Power BI

In this article we will described the architecture we used during a project. It was the first time for us, Benjamin Guinebertière (@benjguin) and I, we used the new solution Azure SQL Data Warehouse .

The aim of this project is to integrate a large amount of data and propose a solution to create dashboard and reporting on top of it.

We explored several paths and we also have a lucky early access to Azure SQL Data Warehouse and we decided to test it ![]() . Finally our tests were conclusive and we decide to use it. Azure SQL Data Warehouse includes what we call PolyBase. With PolyBase, we will have the capacity to query across both relational and non-relational data with T-SQL command. This will be useful to integrate data from CSV files.

. Finally our tests were conclusive and we decide to use it. Azure SQL Data Warehouse includes what we call PolyBase. With PolyBase, we will have the capacity to query across both relational and non-relational data with T-SQL command. This will be useful to integrate data from CSV files.

And last but not least, with the general availability of Power BI, a native Azure SQL Data Warehouse connector is provided with our new BI solution.

So Benjamin and I present in this article a full Azure Cloud Data solution that involved Azure storage, Azure SQL Data Warehouse and Power BI.

Architecture Overview

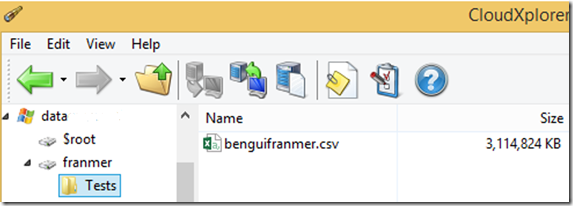

CSV Files

We stored our files in an Azure blob storage. In our sample, file are stored in the “franmer” container and we only use one big file (17GB). Note that it’s better to have several files less than 1GB than one big file to use the capability of parallel processing with Azure SQL Data Warehouse[BG1]

(from https://azure.microsoft.com/en-in/documentation/articles/sql-data-warehouse-load-with-polybase/ As a best practice, break your Azure Storage data into no more than 1GB files when possible for parallel processing with SQL Data Warehouse.)

Azure SQL Data Warehouse

Details are available via this link:

https://azure.microsoft.com/en-us/documentation/articles/sql-data-warehouse-load-with-polybase/

Below are the steps to prepare the database and load data

Create a database scoped credential

First of all, we need to have an access to our Azure Blob storage. To do that, we need to create a Master Key and a database scope credential.

Execute the script below to create the database scope credential. The identity name doesn’t affect authentication to Azure Storage

CREATE MASTER KEY;

CREATE DATABASE SCOPED CREDENTIAL myAzureStorageCredential

WITH IDENTITY = 'Funky’,

SECRET = ‘myazurestoragekey==’;

NB: the credential object in SQL Server is quite generic. It has an identity and a secret. In the case of Azure storage, the identity field will not be used. So you can use whatever you like. Funky seems good!

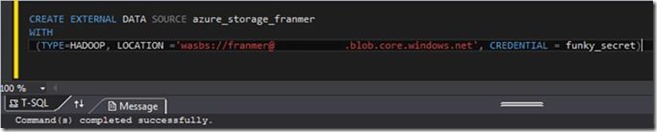

Create an external data source

As our csv files are stored on a blob storage, we have to define an external data source for our storage container. In this sample, files are in franmer container.

CREATE EXTERNAL DATA SOURCE azure_storage_franmer

WITH

(TYPE=HADOOP, LOCATION ='wasbs://franmer@myazurestorage .blob.core.windows.net', CREDENTIAL = myAzureStorageCredential)

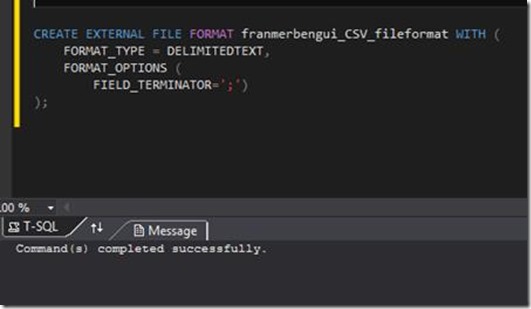

Create an external file format

In our case, we worked with csv files, with “;” for field separator. Below, the script to set the object database external file format object database:

CREATE EXTERNAL FILE FORMAT franmerbengui_CSV_fileformat WITH (

FORMAT_TYPE = DELIMITEDTEXT,

FORMAT_OPTIONS (

FIELD_TERMINATOR=';'));

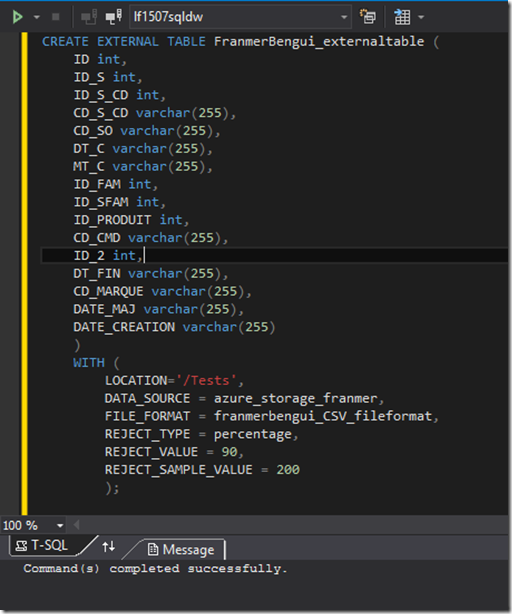

Create an external table

Now we need to create an external table. The external table definition is stored in SQL DW but the data is stored in the location specified by the data source

CREATE EXTERNAL TABLE FranmerBengui_externaltable (

ID int,

ID_S int,

ID_S_CD int,

CD_S_CD varchar(255),

CD_SO varchar(255),

DT_C varchar(255),

MT_C varchar(255),

ID_FAM int,

ID_SFAM int,

ID_PRODUIT int,

CD_CMD varchar(255),

ID_2 int,

DT_FIN varchar(255),

CD_MARQUE varchar(255),

DATE_MAJ varchar(255),

DATE_CREATION varchar(255)

)

WITH (

LOCATION='/Tests',

DATA_SOURCE = azure_storage_franmer,

FILE_FORMAT = franmerbengui_CSV_fileformat,

REJECT_TYPE = percentage,

REJECT_VALUE = 90,

REJECT_SAMPLE_VALUE = 200

);

If you want more details on how to create an external table, you can follow the link below:

https://msdn.microsoft.com/en-us/library/dn935021.aspx

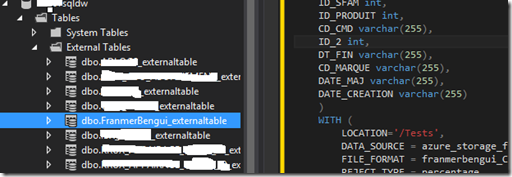

In visual studio, if we have a look in the “External Tables”, we can see our tables.

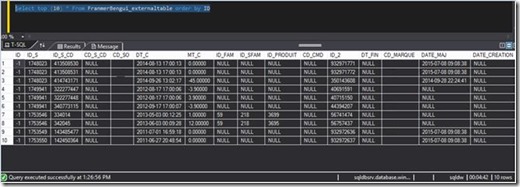

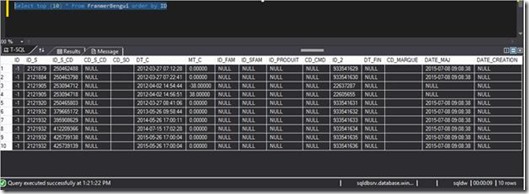

Test of the external table

We decide to run a simple query to test the performance of the external table

Select top (10) * From FranmerBengui_externaltable order by ID

Near than 5 minutes for a top 10 over 3Gb+ files. It could be better. Even a second run of the query gave the same result.

So we decide to insert the data from the external table to a classic table in Azure SQL Data Warehouse. Here the magic PolyBase will help us ![]() !

!

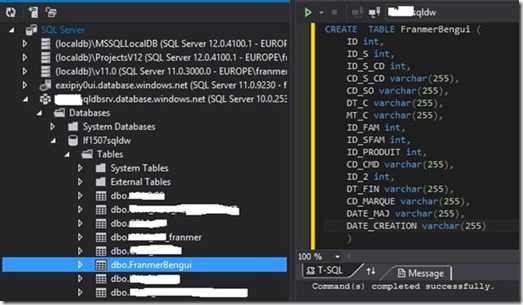

Create table into Azure SQL Data Warehouse

Before integrating data from CSV files to Azure SQL DB, we need to create regular tables.

CREATE TABLE FranmerBengui (

ID int,

ID_S int,

ID_S_CD int,

CD_S_CD varchar(255),

CD_SO varchar(255),

DT_C varchar(255),

MT_C varchar(255),

ID_FAM int,

ID_SFAM int,

ID_PRODUIT int,

CD_CMD varchar(255),

ID_2 int,

DT_FIN varchar(255),

CD_MARQUE varchar(255),

DATE_MAJ varchar(255),

DATE_CREATION varchar(255)

)

Note that the field definitions are exactly the same as for the external table.

And now we just do an INSERT INTO from the external table to the SQL table

insert into franmerbengui

select * from franmerbengui _externaltable;

More than 29 million rows integrated in less than 6 minutes

Now we test again to top 10 query against the internal table

Less than 10 seconds! Better ![]() !

!

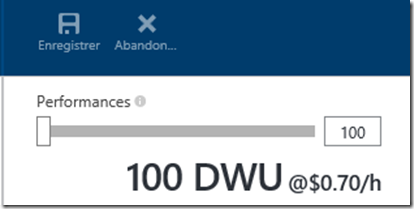

Performances

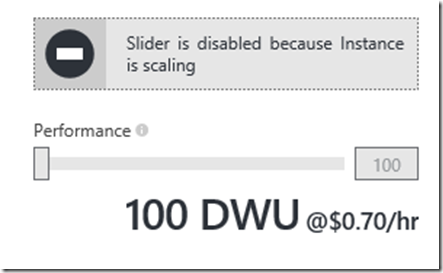

As Azure SQL Data Warehouse is an Azure service, it’s possible to scale the service. We start with the minimum and we worked most of the time with 100 DWU level. But when we needed to fire important query, we scale to upper performance level.

To scale it’s quite easy. Just go in the portal and use the slicer to select the performance needed.

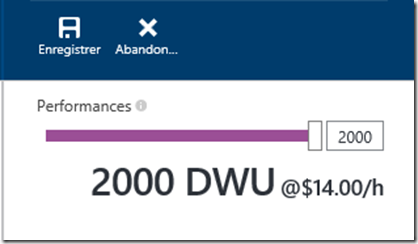

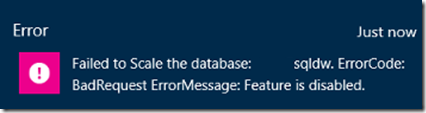

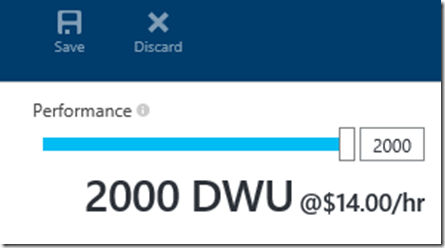

But just saw a little bug when we tried to scale up to 2000 DWU

But we get an error: “Failed to Scale the database”. “BadRequest ErrorMessage: Feature is disabled.”

The easy way to work around this problem until is fixed, is to use the following T-SQL script against the MASTER database:

ALTER DATABASE MyDw MODIFY (EDITION = 'DataWarehouse', SERVICE_OBJECTIVE = 'DW2000')

If we have a look on the portal, the service is scaling.

After 2 or 3 minutes we have the new scale

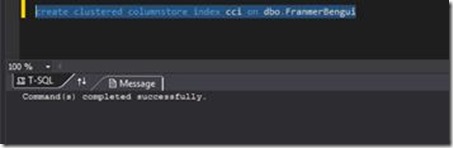

Of course, we can create a clustered columnstore index on tables if needed.

We loaded several tables in Azure SQL Data Warehouse. 2 of them are quite big:

- 1 table with 17 394 948 rows

- 1 table with 29 432 135 rows

The query below runs 12 times fasters at 2000 DWU (we talk about seconds to execute this query)

select top 50

ca.cd_source, ca.id_source, ca.cd_marque, ca.id_crm, count(*) as nb

from crm_optin_franmer oi inner join crm_ca_franmer ca

on oi.cd_source = ca.cd_source

and oi.id_source = ca.id_source

and oi.cd_marque = ca.cd_marque

and oi.id_crm = ca.id_crm

group by ca.cd_source, ca.id_source, ca.cd_marque, ca.id_crm

order by nb desc

Pausing the service

One of advantages with Azure SQL Data Warehouse, is the ability to pause and resume compute when needed. For instance, you can pause the service during weekends. The pause action returns your compute resources back to the pool of available resources in the data center and the resume action acquires the necessary compute resources needed for the DWU you've set and assigns them to your Data Warehouse instance.

You can pause au resume the service through the Azure portal, via REST APIs or with Powershell.

Pause the service:

Suspend-AzureSqlDatabase –ResourceGroupName "ResourceGroup11" –ServerName

"Server01" –DatabaseName "Database02"

Resume the service:

Resume-AzureSqlDatabase –ResourceGroupName "ResourceGroup11" –ServerName "Server01" –DatabaseName "Database02"

Another advantage of Azure SQL Data Warehouse is that compute and storage are billed separately. It means that when the service is paused, just the storage is billed. If you want more details on how pricing works, you can have a look on this page: https://azure.microsoft.com/en-us/pricing/details/sql-data-warehouse/

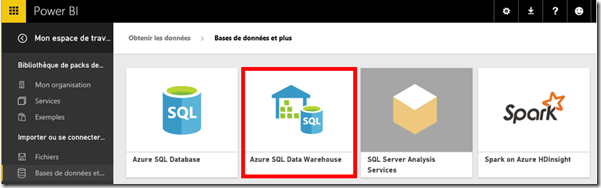

Reporting with Power BI portal

Now that we had our database, the aim is to be able to use the new Power BI portal to create reports. The new portal propose 2 connectors:

- Analysis service connector (for tabular cube)

- Azure SQL Data Warehouse connector

Although we tested both solutions, we discuss on this article about the second one. For the cube, we created an Azure VM and we just created a tabular cube connected to the SQL DW. But it adds a layer, and the performance of Azure SQL Data Warehouse let us think that we can directly query the database.

Create connection to Azure SQL Data Warehouse

On the new portal we have the possibility to connect to Azure SQL Data Warehouse (as well as Analysis Services Tabular)

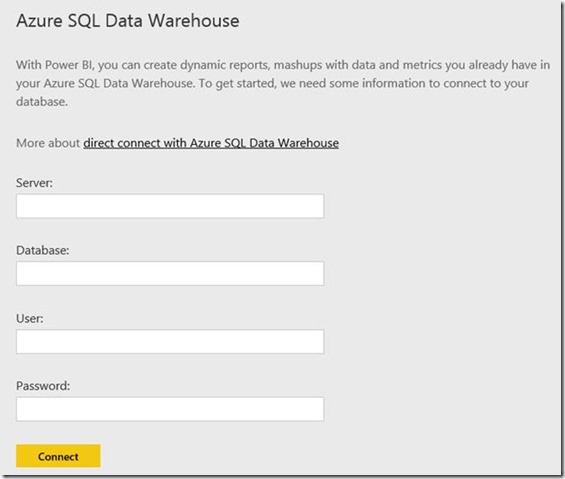

To connect to Azure SQL Data Warehouse, just fill the connection form:

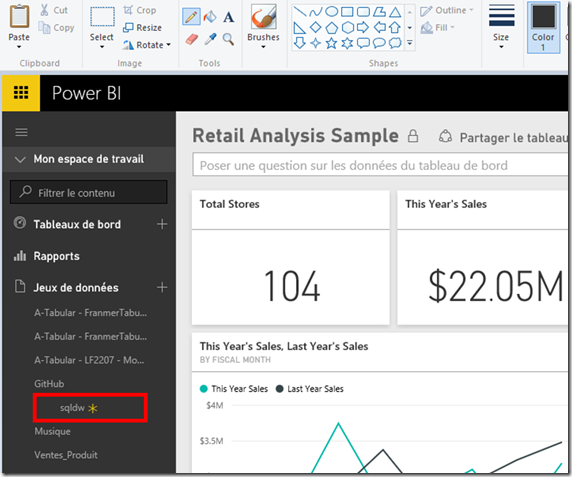

And the database will be available in the dataset section of Power BI.

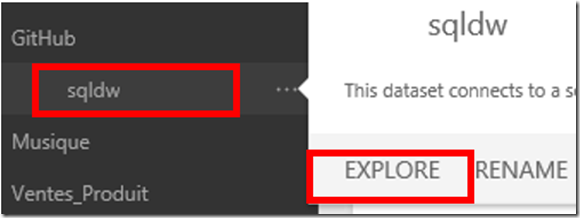

Just click on the 3 dot near the name of your database and click on “Explore”

Explore the database

Via the Power BI portal, we are able to query all the tables in our Azure SQL Data Warehouse. The response time on a table with near 30 million rows is around 6 seconds for the first hit, and near 2 seconds when we start to add value or categories.

Of course, now we have to define the structure in Azure SQL Data Warehouse to allow user to query easily the database. But with this solution we have a real short path from raw data to Power BI to create Dashboard and reports on top of huge amount of data. And if needed, the cube solution is, of course, still possible. We still investigate the BI and we heard that cool new features will coming soon. So….. Stay tuned ![]() !

!

Sessions de formation gratuites :

-

- Microsoft Virtual Academy : https://www.microsoftvirtualacademy.com/

Pour tester Windows Server 2012, Windows 8, SQL Server 2012 et SQL Server 2014 CTP2, vous pouvez télécharger gratuitement la version d’évaluation disponible sous la forme :

Windows Server 2012 :

-

- d'une image ISO : https://aka.ms/jeveuxwindows2012

- d'un fichier VHD avec un système préinstallé : https://aka.ms/jeveuxwindows2012

Evaluation SQL Server 2014 :

Evaluation SQL Server 2016 CTP2 :

Evaluation Power BI :

Testez Azure gratuitement pendant un mois :