SQL Azure DW – What is it? How it works?

Azure DW what is it? Is it the same as SQL Azure but bigger?

No. It is kind of…different.

It is a full Cloud Service from Microsoft in the Microsoft Cloud (PaaS), multi-tenant, cloud-based data warehouse ‘Platform as a Service’ offering which elastically scales on demand.

Separates storage and compute for elastic scale requirements regardless of data volume.

You could pause\resume approach for ad-hoc workloads. Summarizing: Azure DW or Cloud Data Warehouse it is a high-end massively parallel processing (MPP) data warehousing, ideal for high-scale or high-performance data marts and EDWs in the Cloud.

So, we are talking about massively parallel processing. How it works?

It is “divide to conquer”. Azure DW relays in nodes and CPUs, instead in only CPUs to process a task. Our classical SQL divides a task in different CPUs, that is parallel processing. Azure DW process a task in CPUs running in different nodes (computers).

In order to achieve this distributed architecture DW has:

Control node: The Control node manages and optimizes queries. It is the front end that interacts with all applications and connections.

Compute nodes: The Compute nodes serve as the power behind SQL Data Warehouse. They are SQL Databases that store your data and process your query

Storage: Your data is stored in Azure Blob storage. When Compute nodes interact with your data, they write and read directly to and from blob storage

(/en-us/azure/sql-data-warehouse/sql-data-warehouse-overview-what-is)

In terms of query that represents segmented data and consequently data movements (DMS). But what that means?

About SQL DW it is important to have in mind that the data will be spread across node according to distribution columns. So, choosing a good distribution column is an important aspect of achieving storage efficiency and highly performant queries.

Again, as the data is distributed against the nodes, SQL DW can perform some data movement to return the result set, the one responsible for that is the Data Movement Service. Data movement is the way which a query plan operation moves data among the nodes. When this happen, data is always moved into temporary tables. Moving data ensures that data exists on the correct nodes at the correct time to run queries.

Ok. Distribution columns?? What it means?

So first let’s make it clear some key concepts:

Distribution columns:

Behind the scenes, SQL Data Warehouse divides your data into 60 databases. Each individual database is referred to as a distribution. When data is loaded into each table, SQL Data Warehouse has to know how to divide your data across these 60 distributions. (/en-gb/azure/sql-data-warehouse/sql-data-warehouse-tables-distribute)

So the column choose as the distribution key will be use to distribute the data across nodes.

We have two types of distribution:

- Round robin which distribute data evenly but randomly. As the it sounds round robin will work distributing the data in round robin fashion, when the data is loaded, each row is simply sent to the next distribution. For this reason, Round-Robin tables often make good loading targets, but maybe not so good for queries, requiring data movement.

- Hash Distributed which distributes data based on hashing values from a single column. Hash distributed tables are tables which are divided between the distributed databases using a hashing algorithm on a single column which you select. The hashing algorithm and resulting distribution is deterministic.

Note: to simulate this demo, you will need: an azure account, a SQL DW DB and SSMS studio connected to your DW DB.

More details:

SSMS and azure DB: /en-us/azure/sql-database/sql-database-connect-query-ssms

Create SQL DW Azure: /en-us/azure/sql-data-warehouse/sql-data-warehouse-get-started-provision

T-SQL Code demo – Creating a table using Hash and Round Robin.

Hash

[sql]

CREATE TABLE Hash_example

(

id int NOT NULL,

lastName varchar(20),

Postcode varchar(6)

)

WITH

(

DISTRIBUTION = HASH ( id ) --hash distribution. Note only one column can be used

CLUSTERED COLUMNSTORE INDEX

)

;

[/sql]

Round Robin

[sql]

CREATE TABLE RoundRobin_example

(

id int NOT NULL,

lastName varchar(20),

Postcode varchar(6)

)

WITH ( CLUSTERED COLUMNSTORE INDEX );

[/sql]

Why that matters? If you join two tables using different distributed columns it could lead to a data movement and that could lead to performance issues.

Speaking in data movement...Some types of Data Movements:

BROADCAST_MOVE - This operation is frequently used when running a distribution incompatible join.

To perform this operation, each distribution broadcasts its rows to all other Compute nodes. The result is that each Compute node uses its own rows plus all the rows it receives from the other Compute nodes to create a replicated table.

SHUFFLE_MOVE - Redistributes a distributed table. The redistributed table has a different distribution column than the original distributed table. This might be used to when running incompatible joins or incompatible aggregations.

To perform this operation, SQL DW will moves each row to the correct Compute node according to distribution column of the destination table. Rows that are already stored on the correct Compute node are not copied during this operation.

How to identify a data movement? Look at the execution plan, use Explain.

Example:

[sql]

EXPLAIN --show the cost of the execution plan

SELECT COUNT(*)

FROM dbo.Hash_example HS

INNER JOIN RoundRobin_example RR

on HS.id = RR.id

[/sql]

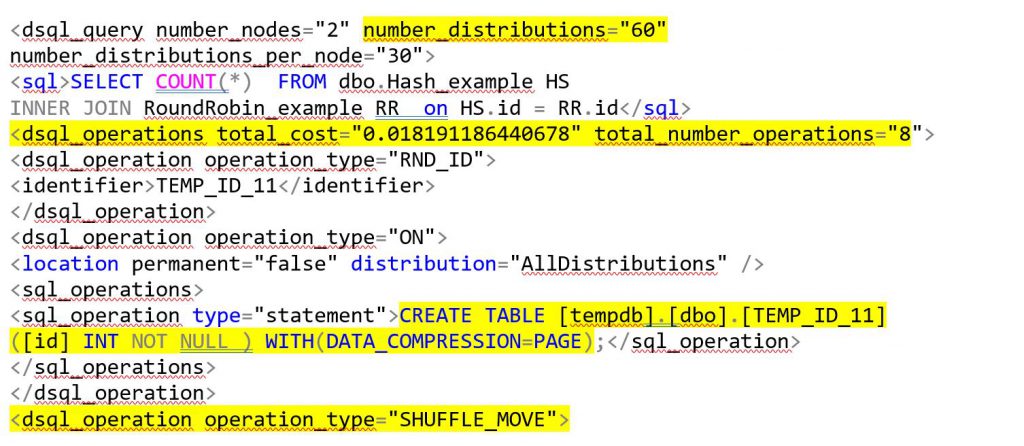

For the example above, I used Explain. Explain returns the query plan for a SQL Data Warehouse SQL statement without running the statement. In this case I have 2 tables distributed on the same column with the same datatype, so it seems everything will work as it should, but if I check the XML result for my execution plan I will realize Data movement service (DMS) had to move the data to a temporary place so that means a distribution enters in the scene. But which distribution and why? To discover the distribution, look the operation type inside of the XML ( more details, figure 1), and the reason is because I am using round robin in one of the tables and hash in the other. Remember round robin distribution in the table occurs just as the name says in a round robin fashion, so it was chosen to perform some data movement to execute the join between the columns. When you choose to use Round Robin increases the like hood of data movement.

Check more about explain: (/en-us/sql/t-sql/queries/explain-transact-sql)

Between other things what is most notable in the explain result for the query mentioned is the data movement and the query cost.

Check the results as follow:

Figure 1: Explain Query DW

Note: The data was moved to a temporary place (TEMP_ID_11) to execute the query, that means SQL has to distributed the data in order to return the result set. The operation chosen to perform this distribution was Shuffle move, you can check this inside of the operation type in the XML result, above. The number of distribution are 60. Also, note the cost for this query and operations required can be checked by the explain result.

Next post we will discuss more reasons for data movements and some possible solutions.

Liliam Leme

UK Data Platform PFE