How the CREATE_NEWKEY switch on MOM.msi saved my hide

Not too long ago our team embarked on the journey of migrating all of our clustered root management servers (RMS) to new hardware. These were exciting times, and in retrospect things went much more smoothly than we had anticipated. That said, the experience was not without its hiccups. This is the story of one of them, and of how the CREATE_NEWKEY property of MOM.msi saved us from a very labor-intensive alternative. I want to share this story for two reasons:

- Get the word out: Before this I had no idea that the CREATE_NEWKEY parameter existed, and internet searches still show it's not well indexed, which I assume means it's not well known.

- Fill in some details: The few sources that do exist on the matter (the KB article and the SMS&MOM blog post) don't say what to do if your RMS is clustered. As a result, there was at least one step where we had to make an educated guess (i.e., take a leap of faith).

How did we get here anyhow?

Our general approach for hardware migration of the RMS role was to first migrate off the old cluster onto a standalone server, and then migrate from the standalone server to the new cluster. We used the procedures covered here and here as the basis for the checklist we followed. We did this across 5 management groups, and in all cases the process went smoothly. While doing our post-migration QC, however, we noticed the following symptoms in two of our management groups:

- The RMS functioned perfectly on one of the nodes, but if the role was failed over to the second node, it would go grey in the Operations Console

- When we opened the "Administration -> Device Management -> Agentless Managed" view and looked for the entry for the clustered root management server's network name, we saw only one of the cluster's nodes listed under "Monitored By"

We weren't sure how we got into this situation, and since the RMS was running fine on one node we didn't make it a top priority, but we did want to get back to a fully clustered RMS. So we came up with the following steps to get the RMS cluster back into working order (assuming \\NODE2 is the malfunctioning node):

1. Take a current backup of the OpsDB

2. Run /RemoveRMSNode to remove \\NODE2 from the RMS cluster

3. Confirm the one-node RMS on \\NODE1 is healthy

4. Remove all traces of OpsMgr from the now-removed node (\\NODE2)

5. Reboot \\NODE2

6. Reinstall OpsMgr 2007 R2 with the latest CU on \\NODE2, just as it should be prior to adding the node into an RMS cluster

7. Reboot \\NODE2

8. Run /AddRMSNode to add \\NODE2 back into the RMS cluster

9. Fail over the RMS role from \\NODE1 to \\NODE2

10. Ensure the clustered role functions as expected

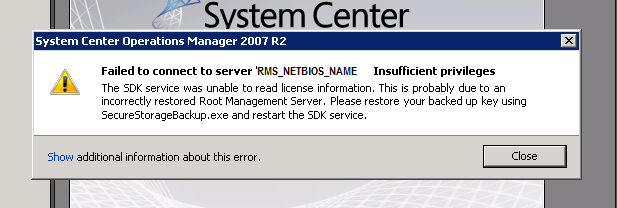

We reviewed this as a team, and once we agreed on the approach we set about doing the work. All was going smoothly until we got to step 10. After failing over to \\NODE2, we attempted to connect the Operations Console to the clustered RMS and got a dialog with the following message:

Heading: Failed to connect to server <RMS_NETBIOS_NAME>. Insufficient privileges

Detail: The SDK service was unable to read license information. This is probably due to an incorrectly restored Root Management Server. Please restore your backed up key using SecureStorageBackup.exe and restart the SDK service.

Now what?

OK, no biggie. We must've just grabbed the wrong key when we did the restore, right? Well, we found the key and tried restoring it, but to no avail. Then we made a big mistake and restored that same key to \\NODE1 (the node that had been working all along). We then failed back over to \\NODE1 and, sure enough, the console was broken there too. UGH! At this point we started to worry that we were going to have to rebuild the MG. Fortunately for us, we came across the blog post by J.C. Hornbeck on the SMS&MOM blog, which enlightened us to a better way that has in fact existed since SP1! So we copied MOM.msi from our installation media over to \\NODE1, opened an elevated (administrator) command prompt, and ran the following:

msiexec.exe /i D:\temp\MOM.msi CREATE_NEWKEY=1

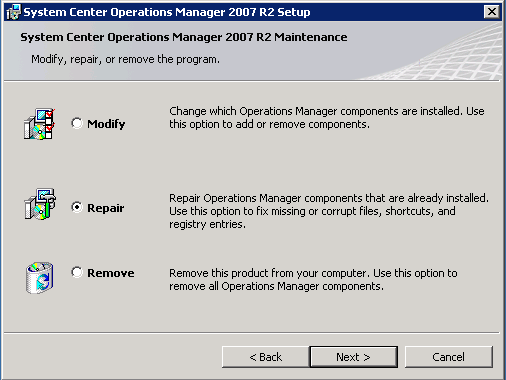

This is where we encountered the first topic not covered in the available documentation. When we ran the MSI, we were presented with a dialog asking whether we wanted to "Modify", "Repair" or "Remove". We ultimately picked "Repair", and the rest of the MSI ran as expected, but it would have been much more reassuring to see that choice called out explicitly in the KB article.
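If you would rather drive the repair from a script, here is a minimal Python sketch that assembles the same msiexec command line we typed by hand. The helper name `build_create_newkey_command` and the optional verbose installer log (`/l*v`) are our own illustrative additions, not something from the KB article or the SMS&MOM post. As written it only prints the command; run for real from an elevated prompt, it should presumably still bring up the same Modify/Repair/Remove dialog.

```python
import subprocess
from typing import List, Optional


def build_create_newkey_command(msi_path: str, log_path: Optional[str] = None) -> List[str]:
    """Build the msiexec argument list that repairs an RMS with a new encryption key.

    msi_path is wherever you copied MOM.msi on the RMS node.
    """
    cmd = ["msiexec.exe", "/i", msi_path, "CREATE_NEWKEY=1"]
    if log_path is not None:
        # Optional (our addition): /l*v writes a verbose Windows Installer log,
        # which is handy if the repair does not behave as expected.
        cmd += ["/l*v", log_path]
    return cmd


if __name__ == "__main__":
    cmd = build_create_newkey_command(r"D:\temp\MOM.msi")
    # Dry run: print the command line instead of executing it. On the RMS
    # node you could hand cmd to subprocess.run(cmd) from an elevated prompt.
    print(subprocess.list2cmdline(cmd))
```

With the path we used, the printed line is identical to the command shown earlier.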

This is where we encountered the second topic not covered in the available documentation. Once the installer completed, we flipped over to the service control manager to make sure the RMS services were all running, and found that MOM.msi had set our clustered services on the local node back to the "Automatic" start mode. We switched them back to "Manual" so that the cluster could manage their state as it sees fit. We then reopened the Operations Console and, with fingers crossed, watched it linger on the loading screen for what felt like an ominously long pause, but then it loaded without issue. We were all relieved, but carried on with our post-migration verifications.
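Flipping the start mode back to Manual can also be scripted. The sketch below builds one `sc.exe config <service> start= demand` command per service ("demand" is what sc.exe calls the Manual start type, and it expects a space after `start=`). The service names HealthService, OMSDK and OMCFG are an assumption on our part for an OpsMgr 2007 R2 RMS, so check them against the services on your own nodes first. Like the previous sketch, it only prints the commands.

```python
import subprocess
from typing import Iterable, List

# Assumed service names for an OpsMgr 2007 R2 RMS; verify on your own nodes.
RMS_SERVICES = ("HealthService", "OMSDK", "OMCFG")


def manual_start_commands(services: Iterable[str] = RMS_SERVICES) -> List[List[str]]:
    """Return one sc.exe command per service that sets its start type to Manual.

    "start=" and "demand" are separate tokens, which is how sc.exe expects
    the typed form "start= demand" (note the space after the equals sign).
    """
    return [["sc.exe", "config", name, "start=", "demand"] for name in services]


if __name__ == "__main__":
    for cmd in manual_start_commands():
        # Dry run: print each command rather than executing it.
        print(subprocess.list2cmdline(cmd))
```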

This is where we encountered the third and final topic not covered in the available documentation. Skipping over the details of how we came to be looking in the "Operations Manager Administrators" user role, we found its membership had been reset down to just one member, which in turn was breaking our ticketing connector. We restored the members of that user role and then everything appeared to be functioning well on the one node. We backed up the encryption key, restored it over to the other node, failed over the RMS and confirmed everything was in working order. PHEW!
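The key transfer at the end boils down to two SecureStorageBackup.exe runs, in a fixed order: Backup on the node where the console works, then Restore on the other node. Here is a minimal sketch that lays out that order, assuming the `SecureStorageBackup.exe Backup|Restore <file>` syntax (the tool prompts for a password interactively). The helper name `key_transfer_plan` and the share path are hypothetical, and again nothing is executed.

```python
import subprocess
from typing import List, Tuple


def key_transfer_plan(backup_file: str, source_node: str = "NODE1",
                      target_node: str = "NODE2") -> List[Tuple[str, List[str]]]:
    """Return (node, command) pairs for moving the RMS encryption key.

    Assumes SecureStorageBackup.exe is run from the OpsMgr install directory
    on each node and that backup_file is reachable from both nodes.
    """
    return [
        # 1. Back up the key on the node that is currently working.
        (source_node, ["SecureStorageBackup.exe", "Backup", backup_file]),
        # 2. Restore that same key on the other cluster node.
        (target_node, ["SecureStorageBackup.exe", "Restore", backup_file]),
    ]


if __name__ == "__main__":
    # Hypothetical share path; substitute your own location.
    for node, cmd in key_transfer_plan(r"\\fileserver\backup\rms_key.bin"):
        print(f"on {node}: {subprocess.list2cmdline(cmd)}")
```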

In summary and for the tl;dr crowd

- Next time you find yourself without an encryption key, repair the RMS by passing CREATE_NEWKEY=1 to MOM.msi

- I don't speak on behalf of the OpsMgr PG or CSS when I say this, but for us, this worked on a clustered RMS: we ran it on node 1, set the services on node 1 back to Manual, and then backed up the encryption key and restored it to node 2

- If you do this and it works, take a close look at your management group's "Operations Manager Administrators" user role and make sure its membership is populated as you would expect