Are big data and the hybrid cloud mutually exclusive?

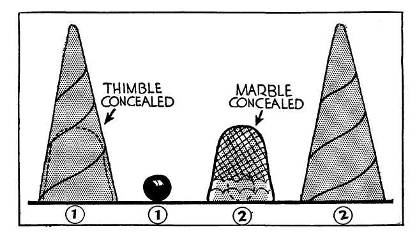

Technology marketing can resemble a shell game in which customers are left to guess what's going on until a vendor releases products or services that can be acquired and used. Even then, customers face a lot of guesswork, because product directions and roadmaps are often opaque. There are many good business reasons why technology vendors are inclined not to disclose what they are working on, including the fact that plans sometimes don't work as expected. Customers and vendors alike are better off with solutions that are available and work than with the next big thing that does neither.

But nobody in technology ever wants to be uninformed, especially where the major trends are concerned. Vendors feel the need to be trend-setters and to dictate what the trends are. That's how we define leadership in our industry: the ability to envision technology's future, articulate that vision and then execute it with products and services. Sometimes the magic works and sometimes it doesn't.

So there is a schism between practical business based on things that work and future business based on an as-yet-unfulfilled vision. Sometimes separate technology threads are so broad that nothing can keep them from colliding. That is the case today with cloud technology - specifically hybrid cloud - and big data. Hybrid cloud is all about balancing resource costs, computing capabilities and management between on-premises data centers and cloud data centers. Everything we know about running data centers is part of the vision for hybrid cloud, and there will likely be unexpected efficiencies to gain and problems to overcome as this technology unfolds.

Big data is all about making better use of information, particularly the relationships between different data. One of the confusing elements of big data is that it has two fundamentally different branches. In the first, the relationships between data are unknown and unpredictable and are discovered through a process akin to refining information. In the second, the relationships between data are known or predictable and can be programmed specifically to achieve an instantaneous result. The unpredictable/unknown/refining branch is part of the discussion surrounding Hadoop and MapReduce algorithms. The predictable/programmable/instantaneous branch is part of the discussion surrounding in-memory database (IMDB) technology.

If you look at the world like I do - from a storage perspective - this is all a bit puzzling. Hybrid cloud computing requires a way to make data portable between the on-premises data center and the cloud. Today, data portability mostly means moving virtual machine disk files (VMDKs or VHDs) between earth and sky. Depending on the size of the data sets involved and the bandwidth available, this could take anywhere from minutes to days. There will undoubtedly be some very interesting technologies developed in the next several years that narrow the time gap between on-premises and cloud production services. The size of the data sets in big data makes it, in some ways, the worst-case scenario for hybrid cloud: there is a lot of data in one place that has to be moved through a cloud connection before it can be processed in another.
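The size-versus-bandwidth point can be made concrete with back-of-the-envelope arithmetic: transfer time is roughly data size divided by usable bandwidth. The sketch below is illustrative only, assuming decimal terabytes, a steady link and a hypothetical 80% efficiency factor for protocol overhead and contention; the function name `transfer_time_hours` and the sample sizes and link speeds are made up for the example, not measurements.

```python
def transfer_time_hours(data_size_tb: float, link_gbps: float,
                        efficiency: float = 0.8) -> float:
    """Rough hours needed to move data_size_tb terabytes over a link_gbps link.

    efficiency is an assumed fraction of line rate actually achieved
    (protocol overhead, contention); 0.8 is illustrative, not measured.
    """
    if data_size_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("need size >= 0, link speed > 0, 0 < efficiency <= 1")
    bits = data_size_tb * 1e12 * 8             # decimal terabytes -> bits
    usable_bps = link_gbps * 1e9 * efficiency  # effective bits per second
    return bits / usable_bps / 3600            # seconds -> hours


if __name__ == "__main__":
    # Compare a VM-disk-sized data set (0.1 TB) with big-data-sized ones
    # on two hypothetical link speeds.
    for size_tb in (0.1, 10, 100):
        for gbps in (1, 10):
            hours = transfer_time_hours(size_tb, gbps)
            print(f"{size_tb:>6} TB over {gbps:>2} Gbps: "
                  f"{hours:8.1f} h ({hours / 24:5.1f} days)")
```

Under these assumptions a 100 GB virtual disk crosses a 1 Gbps link in well under an hour, while 100 TB takes roughly 278 hours, about 11.6 days. Real links, compression and deduplication will shift the numbers, but the linear relationship is what makes big data the worst case.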

The real-time needs of the predictable/programmable/instantaneous type of big data make it seem very unlikely that hybrid cloud data portability will ever be practical for it. If you need real-time access to data, can you afford to wait hours, days or even weeks for a full data upload? In contrast, the unpredictable/unknown/refining type of big data can very likely be served by hybrid cloud data portability. After all, if you are going to be refining data over the course of days, weeks or even months, then data synchronization latencies are mostly insignificant.

Then there is the whole problem of disaster protection and backup for big data. If the business becomes dependent on big data processes, especially the predictable/programmable/instantaneous type, there has to be a way of making them work again when Humpty Dumpty has a great fall. This is where the brute-force analysis of hybrid data portability falls apart: there will obviously be delta-based replication technology of some sort that incrementally updates data in a cloud recovery repository, so that after the initial copy only the changes, not the full data sets, have to cross the cloud connection. Customers are clearly looking for this sort of hybrid cloud data protection for their data, and they will want it for big data too.
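To illustrate why delta-based replication changes the math, here is a minimal sketch of one common approach, block-level change detection, assuming fixed-size blocks hashed with SHA-256. The block size and the function names (`block_hashes`, `changed_blocks`, `apply_delta`) are hypothetical; actual replication products may use entirely different change-tracking mechanisms.

```python
from __future__ import annotations

import hashlib

BLOCK_SIZE = 4 * 1024 * 1024  # hypothetical 4 MiB block size


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """SHA-256 digest of each fixed-size block of data (the 'manifest')."""
    return [hashlib.sha256(data[i:i + block_size]).hexdigest()
            for i in range(0, len(data), block_size)]


def changed_blocks(old_hashes: list[str], new_data: bytes,
                   block_size: int = BLOCK_SIZE) -> tuple[dict[int, bytes], list[str]]:
    """Return ({block_index: bytes} for blocks that differ, new manifest)."""
    new_hashes = block_hashes(new_data, block_size)
    delta = {}
    for idx, digest in enumerate(new_hashes):
        # New blocks past the old end, or blocks whose hash changed, are sent.
        if idx >= len(old_hashes) or old_hashes[idx] != digest:
            start = idx * block_size
            delta[idx] = new_data[start:start + block_size]
    return delta, new_hashes


def apply_delta(replica: bytearray, delta: dict[int, bytes], new_length: int,
                block_size: int = BLOCK_SIZE) -> bytearray:
    """Patch the cloud-side replica with changed blocks, then trim to new length."""
    for idx in sorted(delta):
        start = idx * block_size
        chunk = delta[idx]
        replica[start:start + len(chunk)] = chunk
    del replica[new_length:]  # handles data sets that shrank
    return replica


if __name__ == "__main__":
    bs = 4  # tiny block size so the example is easy to follow
    primary = bytearray(b"AAAABBBBCCCCDD")
    manifest = block_hashes(bytes(primary), bs)
    replica = bytearray(primary)  # initial full copy to the cloud
    primary[5] = ord("x")         # modify one byte in block 1
    primary += b"EE"              # append data (extends block 3)
    delta, manifest = changed_blocks(manifest, bytes(primary), bs)
    apply_delta(replica, delta, len(primary), bs)
    print(sorted(delta), replica == primary)  # prints: [1, 3] True
```

Only blocks 1 and 3 are shipped, not the whole data set. One caveat of this design: rehashing everything each cycle means rereading the full data set locally, which is itself expensive at big data scale, so tracking changes at write time is an alternative worth considering.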

We have a scenario today that is ripe for hype, and technology vendors are staking out positions they hope will influence the market not only to buy products but also to design architectures that will dictate future purchases for many years. There is a great deal at stake for vendors and customers alike.