VMware or Microsoft–Replaceable? or Extensible? What kind of virtual switch do you want?

There has been a fair amount of press in the last few years regarding the virtual networking capabilities on different hypervisor platforms. With the general availability release of Windows Server 2012 R2 and Windows Hyper-V Server 2012 R2 taking place in just over 5 weeks (October 18th to be exact), I think it is time to re-focus on what we have to offer with our networking capabilities compared to VMware offerings. Let’s briefly look at the VMware offerings for virtual switching.

vSphere Standard Switch – This is your basic virtual switch, somewhat comparable to the Hyper-V Virtual Switch in Windows Server 2012 (so long as no extensions have been added to the Hyper-V Virtual Switch).

vSphere Distributed Switch – Provides a centralized interface for managing virtual networking across multiple hosts. In a large datacenter, this simplifies the management of virtual networking across numerous VMware hosts. But it is only available in vSphere Enterprise Plus.

3rd party switch “extensions” – VMware supports the Cisco Nexus 1000V and the IBM 5000V virtual switches, but they also require vSphere Enterprise Plus. Additionally, these are not extensions but really replacements for the vSphere Virtual Switch. More telling is that after all this time in market, there are only two offerings for VMware.

Eric Siebert’s article from 2011 - Why’s the Nexus 1000V the only third-party distributed virtual switch? – points out “It's been almost three years since the release of the Cisco Nexus 1000V, and it is still the only third-party virtual switch for vSphere.” Of course that was over 2.5 years ago, but even now, after almost 6 years, there are only those two options.

The Hyper-V (truly!) Extensible Switch is available in all iterations of the 2012 editions of Hyper-V. It also supports the Cisco Nexus 1000V as well as 3 additional switch extension offerings including NEC’s OpenFlow extension, 5Nine’s firewall extension, and InMon’s sFlow monitoring extension. All within 1 year of GA of Windows Server 2012/Hyper-V Server 2012. Thank you partners! We also have a very well documented set of APIs that allow you to develop your own extensions to add your own capabilities (see the MSDN article links near the end of this post).

But enough of that. Given that the Hyper-V Extensible Switch is relatively new, let’s look at some of the things it offers.

==========================

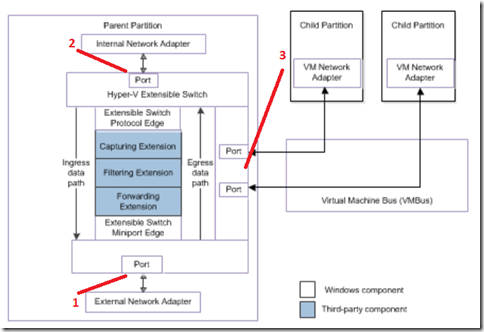

In the Hyper-V we shipped with Windows Server 2012 and Windows Hyper-V Server 2012 we use an extensible switch architecture that allows you to install 3rd party extensions or even write your own. There are 3 different types of switch extensions we enable in this fashion -

Capturing Extensions – These extensions capture and monitor packets. They cannot drop packets (see filtering extensions), but they can originate packets that carry network monitoring data, such as statistics that may be used in an application.

There can be multiple Capturing Extensions loaded and even ordered as needed

Filtering Extensions – Can also capture and monitor packets, but they can also drop or deny packets based on port and switch policies defined by administrators. Filtering extensions can also originate, duplicate, or clone packets and inject them into the extensible switch data path. For instance, a filtering extension could be used for packet shaping and QoS functions.

There can be multiple Filtering Extensions loaded and even ordered as needed

Forwarding Extensions - These extensions have the same capabilities as filtering extensions, but are responsible for performing the core packet forwarding and filtering tasks of extensible switches, including setting/excluding the destination port for a packet and enforcing standard port policies, such as security, profile, or virtual LAN (VLAN) policies.

There can be only one (Highlander syndrome!) Forwarding Extension bound to and enabled on an instance of a virtual switch
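
The binding rules above can be sketched in a few lines of Python. This is only a toy model to make the rules concrete, not the Hyper-V API: the `ExtensibleSwitch` class, its `bind` method, and the extension names are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class ExtensionType(Enum):
    CAPTURING = "capturing"
    FILTERING = "filtering"
    FORWARDING = "forwarding"


@dataclass
class Extension:
    name: str              # hypothetical names, for illustration only
    kind: ExtensionType


@dataclass
class ExtensibleSwitch:
    """Toy model of the binding rules; not the real Hyper-V object model."""
    extensions: list = field(default_factory=list)

    def bind(self, ext: Extension) -> None:
        # Only one forwarding extension may be bound and enabled per switch.
        if ext.kind is ExtensionType.FORWARDING and any(
            e.kind is ExtensionType.FORWARDING for e in self.extensions
        ):
            raise ValueError("a forwarding extension is already bound")
        # Capturing and filtering extensions may be loaded in any number.
        self.extensions.append(ext)

    def of_kind(self, kind: ExtensionType) -> list:
        # Names of bound extensions of one type, in binding order.
        return [e.name for e in self.extensions if e.kind is kind]


switch = ExtensibleSwitch()
switch.bind(Extension("monitor-a", ExtensionType.CAPTURING))
switch.bind(Extension("monitor-b", ExtensionType.CAPTURING))
switch.bind(Extension("firewall", ExtensionType.FILTERING))
switch.bind(Extension("forwarder", ExtensionType.FORWARDING))

try:
    switch.bind(Extension("second-forwarder", ExtensionType.FORWARDING))
    second_forwarder_bound = True
except ValueError:
    second_forwarder_bound = False
```

Here the two capturing extensions and the filtering extension bind without complaint, while the attempt to bind a second forwarding extension is rejected.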

To understand a little better what happens with traffic that flows through a virtual switch with extensions, have a look at the diagram below -

Each Hyper-V Extensible Switch has a variety of ports attached to it -

1 – We can only have one instance of a virtual switch bound to a physical NIC without using a “bonding driver”

2 – The Parent Partition (either Windows Server or Windows Hyper-V Server) is bound to a port

3 – Each child partition (virtual machine) will have one or more virtual NICs each bound to a port on the virtual switch

All packet traffic that arrives at the extensible switch from its ports follows the same path through the extensible switch driver stack. For example, packet traffic received from the external network adapter connection or sent from a virtual machine (VM) network adapter connection moves through the same data path.

Packets arrive at the extensible switch from network adapters that are connected to the switch ports.

The protocol edge of the extensible switch prepares the packets for the ingress data path.

Extensions can then obtain a packet from the ingress data path and perform monitoring, inspection and routing functions based on policy.

When the packet arrives at the underlying miniport edge of the extensible switch, the miniport edge applies the built-in extensible switch policies to the packet. These policies include access control lists (ACLs) and quality of service (QoS) properties.

If the packet is not dropped because of these policies, the miniport edge originates a receive indication for the packet that is forwarded up the extensible switch data path. This is known as the extensible switch egress data path.

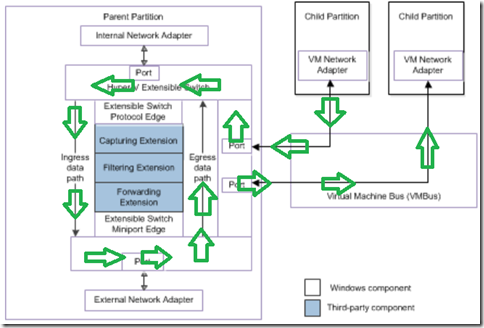

In our example, a packet is traveling this path from a guest virtual machine to another guest virtual machine connected to the same switch.

Note that a packet will always travel both the ingress and egress paths. In our example above, we could have an Outbound ACL applied to the packet by the filtering extension and an Inbound ACL applied on the way back through the stack. Also, we can have multiple Capturing and Filtering Extensions loaded at the same time and they can be re-ordered based on the order in which you want the extensions to look at and impact the packets.
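
To make the two-pass flow concrete, here is a minimal Python simulation of a packet going down the ingress path and back up the egress path. It is a sketch under assumptions, not real extension code (real extensions are NDIS filter drivers): the function names, the port names, and the stack order of capturing above filtering above forwarding are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Packet:
    src_port: str            # switch port the packet arrived on
    dst_port: str = ""       # chosen by the forwarding step
    trace: list = field(default_factory=list)


def capture(pkt, direction):
    # Capturing extensions observe the packet but never drop it.
    pkt.trace.append(f"{direction}:capture")
    return True


def make_filter(blocked_out, blocked_in):
    # Filtering step: outbound rule checked on ingress, inbound rule on egress.
    def filter_ext(pkt, direction):
        pkt.trace.append(f"{direction}:filter")
        if direction == "ingress":
            return pkt.src_port not in blocked_out
        return pkt.dst_port not in blocked_in
    return filter_ext


def make_forwarder(table):
    # Forwarding step: picks the destination port on the ingress path.
    def forward_ext(pkt, direction):
        pkt.trace.append(f"{direction}:forward")
        if direction == "ingress":
            pkt.dst_port = table[pkt.src_port]
        return True
    return forward_ext


def traverse(pkt, stack):
    """Run a packet down the ingress path and back up the egress path.

    Returns True if delivered, False if any step dropped it.
    """
    for ext in stack:                      # ingress: top of stack to bottom
        if not ext(pkt, "ingress"):
            return False
    pkt.trace.append("miniport:built-in policies")  # ACLs, QoS (not modeled)
    for ext in reversed(stack):            # egress: bottom of stack to top
        if not ext(pkt, "egress"):
            return False
    return True


# Hypothetical ports: vm1 sends to vm2, vm4 sends to vm3, all on one switch.
# Assumed stack order: capturing, then filtering, then forwarding.
stack = [
    capture,
    make_filter(blocked_out=set(), blocked_in={"vm3"}),
    make_forwarder({"vm1": "vm2", "vm4": "vm3"}),
]

ok = Packet(src_port="vm1")
delivered = traverse(ok, stack)

blocked = Packet(src_port="vm4")
blocked_delivered = traverse(blocked, stack)
```

The first packet visits every extension twice, once in each direction, and is delivered to `vm2`. The second packet passes the ingress path but is dropped by the inbound rule of the filtering step on the way back up, which is the Outbound/Inbound ACL split described above.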

Beyond the extensions themselves, we are adding some new enhancements to the Hyper-V Extensible Switch in the R2 timeframe.

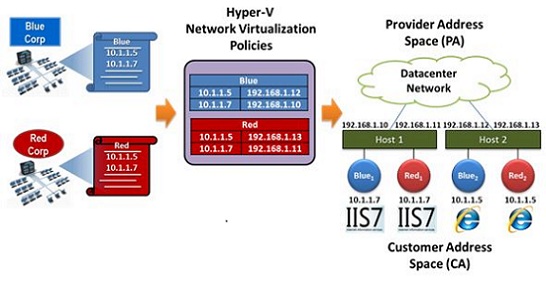

Hyper-V Network Virtualization - an encapsulation technology that enables datacenters to use a flat network fabric that can be configured to have multiple isolated tenant virtual networks on the same physical network. This allows service providers to reconfigure their network infrastructure without reconfiguring physical equipment or cables.

Network virtualization extends the concept of server virtualization to apply to entire networks. With network virtualization, each physical network is converted to a virtual network, which can now run on top of a common physical network. Each virtual network has the illusion that it is running on a dedicated network, even though all resources—IP addresses, switching, and routing—are actually shared.

HNV will let you keep your current IP Address scheme while migrating entire virtual networks in your organization or even to a multi-tenant cloud - even if VMs use the exact same IP addresses!

This is accomplished by giving each VM two IP addresses. The first, called the Customer Address (CA), is visible in the VM and is relevant in the context of a given tenant’s virtual subnet. The second, called the Provider Address (PA), is relevant in the context of the physical network in the cloud datacenter.

Imagine Red VM having IP address 10.1.1.7 and Blue VM having 10.1.1.7 as shown above. In this example the 10.1.1.7 IP addresses are CA IP addresses. By assigning these VMs different PA IP addresses (e.g. Blue PA = 192.168.1.10 and Red PA = 192.168.1.11) there is no routing ambiguity. Via policy we restrict the Red VMs to interact only with other Red VMs and similarly Blue VMs are isolated to the Blue virtual network. The Red VM and the Blue VM, each having a CA of 10.1.1.7, can safely coexist on the same Hyper-V virtual switch and in the same cloud datacenter.
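
The Red/Blue example boils down to a lookup keyed on the virtual network as well as the CA. The sketch below uses the addresses from the example; the table layout and the function names are hypothetical and are not how HNV policy is actually stored or configured.

```python
# Lookup policy keyed by (virtual network, Customer Address).
# The addresses mirror the Red/Blue example in the text; the table layout
# and function names are hypothetical.
POLICY = {
    ("Red", "10.1.1.7"): "192.168.1.11",
    ("Blue", "10.1.1.7"): "192.168.1.10",
}


def provider_address(network: str, customer_address: str) -> str:
    """Resolve a tenant's Customer Address (CA) to its Provider Address (PA)."""
    return POLICY[(network, customer_address)]


def can_communicate(src_network: str, dst_network: str) -> bool:
    """Isolation rule: VMs only talk to VMs in the same virtual network."""
    return src_network == dst_network


red_pa = provider_address("Red", "10.1.1.7")
blue_pa = provider_address("Blue", "10.1.1.7")
```

Because the key includes the virtual network, the identical CA resolves to two different PAs, so there is nothing ambiguous for the physical network to route.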

There are several other enhanced networking features that are a part of Hyper-V -

Single Root I/O Virtualization (SR-IOV) -

Enables multiple virtual machines (VMs) to share the same PCIe physical hardware resources.

Reduces I/O overhead in the software emulation layer, achieving network performance that is nearly the same as in nonvirtualized environments.

Virtual Machine Queue (VMQ) -

Improves network throughput by distributing processing of network traffic for multiple virtual machines (VMs) among multiple processors.

Reduces CPU utilization by offloading receive packet filtering to network adapter hardware.

Prevents network data copy by using DMA to transfer data directly to VM memory.

Supports live migration. For more information about live migration, see NDIS VMQ Live Migration Support.

==========================

We are only 5 weeks away from general availability of Windows Server 2012 R2 and Windows Hyper-V Server 2012 R2! Now is a great time to start testing the Preview Release in a lab environment!

Additional Resources -

TechNet – Hyper-V Virtual Switch Technical Overview

MSDN – Overview of Hyper-V Extensible Switch

MSDN – Hyper-V Extensible Switch Components

MSDN - Write your own Hyper-V Extensible Switch Extension

Blog - Hyper-V Extensible Switch Enhancements in Windows Server 2012 R2

-Cheers!