SCVMM & Failover Cluster Host Fails with Error 10429 – Older version of VMM Server installed

Recently, I had the unfortunate task of moving the System Center Virtual Machine Manager (VMM) database off a SQL Server cluster and onto a stand-alone SQL Server 2008 SP1 instance. This sounds extremely simple, but unfortunately it isn’t quite as simple as it could be. In this blog post, I want to share my experience in case anyone runs into a similar issue. I’m happy to report, though, that the steps below cleared the issue and all systems are working as desired.

Setup

- 7-node Failover Cluster using Windows Server 2008 R2

- 2 Cluster Shared Volumes (CSVs) attached to the cluster

- SCVMM 2008 R2

How to Migrate the VMM Database

After taking a backup, the first step is to detach (take offline) the Virtual Machine Manager database, VirtualManager. This is easily accomplished using SQL Server Management Studio and is outlined in the following MSDN article. Once this is done, copy the database’s MDF and LDF files to a shared location that your target server can access, copy them from there to your new database server, and import (attach) the database.

To recap, the steps are:

- Back up your VMM database

- Detach the database

- Copy the MDF and LDF files to a “shared” location

- Import (attach) the database and log on the new server
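For anyone who prefers a query window to the Management Studio dialogs, the recap above maps onto standard T-SQL: `BACKUP DATABASE` and `sp_detach_db` on the old server, then `CREATE DATABASE ... FOR ATTACH` on the new one. The Python sketch below only builds those statements as strings so they can be reviewed before running; it does not connect to SQL Server. The database name comes from this post, but the share and file paths are hypothetical placeholders, so check the real file names in the database properties first. It also assumes the VMM service has been stopped, because `sp_detach_db` fails while the database is in use.

```python
def quote_ident(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(text: str) -> str:
    """Build an N'...' Unicode string literal, doubling embedded single quotes."""
    return "N'" + text.replace("'", "''") + "'"


def migration_tsql(db_name: str, backup_file: str, mdf_file: str, ldf_file: str) -> dict:
    """Return the T-SQL for each server involved in the move (sketch only)."""
    db = quote_ident(db_name)
    return {
        # Run on the old (clustered) SQL instance with the VMM service stopped.
        "old_server": [
            f"BACKUP DATABASE {db} TO DISK = {quote_literal(backup_file)} WITH INIT;",
            f"EXEC sp_detach_db @dbname = {quote_literal(db_name)};",
        ],
        # Run on the new stand-alone instance after copying the MDF/LDF files.
        "new_server": [
            f"CREATE DATABASE {db} ON (FILENAME = {quote_literal(mdf_file)}), "
            f"(FILENAME = {quote_literal(ldf_file)}) FOR ATTACH;",
        ],
    }


# Hypothetical paths; substitute your own share and data directories.
steps = migration_tsql(
    "VirtualManager",
    r"\\fileserver\vmmshare\VirtualManager.bak",
    r"D:\SQLData\VirtualManager.mdf",
    r"D:\SQLData\VirtualManager_log.ldf",
)
for server, statements in steps.items():
    for statement in statements:
        print(f"-- {server}\n{statement}")
```

Generating text rather than executing it keeps the sketch dependency-free and leaves a human in the loop for a step that takes the VMM database offline.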

This is where it gets a bit “nastier” than it has to be, in my opinion. You are now required to uninstall SCVMM and select the “Retain Data” option. Yes, I said uninstall. Next, re-install VMM on the same server you just removed it from and, on the database selection screen, choose to use the existing database on the new SQL server. Do not select “Create New Database”, as that is for a new install.

This process, albeit much more complicated than I believe it needs to be, worked without any problems.

NOTE: There is a registry key that can “supposedly” be set instead of using this hammer. If that works for you, let me know; next time I will try it and see what happens.

Hosts Need Attention – Update Agent

This is where the fun began, for me at least. I re-installed the VMM server with no problem and then opened the VMM Administrator Console. Upon opening the console, I was immediately greeted by hosts that were unhappy with everything: they were all in a “Needs Attention” status. If you right-click a host, you will notice that you can now select Update Agent. For 6 out of the 7 hosts, this worked flawlessly with no extra effort. Then there was the 7th…

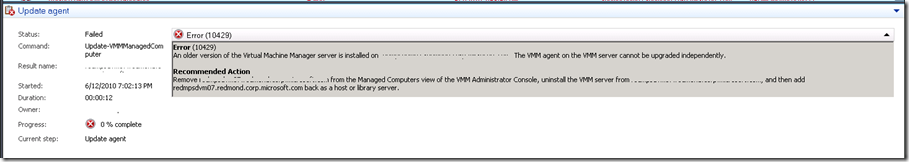

Error 10429: Older version of the VMM server is installed on {Server FQDN} | {IP}

On the seventh host, the Update Agent job failed every single time with error 10429, stating that the VMM server is an older version. This struck me as funny because it is the exact same build I had installed previously. Nonetheless, the server simply refused to cooperate and stayed “unmanageable”: the host remained in a non-responsive state.

The “Recommended Action” returned by the VMM job was unfortunately not helpful or “actionable.” Its suggestion, to remove the physical host and manually install the VMM agent, wasn’t possible for the following reasons:

- The “Remove Host” UI option and cmdlet were unavailable

- Attempting to install the VMM agent directly on the host returned an error stating that the agent couldn’t be installed manually

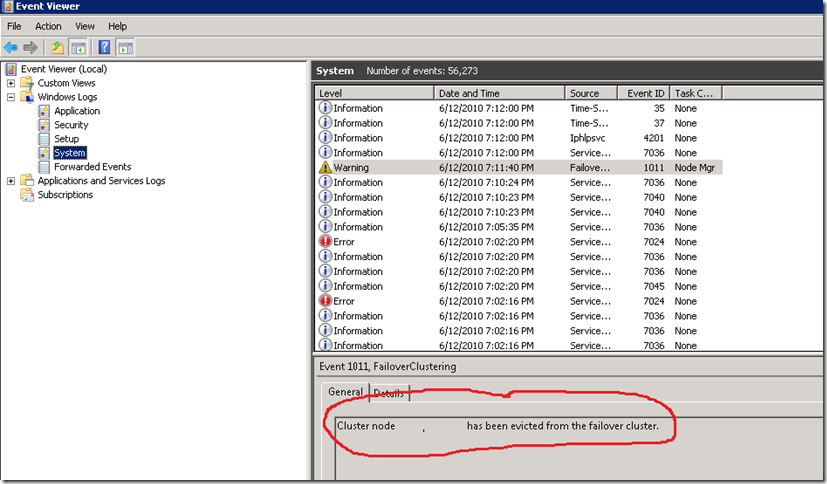

Wow… this is ugly. I can’t manage the server from VMM, yet in Failover Cluster Manager everything looks good and the guest VMs are running fine. Stretching the brain… In Event Viewer on the host, you see the following:

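| Level | Date and Time | Source | Event ID | Task Category |
|---|---|---|---|---|
| Error | 6/12/2010 7:02:20 PM | Service Control Manager | 7024 | None |
| Information | 6/12/2010 7:02:20 PM | Service Control Manager | 7036 | None |
| Information | 6/12/2010 7:02:20 PM | Service Control Manager | 7036 | None |
| Information | 6/12/2010 7:02:20 PM | Service Control Manager | 7045 | None |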
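Assuming these entries are in Event Viewer’s default newest-first order, the sequence reads bottom to top: a service is installed (7045), changes state twice (7036), and then terminates with a service-specific error (7024). Presumably all four entries concern the VMMAgentInstaller service named in the error, although only the 7024 text is quoted here. The small Python sketch below makes that order explicit by pairing the four rows with the standard Service Control Manager meaning of each event ID. The meanings are paraphrased from the usual SCM message text, and the newest-first ordering is an assumption.

```python
# Standard meanings of the Service Control Manager event IDs above
# (paraphrased from the SCM message text).
SCM_EVENT_MEANINGS = {
    7045: "a service was installed in the system",
    7036: "a service entered the running or stopped state",
    7024: "a service terminated with a service-specific error",
}

# The four rows from the host's System log: (level, timestamp, source, event_id).
# Assumed to be listed newest first, as Event Viewer sorts by default.
rows = [
    ("Error", "6/12/2010 7:02:20 PM", "Service Control Manager", 7024),
    ("Information", "6/12/2010 7:02:20 PM", "Service Control Manager", 7036),
    ("Information", "6/12/2010 7:02:20 PM", "Service Control Manager", 7036),
    ("Information", "6/12/2010 7:02:20 PM", "Service Control Manager", 7045),
]


def timeline(rows):
    """Return (event_id, level, meaning) tuples in chronological order."""
    return [
        (event_id, level, SCM_EVENT_MEANINGS.get(event_id, "unknown event"))
        for level, _timestamp, _source, event_id in reversed(rows)
    ]


for event_id, level, meaning in timeline(rows):
    print(f"{event_id} ({level}): {meaning}")
```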

The informational messages simply show the installer service being set up and started as VMM attempts to install the agent, while the error message description reads:

The VMMAgentInstaller service terminated with service-specific error %%-2146041839.
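The number in that message, -2146041839, is a 32-bit error code printed as a signed decimal. Windows error codes are normally written in hexadecimal HRESULT form, so converting it gives a more useful search term. The Python sketch below performs that conversion and splits out the severity, facility, and code fields using the standard HRESULT bit layout. I have not confirmed which component defines this particular facility and code, so treat the hex value as a starting point for searching rather than a diagnosis.

```python
def to_hresult(code: int) -> int:
    """Reinterpret a signed 32-bit error code as an unsigned HRESULT value."""
    return code & 0xFFFFFFFF


def hresult_fields(code: int) -> tuple:
    """Split an HRESULT into (severity, facility, code) per the standard bit layout."""
    value = to_hresult(code)
    severity = value >> 31              # bit 31: 1 means failure
    facility = (value >> 16) & 0x7FF    # bits 16-26: facility
    status = value & 0xFFFF             # bits 0-15: facility-specific code
    return severity, facility, status


error = -2146041839  # from the VMMAgentInstaller event above
print(f"HRESULT 0x{to_hresult(error):08X}")  # prints HRESULT 0x80160011
print(hresult_fields(error))                 # prints (1, 22, 17)
```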

Fix It: Let’s just get this thing back to working order and worry about the cause later…

Many of you will be just like I was at the moment this problem occurred. The services (the VMs) are online and happy, but the physical host isn’t in a manageable state. You don’t care why, nor do you want to spend a significant amount of time gathering VMM debug logs; you just want it working as it was before you started the database migration. Few things are more frustrating than troubleshooting an avoidable problem, such as one caused by moving a perfectly healthy database.

Let’s just fix this thing…

NOTE: Doing the following in production isn’t recommended without taking all the usual precautions, such as taking backups, and following the typical “change management” process your company has in place. I hope you don’t hold me responsible; I will plead the 5th and point out that this is the real world: you want it fixed. Period. All lawyers have *not* approved this message.

- On your workstation or the physical host, open Failover Cluster Manager

- Locate the guest VMs owned by the unmanageable host, right-click each one, select Live Migrate (or Quick Migrate), and select the target host

- Repeat the previous step until all guest VMs are off the server

- When all VMs are off the physical host, right-click the node in Failover Cluster Manager and select More Actions, Evict

- After the eviction, verify that all services in the cluster are running fine

- Open the VMM Administrator Console and locate the evicted host, which now appears as a non-clustered physical host (you might need to refresh)

- Right-click the host and select Remove Host (yes, this option is now available)

- In Failover Cluster Manager, right-click the cluster, select Add Node, and enter the name of the physical host that was evicted

- Run all of the validation tests and add the node back to the cluster

- In the VMM Administrator Console, refresh the cluster

- After the refresh completes, right-click and select the option to add the node to the cluster

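The first steps above, live migrating every guest off the broken node, are done by hand in Failover Cluster Manager, and on a busy host it can help to decide the targets up front. The Python sketch below is one way to do that, assuming a simple least-loaded rule: each guest goes to whichever remaining node currently owns the fewest VMs. The node and VM names are hypothetical, the rule ignores memory and CPU headroom, and the function only produces a plan; it does not migrate anything.

```python
def plan_evacuation(nodes, vm_owner, drain_node):
    """Plan (vm, target_node) moves that empty drain_node.

    nodes:      every cluster node name
    vm_owner:   mapping of VM name -> node currently owning it
    drain_node: the unmanageable node to be emptied and evicted
    """
    # Current VM count on every node that will remain in the cluster.
    load = {node: 0 for node in nodes if node != drain_node}
    for owner in vm_owner.values():
        if owner in load:
            load[owner] += 1

    moves = []
    for vm in sorted(vm for vm, owner in vm_owner.items() if owner == drain_node):
        # Least-loaded node wins; ties go to the alphabetically first name.
        target = min(load, key=lambda node: (load[node], node))
        load[target] += 1
        moves.append((vm, target))
    return moves


# Hypothetical 7-node cluster in which HV7 is the node that needs attention.
nodes = [f"HV{i}" for i in range(1, 8)]
vm_owner = {"vm-a": "HV7", "vm-b": "HV7", "vm-c": "HV7",
            "vm-d": "HV1", "vm-e": "HV1", "vm-f": "HV2"}

for vm, target in plan_evacuation(nodes, vm_owner, "HV7"):
    print(f"Live migrate {vm} -> {target}")
```

Because ties break alphabetically, the plan is deterministic, which makes it easy to record in a change ticket and to reverse when the guests move back.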

After these 11 steps, your server is manageable and ready. Go ahead and Live Migrate your guest VMs back to the server and verify that the services on those guests are working.

Summary

This isn’t the cleanest fix by any stretch of the imagination, and it is much more of a hammer than I would like. However, you are left with a server that is unmanageable even though all of its services are happy, and that isn’t satisfactory; the situation needs to be fixed. These steps led me back to the promised land and should do the same for you… just be patient. It is required. In this blog post, you learned how to migrate the VMM database to another server, watched one host get upset with that decision, and saw how you can prevail and still show that host who is in charge.

Enjoy!

Thanks,

-Chris