Hyper-V 2012 R2 Network Architectures Series (Part 6 of 7) – Converged Network using CNAs

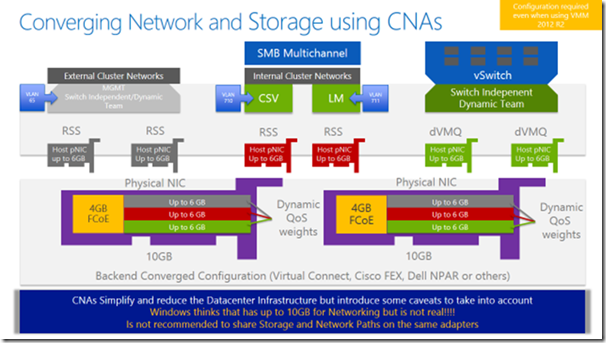

The last Hyper-V Network Architecture that I want to discuss is the one where the hardware combines the Ethernet network and the FC storage connection over Ethernet (FCoE) on Converged Network Adapters (CNAs). The main purpose of this type of adapter is to reduce hardware costs and save space in the datacenter. Let’s take a look at the diagram and see the key components of this solution:

Again, from bottom to top, there are two big purple uplinks representing the CNA adapters. As you can see, this time the same uplinks must be shared not only among the different Hyper-V networks’ traffic, but also with the storage IO. The configuration and partitioning of the uplinks is done by the backend software, and a common setup is to dedicate 4 Gbps of each uplink to storage. Windows will see the storage side of these two adapters as HBAs, and you will have to set up MPIO and the vendor DSM to provide HA.

As you can imagine, we have suddenly cut the bandwidth available for Ethernet to 6 Gbps on each uplink, and this is exactly where we need to start planning our Hyper-V Network Architecture carefully.
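The arithmetic is simple, but it is worth writing down because every later planning decision depends on it. The sketch below is a minimal model, assuming two 10 Gbps CNA uplinks with a 4 Gbps storage partition each, as in the diagram; the class and function names are hypothetical and only illustrate the calculation. It also reports the capacity left if one uplink fails, which is a conservative figure to plan against.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CnaUplink:
    """One physical CNA port as partitioned by the backend (hypothetical model)."""
    name: str
    link_gbps: float     # physical line rate of the port
    storage_gbps: float  # bandwidth reserved for storage (FCoE) IO

    def __post_init__(self) -> None:
        if not 0 <= self.storage_gbps <= self.link_gbps:
            raise ValueError("storage partition must fit inside the link")

    @property
    def ethernet_gbps(self) -> float:
        # Whatever is not reserved for storage is left for Ethernet traffic.
        return self.link_gbps - self.storage_gbps


def ethernet_capacity(uplinks: list[CnaUplink]) -> dict[str, float]:
    """Return aggregate Ethernet capacity and the capacity left if one uplink fails."""
    if not uplinks:
        return {"aggregate_gbps": 0.0, "one_uplink_failed_gbps": 0.0}
    per_uplink = [u.ethernet_gbps for u in uplinks]
    total = sum(per_uplink)
    # Worst case: the uplink with the most Ethernet bandwidth is the one that fails.
    worst_case = total - max(per_uplink)
    return {"aggregate_gbps": total, "one_uplink_failed_gbps": worst_case}


if __name__ == "__main__":
    # Assumed values from the diagram: 10 Gbps ports, 4 Gbps carved out for storage.
    uplinks = [
        CnaUplink("CNA-A", link_gbps=10.0, storage_gbps=4.0),
        CnaUplink("CNA-B", link_gbps=10.0, storage_gbps=4.0),
    ]
    for u in uplinks:
        print(f"{u.name}: {u.ethernet_gbps:g} Gbps left for Ethernet")
    print(ethernet_capacity(uplinks))
```

With these values each uplink keeps 6 Gbps for Ethernet, the pair offers 12 Gbps in aggregate, and only 6 Gbps remain while one uplink is down.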

- Windows will see the multiplexed Ethernet adapters as 10 Gbps cards. Of course this is not really true, because 4 of those 10 Gbps are already reserved exclusively for storage IO. This shouldn’t be a big issue if you plan accordingly, but I’ve seen customers complain about network throughput without taking this factor into account.

- You might want to reconsider the number of multiplexed Ethernet NICs presented to the Hyper-V host. There is less bandwidth available for Ethernet, and this may change your approach and the number of NICs you need.

- By definition, sending storage and network IO over the same path is not the best approach. The two kinds of traffic are not isolated, and this can lead to bottlenecks if we don’t have a good capacity plan and a performance baseline for our hardware. Personally, I don’t like to put all my eggs in one basket unless it is really necessary.
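To make the capacity-planning point concrete, the sketch below checks a planned split of one uplink’s Ethernet partition across host traffic classes. The traffic names and Gbps figures are hypothetical placeholders, not recommendations; substitute the numbers from your own performance baseline. The key detail is that the budget is the 6 Gbps Ethernet partition, not the 10 Gbps that Windows reports for each multiplexed NIC.

```python
# Assumed figures: Windows reports 10 Gbps, but 4 Gbps of the port is reserved for storage.
REPORTED_BY_WINDOWS_GBPS = 10.0
ETHERNET_BUDGET_GBPS = 6.0


def check_plan(plan: dict[str, float], budget_gbps: float) -> list[str]:
    """Return warnings for a planned bandwidth split; an empty list means the plan fits."""
    warnings = []
    for traffic, gbps in plan.items():
        if gbps <= 0:
            warnings.append(f"{traffic}: allocation must be positive, got {gbps}")
    total = sum(plan.values())
    if total > budget_gbps:
        warnings.append(
            f"plan needs {total:g} Gbps but only {budget_gbps:g} Gbps is left for Ethernet"
        )
    return warnings


if __name__ == "__main__":
    # Hypothetical traffic classes and allocations for one uplink.
    plan = {"Management": 0.5, "Cluster": 1.0, "Live Migration": 3.0, "VM traffic": 3.0}
    # Planning against the speed Windows reports hides the problem...
    print("vs reported speed:", check_plan(plan, REPORTED_BY_WINDOWS_GBPS) or "fits")
    # ...while planning against the real Ethernet partition exposes it.
    print("vs real partition:", check_plan(plan, ETHERNET_BUDGET_GBPS) or "fits")
```

The same 7.5 Gbps plan looks fine against the reported 10 Gbps but is oversubscribed against the 6 Gbps actually available, which is the kind of throughput surprise described in the first bullet.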

This is the last Hyper-V Network Architecture that I wanted to discuss, and in my next post I will do a quick summary of the pros and cons of each solution. You may have noticed that I never considered SR-IOV or RDMA NICs in any of the scenarios; the main reason was to keep the diagrams simple.

Ideally, RDMA NICs should be completely dedicated, and today the backend solution does not provide RDMA support unless you use a Non-Converged Network approach. This will probably change in the near future. Until then, RDMA NICs can be treated like FC HBAs, and that’s why I didn’t include them in any scenario.

Regarding SR-IOV NICs, some vendors expose SR-IOV Virtual Functions (VFs) on the multiplexed cards presented to Windows. In that case, you will have to evaluate whether you want to dedicate one or two of these multiplexed cards to this purpose. In the Non-Converged scenarios, on the other hand, you will probably have to buy some extra adapters to provide this functionality if your environment or workload requires it.
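Dedicating multiplexed cards to SR-IOV takes their share out of the bandwidth left for the other host networks. The sketch below illustrates that trade-off for a single uplink, assuming, purely for illustration, that the 6 Gbps Ethernet partition is split evenly across a hypothetical number of multiplexed NICs; vendors may allow uneven splits, so treat the even split as an assumption rather than how any particular product behaves.

```python
def remaining_after_sriov(
    ethernet_gbps: float, nics_per_uplink: int, sriov_nics: int
) -> tuple[float, float]:
    """Split an uplink's Ethernet partition evenly (an assumption) and set aside SR-IOV NICs.

    Returns (bandwidth dedicated to SR-IOV, bandwidth left for the other host networks).
    """
    if nics_per_uplink < 1:
        raise ValueError("nics_per_uplink must be at least 1")
    if not 0 <= sriov_nics <= nics_per_uplink:
        raise ValueError("sriov_nics must be between 0 and nics_per_uplink")
    per_nic = ethernet_gbps / nics_per_uplink
    dedicated = per_nic * sriov_nics
    return dedicated, ethernet_gbps - dedicated


if __name__ == "__main__":
    # Hypothetical layout: 6 Gbps Ethernet partition exposed as 3 multiplexed NICs per uplink.
    for sriov in (1, 2):
        dedicated, left = remaining_after_sriov(6.0, nics_per_uplink=3, sriov_nics=sriov)
        print(f"{sriov} SR-IOV NIC(s): {dedicated:g} Gbps dedicated, {left:g} Gbps for other host networks")
```

Under these assumptions, dedicating one card leaves 4 Gbps for everything else on that uplink, and dedicating two leaves only 2 Gbps.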