Hyper-V: Clustering & Resiliency. Step 1: Storage

Hello Folks,

This is the 2nd post in our Hyper-V 2012 R2 from the ground up series. Earlier this week we covered host configuration using SCVMM 2012 R2.

Today let’s look at Virtual Machine Clustering & Resiliency. As usual, I encourage you to download Windows Server 2012 R2 or Hyper-V Server 2012 R2, set up your own lab and try these scenarios for yourself. Again, at the risk of repeating myself, we have some wonderful content on Microsoft Virtual Academy that is sure to educate us all on the capabilities and strength of the Hyper-V platform.

- Windows Server 2012 Jump Start: Preparing for the Datacenter Evolution

- Introduction to Hyper-V Jump Start

- Windows Server 2012 R2 Virtualization

- Server Virtualization with Windows Server Hyper-V and System Center

That being said, let’s move on to Virtual Machine Clustering & Resiliency.

When we talk about high availability or failover clustering for Hyper-V, it’s important to realize that the platform scales massively, with support for 64 physical nodes and 8,000 VMs per cluster. But as we mentioned before, this series is from the ground up. So, let’s quickly review what a cluster is.

A failover cluster is a group of computers that work together to increase the availability and scalability of clustered roles. The clustered servers (called nodes) are connected by physical cables and by software. If one or more of the cluster nodes fail, other nodes begin to provide service (a process known as failover). The clustered roles are proactively monitored to verify that they are working properly. If they are not working, they are restarted or moved to another node. Failover clusters also provide Cluster Shared Volume (CSV) functionality that provides a consistent, distributed namespace that clustered roles can use to access shared storage from all nodes.

From a virtualization perspective, the Failover Cluster provides the VM with high availability. If a physical host were to fail, the virtual machines running on that host would also go down. This would be a disruptive shutdown, and would incur VM downtime. However, as that physical node was part of a cluster, the remaining cluster nodes would coordinate the restoration of those downed VMs, starting them up again, quickly, on other available nodes in the cluster. This is automatic, and without IT admin intervention.
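To make the failover behaviour described above concrete, here is a minimal toy model in Python. It is only a sketch: the node names (HV01 to HV03), the VM names, and the "least-loaded survivor" placement rule are assumptions made for illustration, not how the Failover Clustering service actually chooses a target node.

```python
from dataclasses import dataclass, field

MAX_NODES = 64     # per-cluster node limit quoted earlier in this post
MAX_VMS = 8000     # per-cluster VM limit quoted earlier in this post


@dataclass
class Node:
    name: str                                  # hypothetical host name
    vms: list = field(default_factory=list)    # VMs currently running here
    online: bool = True


def within_limits(nodes):
    """Check the toy cluster against the limits quoted in the post."""
    total_vms = sum(len(n.vms) for n in nodes)
    return len(nodes) <= MAX_NODES and total_vms <= MAX_VMS


def fail_node(nodes, failed_name):
    """Mark one node as failed and restart its VMs on surviving nodes."""
    failed = next(n for n in nodes if n.name == failed_name)
    failed.online = False
    survivors = [n for n in nodes if n.online]
    if not survivors:
        raise RuntimeError("no surviving nodes to restart VMs on")
    orphaned, failed.vms = failed.vms, []
    for vm in orphaned:
        # Assumed placement rule: pick the survivor running the fewest VMs.
        target = min(survivors, key=lambda n: len(n.vms))
        target.vms.append(vm)
    return {n.name: list(n.vms) for n in nodes}


cluster = [Node("HV01", ["VM1", "VM2"]), Node("HV02", ["VM3"]), Node("HV03")]
placement = fail_node(cluster, "HV01")
print(placement)
```

In this model the VMs from the failed host are restarted elsewhere without anyone stepping in, which is the point of the paragraph above. The VMs still go down briefly; the cluster only shortens the outage.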

With Windows Server 2012, and subsequently Windows Server 2012 R2, Failover Clustering supports placing VMs on an SMB 3.0 file share. This gives administrators considerably more flexibility when deploying their infrastructure, and also allows for a simpler deployment and management experience.

In this post we will prepare the storage that allows us to deploy a Hyper-V cluster. This is from the ground up, right? So we need to go at it one step at a time.

Enough chit chat!

Let’s prepare a Hyper-V cluster. We use System Center 2012 R2 Virtual Machine Manager to manage our file server and the SMB shares that can be used to store Hyper-V VMs.

Add our FS01 file server to SCVMM

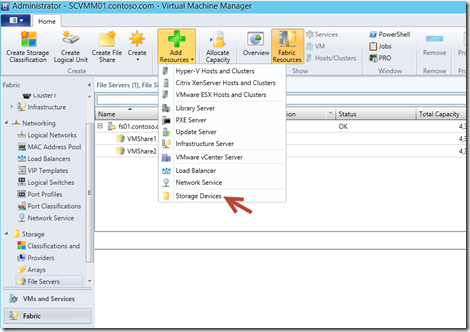

1. In the SCVMM console, select the Fabric workspace. On the navigation pane, expand Storage and click File Servers. On the ribbon, click Add Resources, and then click Storage Devices.

2. On the Select Provider Type page, select Windows-based file server, and then click Next.

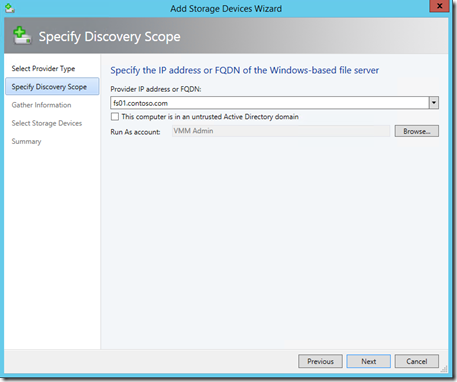

3. On the Specify Discovery Scope page, in the Provider IP address or FQDN field, type FS01.contoso.com, and leave the This computer is in an untrusted Active Directory domain box unchecked. Create and use a “Run As Account” with domain admin rights. In our case we created “VMM Admin” with the following properties:

- Name: VMM Admin

- User Name: Contoso\administrator

4. After returning to the Specify Discovery Scope page, click Next. SCVMM will automatically scan FS01 and return the results below. On the Gather Information page, review the results, and then click Next.

5. On the Select Storage Devices page ensure that FS01 is selected and click Next.

6. On the Summary page, click Finish.

Create the File shares to be used by the cluster

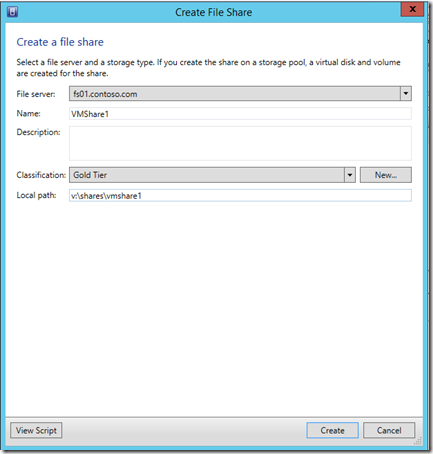

1. On the Fabric navigation pane, select Providers, select FS01.contoso.com, and on the ribbon, click Create File Share.

2. In the Name field, type VMShare1. Next to the Classification dropdown, click New; in the New Classification window, type Gold Tier in the Name field, and then click Add. Finally, in the Local path field, type V:\Shares\VMShare1 and click Create.

3. Repeat steps 1 and 2 with the following information:

- Name: VMShare2

- New Classification Name: Silver Tier

- Local Path: V:\Shares\VMShare2
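If you are planning more than a couple of shares, it can help to write the plan down as data before clicking through the wizard. The sketch below does that in Python for the two shares above. It does not talk to SCVMM at all; the `FileShare` class, the `check_shares` helper, and the UNC path format are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileShare:
    name: str             # share name typed in the Name field
    classification: str   # storage classification chosen in the wizard
    local_path: str       # local path on the file server

    def unc_path(self, server):
        # Assumption: the share is reached as \\<server>\<share name>.
        return f"\\\\{server}\\{self.name}"


SHARES = [
    FileShare("VMShare1", "Gold Tier", r"V:\Shares\VMShare1"),
    FileShare("VMShare2", "Silver Tier", r"V:\Shares\VMShare2"),
]


def check_shares(shares):
    """Catch simple planning mistakes before creating the shares."""
    names = [s.name for s in shares]
    if len(names) != len(set(names)):
        raise ValueError("duplicate share name in plan")
    for s in shares:
        # Convention used in this post: the folder is named after the share.
        if not s.local_path.endswith("\\" + s.name):
            raise ValueError(f"local path does not match share {s.name}")


check_shares(SHARES)
for share in SHARES:
    print(share.classification, share.unc_path("FS01.contoso.com"))
```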

Add a Storage Area Network to SCVMM for our CSV.

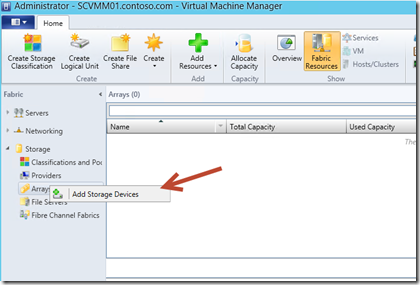

1. In the SCVMM Console, select the Fabric workspace, expand Storage, right-click Arrays and select Add Storage Devices.

2. On the Select Provider Type page, select SAN and NAS devices discovered and managed by a SMI-S provider, and then click Next.

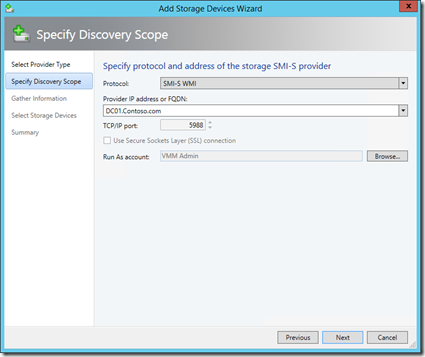

3. On the Specify Discovery Scope page, in the Protocol dropdown select SMI-S WMI, and in the Provider IP address or FQDN field, type DC01.contoso.com, use VMM Admin as the Run As Account and click Next.

4. Discovery against the target will now take place. On the Gather Information page, select DC01 when discovery has completed, and then click Next.

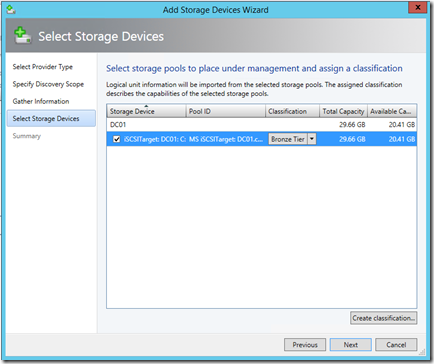

5. On the Select Storage Devices page, click Create classification. In the Name field, type Bronze Tier, and then click Add.

6. Back on the Select Storage Devices page, select the box next to iSCSITarget: DC01: C:, then select Bronze Tier from the Classification dropdown, and then click Next. On the Summary page, click Finish.

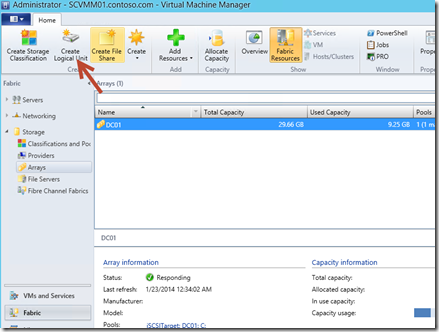

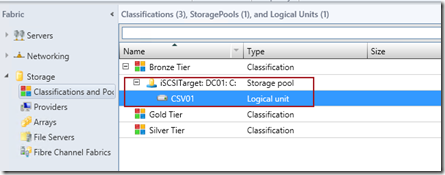

7. On the navigation pane, under Storage, select Arrays. In the detail pane, select DC01, and on the ribbon, click Create Logical Unit.

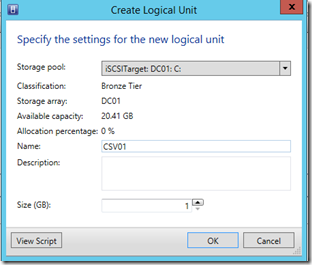

8. In the Create Logical Unit window, leave the default Storage Pool, in the Name field, type CSV01, leave the default 1GB size, and then click OK.

And that is it. The logical unit that will back our Cluster Shared Volume is now defined in SCVMM.

Next Tuesday, we will configure the NIC teams and the logical and virtual switches. We will then be one step closer to having a fully functional and highly available Hyper-V cluster.

In the meantime, keep learning.

Cheers!

Pierre Roman | Technology Evangelist
