HPC in the real world

A while ago I posted a series of entries about High Performance Computing (HPC). You can read them here (Part 1, Part 2, Part 3), but I also wanted to share a real-world example of how HPC is being used and how it affects the mining industry, as well as our lives.

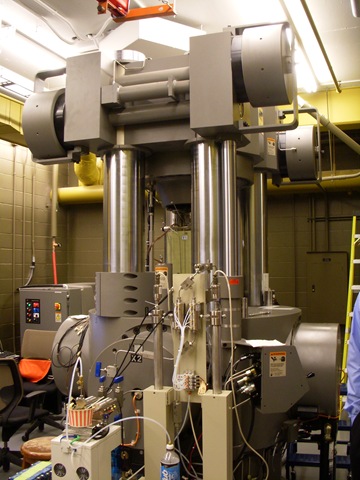

A few months ago I got the chance to visit with Paul Ruppert, director of strategic research systems for the Department of Civil Engineering at the University of Toronto, and to tour the university's Lassonde Institute to see how they are using HPC to make life safer. In 2007 the institute opened the Rock Fracture Dynamics Laboratory, the first of its kind in the world. The purpose of the lab is to crush rocks. Well, more accurately, they "perform stress tests on rock samples and then use the data collected to perform simulations and get real-time information on the effects of the different types of stress" that the "rock crusher" can inflict.

Once processed, this data is used to build models and simulations that improve mine safety. When a mine is dug, removing earth puts pressure on the surrounding earth, and too much pressure can cause the mine to collapse. By studying how much stress the materials surrounding the mine can withstand, and knowing their "breaking point," engineers can dig mines much more safely.

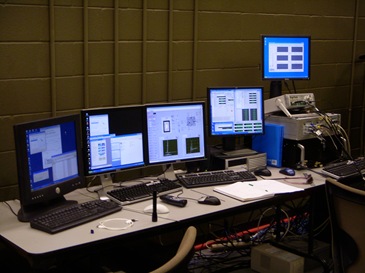

The tool can exert pressure from multiple angles simultaneously, as well as heat or cool the material being tested. All the data is streamed at around 400 MB per second to a large 18.4 TB SAN (storage area network), where it is then processed by the cluster. The cluster is built from 64 quad-core x64 Dell servers (256 cores in total) with a combined 320 GB of RAM, and it runs the Windows Compute Cluster Server 2003 operating system.
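The figures above invite a quick back-of-the-envelope check. This is a minimal sketch, not anything the lab uses; it assumes decimal units (1 TB = 1,000,000 MB), a sustained 400 MB/s stream into an initially empty SAN, and RAM spread evenly across the 64 servers. The constant and function names are illustrative only.

```python
# Back-of-the-envelope numbers for the cluster described above.
# Assumptions (not from the lab): decimal units (1 TB = 1,000,000 MB),
# a sustained 400 MB/s stream, an empty SAN at the start, and RAM
# divided evenly across servers and cores.

STREAM_MB_PER_S = 400     # approximate ingest rate quoted in the post
SAN_TB = 18.4             # SAN capacity quoted in the post
SERVERS = 64
CORES_PER_SERVER = 4      # quad-core, giving the 256 cores quoted
TOTAL_RAM_GB = 320


def hours_to_fill(capacity_tb: float, rate_mb_s: float) -> float:
    """Hours of continuous streaming before a store of this size is full."""
    capacity_mb = capacity_tb * 1_000_000
    return capacity_mb / rate_mb_s / 3600


total_cores = SERVERS * CORES_PER_SERVER
ram_per_server_gb = TOTAL_RAM_GB / SERVERS
ram_per_core_gb = TOTAL_RAM_GB / total_cores

if __name__ == "__main__":
    print(f"total cores: {total_cores}")
    print(f"hours to fill SAN: {hours_to_fill(SAN_TB, STREAM_MB_PER_S):.1f}")
    print(f"RAM per server: {ram_per_server_gb:.2f} GB")
    print(f"RAM per core: {ram_per_core_gb:.2f} GB")
```

Under those assumptions, a continuous 400 MB/s stream would fill the SAN in roughly 12.8 hours, and each core has about 1.25 GB of RAM to work with (5 GB per server).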

Once the data is collected, it is processed on the cluster and simulations can then be run. With 256 cores, they can submit 256 models and run 256 processing streams at the same time, all using the same code. More models mean more accurate results.
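Running the same code over many independent models at once is an embarrassingly parallel parameter sweep. The sketch below shows that pattern on a single machine using Python's `concurrent.futures.ProcessPoolExecutor`, which here stands in for the cluster's job scheduler. `run_model` and its (strength, load step) parameters are hypothetical placeholders, not the lab's code (which, per the post, the students write in C# and .NET).

```python
from concurrent.futures import ProcessPoolExecutor


def run_model(params: tuple[float, float]) -> int:
    """Hypothetical stand-in for one simulation run.

    params = (strength_mpa, load_step_mpa). Load is raised in fixed
    increments until it reaches the sample's assumed strength, and the
    number of increments is returned. A real run would be far costlier.
    """
    strength_mpa, load_step_mpa = params
    if load_step_mpa <= 0:
        raise ValueError("load step must be positive")
    stress, steps = 0.0, 0
    while stress < strength_mpa:
        stress += load_step_mpa
        steps += 1
    return steps


def run_sweep(param_sets, workers=None):
    """Run the same model code over many parameter sets in parallel.

    Each parameter set becomes one task; the pool hands tasks to worker
    processes (max_workers=None lets Python pick a default based on the
    CPU count). Results come back in the same order as param_sets.
    """
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_model, param_sets))


if __name__ == "__main__":
    # 256 hypothetical models, one per core on the cluster described above.
    sweep = [(150.0, 0.5 + 0.01 * i) for i in range(256)]
    results = run_sweep(sweep)
    print(len(results), results[:3])
```

The `if __name__ == "__main__":` guard matters here: on platforms that start worker processes by re-importing the main module, creating the pool at top level would otherwise recurse.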

What is really interesting is that this entire 64-server HPC cluster can dual boot. The cluster has two head nodes, one running Windows and one running Red Hat Linux, and the 64 processing nodes can be booted into either a Windows or a Linux HPC cluster. I asked Paul and his staff why they would take on the added complexity of a dual-boot system, and part of the answer was backward compatibility. They had already built a number of jobs and applications for Linux, dating from the days when they used other Linux clusters at U of T. That led to my next question: if you were already running on Linux clusters, why install a Windows cluster?

There were a few reasons, the biggest being the tools the students were using. The graduate students all develop their applications in C# and .NET using Visual Studio, then test their code on a multi-core computer before running it on the cluster. Having the same tools on both the development and production sides makes sense, so the department wanted to match what the students were already using and familiar with. The department was also already running Windows and Active Directory, so integration with the existing environment was key, as was the ability to keep using the management and monitoring tools already in place. A final reason for choosing a Windows cluster was visualization: they found the visualization capabilities on the Windows cluster much better than on Linux clusters, allowing for far more detailed, high-resolution models.

The use of this technology and these models goes far beyond mining. Roads, highways, bridges, and tunnels are all subject to pressures that stress the materials they are made of. Understanding the structure of those materials, how they react to these pressures, and how that reaction changes with temperature will make the construction of the roadways we all use safer as well.