Top 10 Common Causes of Slow Replication with DFSR

Hi, Ned again. Today I’d like to talk about troubleshooting DFS Replication (i.e. the DFSR service included with Windows Server 2003 R2, not to be confused with the File Replication Service). Specifically, I’ll cover the most common causes of slow replication and what you can do about them.

Update: Make sure you also read this much newer post to avoid common mistakes that can lead to instability or poor performance: https://blogs.technet.com/b/askds/archive/2010/11/01/common-dfsr-configuration-mistakes-and-oversights.aspx

Let’s start with ‘slow’. This loaded word is largely a matter of perception. Maybe DFSR was once much faster and you see it degrading over time? Has it always been too slow for your needs and now you’ve just gotten fed up? What will you consider acceptable performance so that you know when you’ve gotten it fixed? There are some methods that we can use to quantify what ‘slow’ really means:

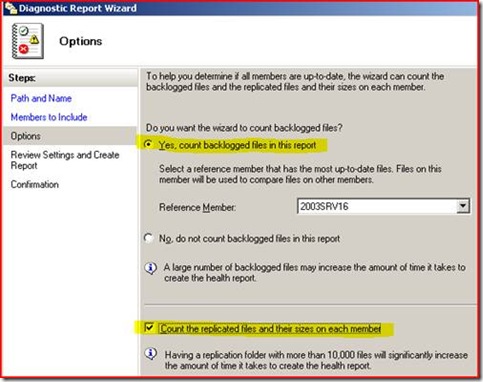

· DFSMGMT.MSC Health Reports

We can use the DFSR Diagnostic Reports to see how big the backlog is between servers and if that indicates a slowdown problem:

The generated report will tell you sending and receiving backlogs in an easy to read HTML format.

· DFSRDIAG.EXE BACKLOG command

If you’re into the command-line you can use the DFSRDIAG BACKLOG command (with options) to see how behind servers are in replication and if that indicates a slow down. Dfsrdiag is installed when you install DFSR on the server. So for example:

dfsrdiag backlog /rgname:slowrepro /rfname:slowrf /sendingmember:2003srv13 /receivingmember:2003srv17

Member <2003srv17> Backlog File Count: 10

Backlog File Names (first 10 files)

1. File name: UPDINI.EXE

2. File name: win2000

3. File name: setupcl.exe

4. File name: sysprep.exe

5. File name: sysprep.inf.pro

6. File name: sysprep.inf.srv

7. File name: sysprep_pro.cmd

8. File name: sysprep_srv.cmd

9. File name: win2003

10. File name: setupcl.exe

This command shows up to the first 100 file names and also gives an accurate snapshot count. Running it a few times over an hour can give you some basic trends. Note that hotfix 925377 resolves an error you may receive when continuously querying backlog, although you may want to consider installing the more current DFSR.EXE hotfix, which is 931685. Review the recommended hotfix list for more information.
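To turn those repeated runs into a trend, the count line can be pulled out of each run's output and compared over time. The following is only a minimal Python sketch and not part of the DFSR tooling: the function names and the sample counts are hypothetical, and it assumes the output contains a line of the form "Backlog File Count: N" as in the example above.

```python
import re

# Matches the count line from `dfsrdiag backlog` output, as shown in the
# example above, e.g. "Member <2003srv17> Backlog File Count: 10".
BACKLOG_RE = re.compile(r"Backlog File Count:\s*(\d+)")


def parse_backlog_count(output):
    """Return the backlog file count found in the command output, or None."""
    match = BACKLOG_RE.search(output)
    return int(match.group(1)) if match else None


def backlog_change_per_minute(samples):
    """Average change in backlog per minute between first and last sample.

    `samples` is a list of (minutes_elapsed, backlog_count) tuples.
    A positive result means the backlog is growing.
    """
    (t0, c0), (t1, c1) = samples[0], samples[-1]
    if t1 == t0:
        raise ValueError("samples must span a non-zero time interval")
    return (c1 - c0) / (t1 - t0)


sample_output = "Member <2003srv17> Backlog File Count: 10"
print(parse_backlog_count(sample_output))

# Hypothetical counts collected at 0, 20, 40 and 60 minutes.
print(backlog_change_per_minute([(0, 10), (20, 40), (40, 75), (60, 130)]))
```

A backlog that keeps growing across samples points to a real slowdown, while one that drains steadily may just be a burst of changes working its way through.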

· Performance Monitor with DFSR Counters enabled

DFSR updates the Perfmon counters on your R2 servers to include three new objects:

- DFS Replicated Folders

- DFS Replication Connections

- DFS Replication Service Volumes

Using these allows you to see historical and real-time statistics on your replication performance, including things like total files received, staging bytes cleaned up, and file installs retried – all useful in determining what true performance is as opposed to end user perception. Check out the Windows Server 2003 Technical Reference for plenty of detail on Perfmon and visit our sister AskPerf blog.

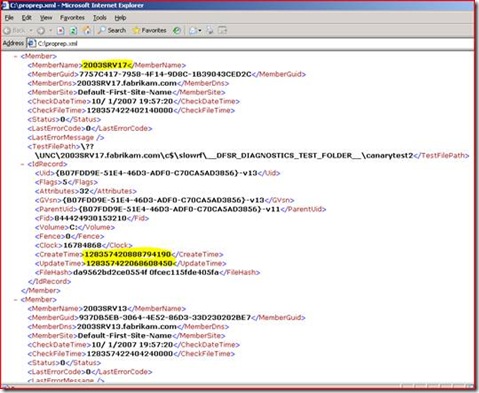

· DFSRDIAG.EXE PropagationTest and PropagationReport

By running DFSRDIAG.EXE you can create test files then measure their replication times in a very granular way. So for example, here I have three DFSR servers – 2003SRV13, 2003SRV16, and 2003SRV17. I can execute from a CMD line:

dfsrdiag propagationtest /rgname:slowrepro /rfname:slowrf /testfile:canarytest2

(wait a few minutes)

dfsrdiag propagationreport /rgname:slowrepro /rfname:slowrf /testfile:canarytest2 /reportfile:c:\proprep.xml

PROCESSING MEMBER 2003SRV17 [1 OUT OF 3]

PROCESSING MEMBER 2003SRV13 [2 OUT OF 3]

PROCESSING MEMBER 2003SRV16 [3 OUT OF 3]

Total number of members : 3

Number of disabled members : 0

Number of unsubscribed members : 0

Number of invalid AD member objects: 0

Test file access failures : 0

WMI access failures : 0

ID record search failures : 0

Test file mismatches : 0

Members with valid test file : 3

This generates an XML file with time stamps for when a file was created on 2003SRV13 and when it was replicated to the other two nodes.

The time stamp is in FILETIME format which we can convert with the W32tm tool included in Windows Server 2003.

<MemberName>2003srv17</MemberName>

<CreateTime>128357420888794190</CreateTime>

<UpdateTime>128357422068608450</UpdateTime>

w32tm /ntte 128357420888794190

148561 19:54:48.8794190 - 10/1/2007 3:54:48 PM (local time)

w32tm /ntte 128357422068608450

148561 19:56:46.8608450 - 10/1/2007 3:56:46 PM (local time)

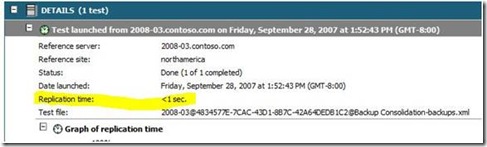

So around two minutes later our file showed up. Incidentally, this is something you can do in the GUI on Windows Server 2008 and it even gives you the replication time in a format designed for human beings!
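If you have many time stamps to convert, the same arithmetic that w32tm performs can be sketched in a few lines of Python. This is an illustration only: the helper name is made up, and it relies on the FILETIME definition of 100-nanosecond intervals counted from January 1, 1601 (UTC). Note that it prints UTC, whereas the w32tm output above also shows local time.

```python
from datetime import datetime, timedelta, timezone

# FILETIME counts 100-nanosecond intervals since 1601-01-01 00:00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_utc(filetime):
    """Convert a FILETIME value to a timezone-aware UTC datetime."""
    # Integer-divide by 10 to go from 100 ns ticks to whole microseconds.
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


# CreateTime and UpdateTime values from the propagation report shown above.
created = filetime_to_utc(128357420888794190)
updated = filetime_to_utc(128357422068608450)

print(created.isoformat())
print(updated.isoformat())
# Elapsed replication time in seconds.
print((updated - created).total_seconds())
```

Subtracting the two converted values gives the replication delay directly, which saves comparing the clock times by eye.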

Based on the above steps, let’s say we’re seeing a significant backlog and slower than expected replication of files. Let’s break down the most common causes as seen by MS Support:

1. Missing Windows Server 2003 Network QFE Hotfixes or Service Pack 2

Over the course of its lifetime there have been a few hotfixes for Windows Server 2003 that resolved intermittent issues with network connectivity. Those issues generally affected RPC, and DFSR (which relies heavily on RPC) became a casualty. To close these loops you can install KB938751 and KB922972 if you are on Service Pack 1 or 2. I highly recommend (in fact, I pretty much demand!) that you also install KB950224 to prevent a variety of DFSR issues - in fact, this hotfix should be on every Win2003 computer in your company.

2. Missing DFSR Service’s latest binary

The most recent version of DFSR.EXE always contains updates that not only fix bugs but also generally improve replication performance. We now have a KB article that we are keeping up to date with the latest files we recommend running for DFSR:

KB 958802 - List of currently available hotfixes for Distributed File System (DFS) technologies in Windows Server 2003 R2

KB 968429 - List of currently available hotfixes for Distributed File System (DFS) technologies in Windows Server 2008 and in Windows Server 2008 R2

3. Out-of-date Network Card and Storage drivers

You would never run Windows Server 2003 with no Service Packs and no security updates, right? So why run it without updated NIC and storage drivers? A large number of performance issues can be resolved by making sure that you keep your drivers current. Trust me when I say that vendors don’t release new binaries at heavy cost to themselves unless there’s a reason for them. Check your vendor web pages at least once a quarter and test test test.

Important note: If you are in the middle of an initial sync, you should not be rebooting your server! All of the above fixes will require reboots. Wait it out, or assume the risk that you may need to run through initial sync again.

4. DFSR Staging directory is too small for the amount of data being modified

DFSR lives and dies by its inbound/outbound Staging directory (stored under <your replicated folder>\dfsrprivate\staging in R2). By default, it has a 4GB elastic quota set that controls the size of files stored there for further replication. Why elastic? Because experience with FRS showed us having a hard-limit quota that prevented replication was A Bad Idea™.

Why is this quota so important? Because as long as Staging stays below the high water mark (90% of the quota by default), DFSR replicates at the maximum rate of 9 files (5 outbound, 4 inbound) for the entire server. If the staging quota of a replicated folder is exceeded then, depending on the number of files currently being replicated for that replicated folder, DFSR may end up slowing replication for the entire server until staging usage drops below the low water mark, which is computed by multiplying the staging quota by the low water mark percentage (default is 60%).

If the staging quota of a replicated folder is exceeded and the current number of inbound replicated files in progress for that replicated folder exceeds 3 (15 in Win2008) then one task is used by staging cleanup and the three (15 in Win2008) remaining tasks are waiting for staging cleanup to complete. Since there is a maximum of four (15 in Win2008) concurrent tasks, no further inbound replication can take place for the entire system.

If the staging quota of a replicated folder is exceeded and the current number of outbound replicated files in progress for that replicated folder exceeds 5 (16 in Win2008) then the RPC server cannot serve any more RPC requests: the maximum number of RPC requests processed at the same time is five (16 in Win2008), and all five (16 in Win2008) requests are waiting for staging cleanup to complete.

You will see DFS Replication 4202, 4204, 4206 and 4208 events about this activity, and if this happens often (multiple times per day) your quota is too small. See the section Optimize the staging folder quota and replication throughput in the Designing Distributed File Systems guidelines for tuning this correctly. You can change the quota using the DFSR Management MMC (dfsmgmt.msc). Select Replication in the left pane, then the Memberships tab in the right pane. Double-click a replicated folder and select the Advanced tab to view or change the Quota (in megabytes) setting. Your event will look like:

Event Type: Warning

Event Source: DFSR

Event Category: None

Event ID: 4202

Date: 10/1/2007

Time: 10:51:59 PM

User: N/A

Computer: 2003SRV17

Description:

The DFS Replication service has detected that the staging space in use for the replicated folder at local path D:\Data\General is above the high watermark. The service will attempt to delete the oldest staging files. Performance may be affected.

Additional Information:

Staging Folder: D:\Data\General\DfsrPrivate\Staging\ContentSet{9430D589-0BE2-400C-B39B-D0F2B6CC972E}-{A84AAD19-3BE2-4932-B438-D770B54B8216}

Configured Size: 4096 MB

Space in Use: 3691 MB

High Watermark: 90%

Low Watermark: 60%

Replicated Folder Name: general

Replicated Folder ID: 9430D589-0BE2-400C-B39B-D0F2B6CC972E

Replication Group Name: General

Replication Group ID: 0FC153F9-CC91-47D0-94AD-65AA0FB6AB3D

Member ID: A84AAD19-3BE2-4932-B438-D770B54B8216

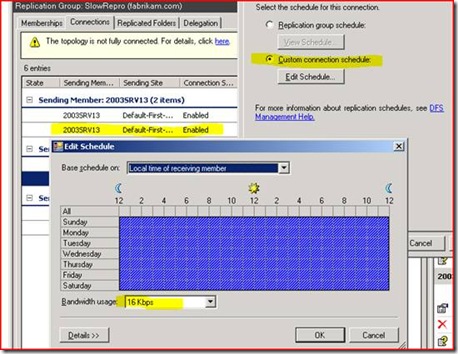
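The watermark arithmetic behind the 4202 event is easy to check by hand. As a rough sketch (the function name is hypothetical, and the percentages are simply the defaults reported in the event above), the thresholds for a given quota work out like this in Python:

```python
def staging_watermarks_mb(quota_mb, high_pct=90, low_pct=60):
    """Return (high, low) water marks in MB for a staging quota."""
    return quota_mb * high_pct / 100, quota_mb * low_pct / 100


# Values from the 4202 event shown above.
quota_mb = 4096
in_use_mb = 3691

high_mb, low_mb = staging_watermarks_mb(quota_mb)
print(high_mb, low_mb)

# The event fires because usage is above the high water mark.
print(in_use_mb > high_mb)

# Space that would need to be freed to get back down to the low water mark.
print(in_use_mb - low_mb)
```

With the default 4096 MB quota the high water mark is about 3686 MB, so the 3691 MB reported in the event is only just over the line. Plugging in a candidate quota shows how much headroom a larger setting would give.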

5. Bandwidth Throttling or Schedule windows are too aggressive

If your replication schedule on the Replication Group or the Connections is set to not replicate from 9-5, you can bet replication will appear slow! If you’ve artificially throttled the bandwidth to 16Kbps on a T3 line things will get pokey. You would be surprised at the number of cases we’ve gotten here where one administrator called about slow replication and it turned out that one of his colleagues had made this change and not told him. You can view and adjust these in DFSMGMT.MSC.

You can also use the Dfsradmin.exe tool to export the schedule to a text file from the command-line. Like Dfsrdiag.exe, Dfsradmin is installed when you install DFSR on a server.

Dfsradmin rg export sched /rgname:testrg /file:rgschedule.txt

You can also export the connection-specific schedules:

Dfsradmin conn export sched /rgname:testrg /sendmem:fabrikam\2003srv16 /recvmem:fabrikam\2003srv17 /file:connschedule.txt

The output is concise but can be unintuitive. Each row represents a day of the week. Each column represents an hour in the day. A hex value (0-F) represents the bandwidth usage for each 15-minute interval in an hour. F=Full, E=256M, D=128M, C=64M, B=32M, A=16M, 9=8M, 8=4M, 7=2M, 6=1M, 5=512K, 4=256K, 3=128K, 2=64K, 1=16K, 0=No replication. The values are either in megabits per second (M) or kilobits per second (K).
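Reading those hex digits gets easier with a small lookup table. The Python sketch below only decodes a run of digits using the mapping listed above; the function name is hypothetical, and it deliberately makes no assumption about the rest of the export file layout (separators, labels), which is not reproduced here.

```python
# Hex digit -> bandwidth setting, taken from the list in the text above.
BANDWIDTH_BY_DIGIT = {
    "F": "Full",
    "E": "256 Mbps", "D": "128 Mbps", "C": "64 Mbps", "B": "32 Mbps",
    "A": "16 Mbps", "9": "8 Mbps", "8": "4 Mbps", "7": "2 Mbps",
    "6": "1 Mbps", "5": "512 Kbps", "4": "256 Kbps", "3": "128 Kbps",
    "2": "64 Kbps", "1": "16 Kbps", "0": "No replication",
}


def decode_intervals(hex_digits):
    """Return one bandwidth label per hex digit (one digit = 15 minutes).

    Raises KeyError if a character is not a hex digit from the table.
    """
    return [BANDWIDTH_BY_DIGIT[digit] for digit in hex_digits.upper()]


# One hypothetical hour: full speed for 30 minutes, then 256 Kbps, then off.
print(decode_intervals("FF40"))
```

Any run of zeros that lines up with business hours is a quick sign that the schedule, rather than DFSR itself, is the reason replication looks slow.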

And a bit more about throttling - DFS Replication does not perform bandwidth sensing. You can configure DFS Replication to use a limited amount of bandwidth on a per-connection basis, and DFS Replication can saturate the link for short periods of time. Also, the bandwidth throttling is not perfectly accurate though it may be “close enough.” This is because we are trying to throttle bandwidth by throttling our RPC calls. Since DFSR is as high as you can get in the network stack, we are at the mercy of various buffers in lower levels of the stack, including RPC. The net result is that if one analyzes the raw network traffic, it will tend to be extremely ‘bursty’.

6. Large amounts of sharing violations

Sharing violations are a fact of life in a distributed network - users open files and gain exclusive WRITE locks in order to modify their data. Periodically those changes are written within NTFS by the application and the USN Change Journal is updated. DFSR monitors that journal and will attempt to replicate the file, only to find that it cannot because the file is still open. This is a good thing – we wouldn’t want to replicate a file that’s still being modified, naturally.

With enough sharing violations though, DFSR can start spending more time retrying locked files than it does replicating unlocked ones, to the detriment of performance. If you see a considerable number of DFS Replication event log entries for 4302 and 4304 like the one below, you may want to start examining how files are being used.

Event ID: 4302 Source DFSR Type Warning

Description

The DFS Replication service has been repeatedly prevented from replicating a file due to consistent sharing violations encountered on the file. A local sharing violation occurs when the service fails to receive an updated file because the local file is currently in use.

Additional Information:

File Path: <drive letter path to folder\subfolder>

Replicated Folder Root: <drive letter path to folder>

File ID: {<guid>}-v<version>

Replicated Folder Name: <folder>

Replicated Folder ID: <guid2>

Replication Group Name: <dfs path to folder>

Replication Group ID: <guid3>

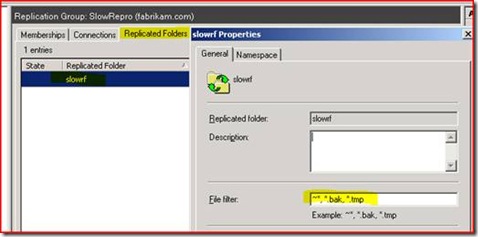

Member ID: <guid4>

Many applications can create a large number of spurious sharing violations, because they create temporary files that shouldn’t be replicated. If they have a predictable extension, you can prevent DFSR from trying to replicate them by setting an exception in DFSMGMT.MSC. The default file filter excludes files matching ~*, *.bak, and *.tmp, so for example the Microsoft Office temporary files ( ~* ) are excluded by default.

Some applications will allow you to specify an alternate location for temporary and working files, or will simply follow the working path as specified in their shortcuts. But sometimes, this type of behavior may be unavoidable, and you will be forced to live with it or stop storing that type of data in a DFSR-replicated location. This is why our recommendation is that DFSR be used to store primarily static data, and not highly dynamic files like Roaming Profiles, Redirected Folders, Home Directories, and the like. This also helps with conflict resolution scenarios where the same or multiple users update files on two servers in between replication, and one set of changes is lost.

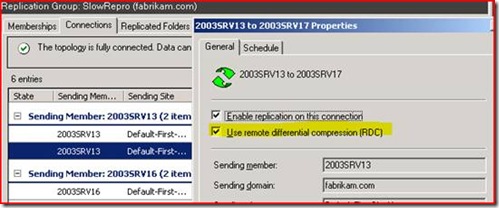

7. RDC has been disabled over a WAN link.

Remote Differential Compression is DFSR’s coolest feature – instead of replicating an entire file like FRS did, it replicates only the changed portions. This means your 20MB spreadsheet that had one row modified might only replicate a few KB over the wire. If you disable RDC though, changing any portion of a file’s data will cause the entire file to replicate, and if the connection is bandwidth-constrained this can lead to much slower performance. You can set this in DFSMGMT.MSC.

As a side note, in an extremely high bandwidth (Gigabit+) scenario where files are changed significantly, it may actually be faster to turn RDC off. Computing RDC signatures and staging that data is computationally expensive, and the CPU time needed to calculate everything may actually be slower than just moving the whole file in that scenario. You really need to test in your environment to see what works for you, using the PerfMon objects and counters included for DFSR.
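To get a feel for why sending only changed portions matters, here is a toy Python illustration. To be clear, this is not the RDC algorithm: it swaps in simple fixed-size block hashing with an arbitrary 64 KB block size, and every name in it is hypothetical. It only shows the general idea of comparing signatures and transferring the parts that differ.

```python
import hashlib

BLOCK_SIZE = 64 * 1024  # arbitrary block size chosen for this illustration


def block_signatures(data, block_size=BLOCK_SIZE):
    """Return a SHA-256 digest for each fixed-size block of `data`."""
    return [hashlib.sha256(data[i:i + block_size]).digest()
            for i in range(0, len(data), block_size)]


def bytes_to_send(old, new, block_size=BLOCK_SIZE):
    """Count bytes in blocks of `new` whose signature is not present in `old`."""
    known = set(block_signatures(old, block_size))
    total = 0
    for i in range(0, len(new), block_size):
        block = new[i:i + block_size]
        if hashlib.sha256(block).digest() not in known:
            total += len(block)
    return total


# Build a 20 MB "file" out of 320 distinct 64 KB blocks.
old = b"".join(i.to_bytes(4, "big") * (BLOCK_SIZE // 4) for i in range(320))

# Change a single byte somewhere in the middle.
changed = bytearray(old)
changed[5_000_000] ^= 0xFF
new = bytes(changed)

print(len(new))                 # size of the whole file
print(bytes_to_send(old, new))  # size of the one block that differs
```

The hashing step is also where the CPU cost mentioned above comes from: every block of the file has to be read and hashed before anything can be skipped.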

8. Incompatible Anti-Virus software or other file system filter drivers

It’s a problem that goes back to FRS and Windows 2000 in 1999 – some anti-virus applications were simply not written with the concept of file replication in mind. If an AV product uses its own alternate data streams to store ‘this file is scanned and safe’ information, for example, it can cause that file to replicate out even though to an end-user it is completely unchanged. AV software may also quarantine or reanimate files so that older versions reappear and replicate out. Older open-file Backup solutions that don’t use VSS-compliant methods also have filter drivers that can cause this. When you have a few hundred thousand files doing this, replication can definitely slow down!

You can use Auditing to see if the originating change is coming from the SYSTEM account and not an end user. Be careful here – auditing can be expensive for performance. Also make sure that you are looking at the original change, not the downstream replication change result (which will always come from SYSTEM, since that’s the account running the DFSR service).

There are only a couple things you can do about this if you find that your AV/Backup software filter drivers are at fault:

- Don’t scan your Replicated Folders (not a recommended option except for troubleshooting your slow performance).

- Take a hard line with your vendor about getting this fixed for that particular version. They have often done so in the past, but issues can creep back in over time and newer versions.

9. File Server Resource Manager (FSRM) configured with quotas/screens that block replication.

So insidious! FSRM is another component that shipped with R2 that can be used to block file types from being copied to a server, or limit the quantity of files. It has no real tie-in to DFSR though, so it’s possible to configure DFSR to replicate all files and FSRM to prevent certain files from being replicated in. Since DFSR keeps retrying, it can lead to backlogs and situations where too much time is spent retrying backlogged files that can never move and slowing up files that could move as a consequence.

When this is happening, debug logs (%systemroot%\debug\dfsr*.*) will show entries like:

20070605 09:33:36.440 5456 MEET 1243 <Meet::Install> -> WAIT Error processing update. updateName:teenagersfrommars.mp3 uid:{3806F08C-5D57-41E9-85FF-99924DD0438F}-v333459 gvsn:{3806F08C-5D57-41E9-85FF-99924DD0438F}-v333459 connId:{6040D1AC-184D-49DF-8464-35F43218DB78} csName:Users csId:{C86E5BCE-7EBF-4F89-8D1D-387EDAE33002} code:5 Error:

+ [Error:5(0x5) <Meet::InstallRename> meet.cpp:2244 5456 W66 Access is denied.]

Here we can see that teenagersfrommars.mp3 is supposed to be replicated in, but it failed with an Access Denied. If we run the following from CMD on that server:

filescrn.exe screen list

We see that…

File screens on machine 2003SRV17:

File Screen Path: C:\sharedrf

Source Template: Block Audio and Video Files (Matches template)

File Groups: Audio and Video Files (Block)

Notifications: E-mail, Event Log

… someone has configured FSRM using the default Audio/Video template which blocks MP3 files and it happens to be against our c:\sharedrf folder we are replicating. To fix this we can do one or more of the following:

- Make the DFSR filters match the FSRM filters

- Delete any files that cannot be replicated due to the FSRM rules.

- Prevent FSRM from actually blocking by switching it from “Active Screening” to “Passive Screening” by using its snap-in. This will generate events and email warnings to the administrator, but not prevent the files from being moved in.
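When many files are affected, it can help to pull the file names and error codes out of the debug log entries shown earlier in this section rather than reading them one by one. The Python sketch below is a rough illustration, not an official log parser: the function name is hypothetical, and it assumes each "Error processing update" entry sits on a single line with the updateName, uid and code fields in the order shown in the sample.

```python
import re

# Captures the file name and numeric error code from an
# "Error processing update" entry laid out like the sample above.
UPDATE_RE = re.compile(r"updateName:(?P<name>.+?) uid:\{.*?\bcode:(?P<code>\d+)")


def failed_updates(log_text):
    """Return (file name, error code) pairs for failed update entries."""
    results = []
    for line in log_text.splitlines():
        if "Error processing update" not in line:
            continue
        match = UPDATE_RE.search(line)
        if match:
            results.append((match.group("name"), int(match.group("code"))))
    return results


sample_log = (
    "20070605 09:33:36.440 5456 MEET 1243 <Meet::Install> -> WAIT "
    "Error processing update. updateName:teenagersfrommars.mp3 "
    "uid:{3806F08C-5D57-41E9-85FF-99924DD0438F}-v333459 "
    "gvsn:{3806F08C-5D57-41E9-85FF-99924DD0438F}-v333459 "
    "connId:{6040D1AC-184D-49DF-8464-35F43218DB78} csName:Users "
    "csId:{C86E5BCE-7EBF-4F89-8D1D-387EDAE33002} code:5 Error:\n"
    "+ [Error:5(0x5) <Meet::InstallRename> meet.cpp:2244 5456 W66 Access is denied.]"
)

print(failed_updates(sample_log))
```

Entries with code 5 (Access is denied) whose extensions match an FSRM file group are good candidates for the DFSR filter or for cleanup.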

10. Un-staged or improperly pre-staged data leading to slow initial replication.

Wake up, this is the last one!

Sometimes replication is only slow in the initial sync phase. This can have a number of causes:

- Users are modifying files while initial replication is going on – ideally, you should set up your replication over a change control window like a weekend or overnight.

- You don’t have the latest DFSR.EXE from #2 above.

- You have not pre-staged data, or you’ve done it in a way that actually alters the files, forcing most or all of each file to replicate initially.

Here are the recommendations for pre-staging data that will give you the best bang for your buck, so that initial sync flies by and replication can start doing its real day-to-day job:

(Make sure you have latest DFSR.EXE installed on all nodes before starting!)

- ROBOCOPY.EXE - works fine as long as you follow the rules in this blog post.

- XCOPY.EXE - Xcopy with the /X switch will copy the ACL correctly and not modify the files in any way.

- Windows Backup (NTBACKUP) - The Windows Backup tool by default will restore the ACLs correctly (unless you uncheck the Advanced Restore Option for Restore security setting, which is checked by default) and not modify the files in any way. [Ned - if using NTBACKUP, please examine guidance here]

I prefer NTBACKUP because it also compresses the data and is less synchronous than XCOPY or ROBOCOPY [Ned - see above]. Some people ask ‘why should I pre-stage, shouldn’t DFSR just take care of all this for me?’. The answer is yes and no: DFSR can handle this, but when you add in all the overhead of effectively every file being ‘modified’ in the database (they are new files as far as DFSR is concerned), a huge volume of data may lead to slow initial replication times. If you take all the heavy lifting out and let DFSR just maintain, things may go far faster for you.

As always, we welcome your comments and questions,

- Ned Pyle