How to train your MAML – Publishing your work

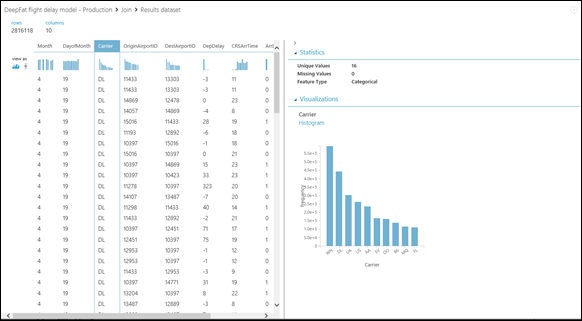

Before I get into my next post, you may have noticed that ML has changed compared with a few weeks ago: the main page previews experiments differently, and the visualization of data has also changed.

One other problem hit me: some parts of my demo experiment didn’t work or ran really slowly. For example, the Sweep Parameters module seems to be really slow now. I did ask about this and apparently it’s not been configured to run as a parallel process. So bear in mind that MAML is still in preview at the moment, which means it’s going to change and stuff might break. What’s important is that we feed back to the team so we all get the tool we need.

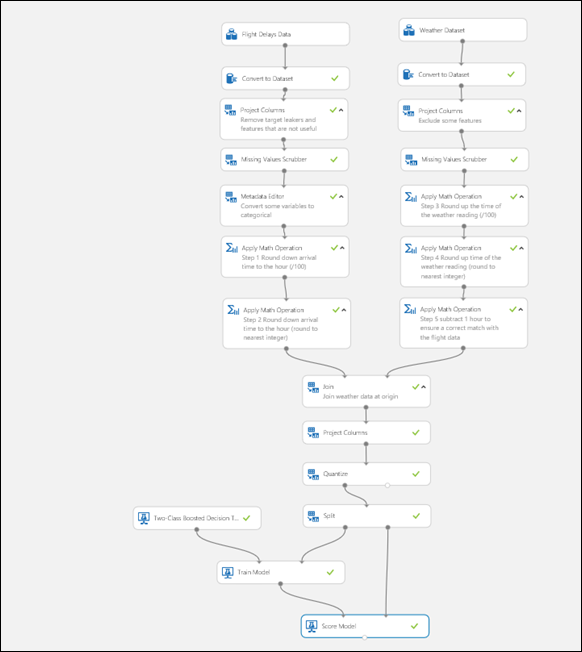

What I want to show in this post is how we can publish the work we have done so far. Because of the time the Sweep Parameters module takes at the moment, I am going to suggest we swap it out for the Train Model module: my experiment takes 4 minutes to run with Train Model compared to 5 hours with Sweep Parameters! While we are doing this we can also remove the less accurate algorithm (Two-Class Logistic Regression) and keep just the other one, with the Train Model module set to train against the column Arrdel15 (whether or not the flight is delayed).

Now we’re ready to publish our experiment and share it with the world but what does that mean exactly?

MAML allows us to expose a trained experiment as a web service, so that a transaction can be sent to it for analysis and a score returned to the calling application, web site and so on. In our case we could send an individual flight with the relevant weather data and get back the likelihood that the flight will be delayed. While this may not be a unique feature, the ease with which it can be achieved makes MAML even more compelling, so let’s see how it works.

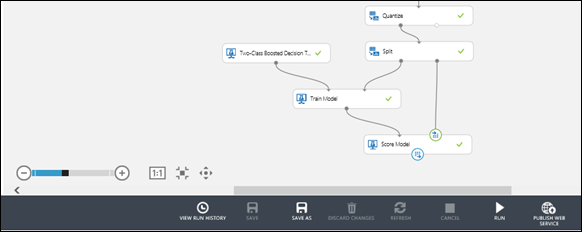

The first thing we need to do is define the data inputs and outputs, by right-clicking on the right-hand input port and selecting Set as Publish Input, and then right-clicking on the output port and selecting Set as Publish Output. This declares what data needs to be passed in and what data we’ll get out of the model when it’s published. We then just need to rerun the experiment, and we’ll see that the option to publish a web service at the bottom of the screen is now enabled.

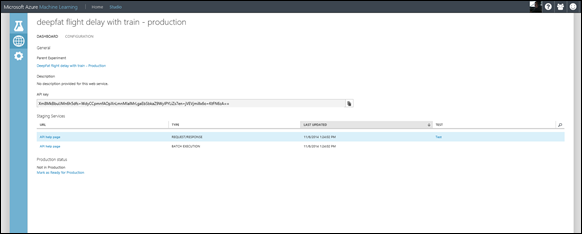

If we select this option, our experiment is published as a draft and we get this screen.

If we click on the test option on the right we can see how this works: we’ll be presented with the input data we need to fill out in order to get a flight delay prediction back.

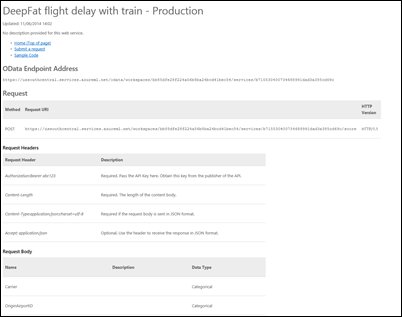

We can also see how to use the service, either in batch or transaction by transaction, by clicking on the appropriate API Help page link. This gives the link and the format of the query we need to pass to our published service, as well as links to sample code in C#, Python and R.
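To give a feel for what calling the service transaction by transaction might look like, here is a minimal Python sketch that builds the request without sending it. Treat everything specific in it as an assumption: the URL, the API key, the shape of the JSON body, the bearer-token header and the flight column names are all placeholders, and the API Help page for your own service is the place to get the real ones.

```python
import json
import urllib.request

# Hypothetical placeholders: copy the real values from your service's API Help page.
SERVICE_URL = "https://example.invalid/score"
API_KEY = "your-api-key"


def build_score_request(features, url=SERVICE_URL, api_key=API_KEY):
    """Build (but do not send) a POST request carrying one flight to score."""
    # Assumed body shape; the API Help page shows the format your service expects.
    body = json.dumps({"Instance": {"FeatureVector": features}}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # Assumed authentication scheme: the API key sent as a bearer token.
        "Authorization": "Bearer " + api_key,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Illustrative column names and values only. Arrdel15 is what we want
# predicted, so it is not part of the input.
flight = {"Carrier": "DL", "DayOfWeek": "3", "CRSDepTime": "1455"}
request = build_score_request(flight)

# To call a live service, uncomment the next two lines:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```

Keeping the request building separate from the sending means you can check the body and headers against the API Help page before you spend any transactions on it.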

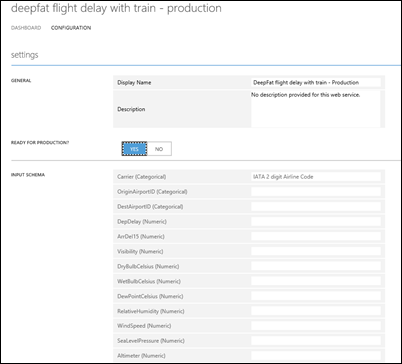

However, our web service is not yet live in production; it’s just a draft. To put it into production we need to go back to the dashboard for the model and select the Mark as Ready for Production link. We can then add descriptions to the input fields and set the Ready for Production flag to Yes.

Here I have properly described what the Carrier ID is and then clicked Save to confirm what I have done.

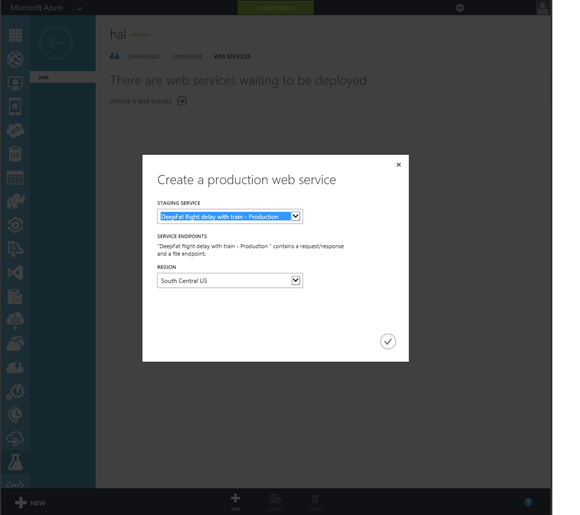

Having done all of that, we need to go back to the dashboard view, where we’ll now get the option to deploy to production. If we do that, we’ll be taken back to the ML Workspace dashboard in the Azure portal, where we can create a Production Web Service.

There is a reason for all of these steps, and that is to separate out who does the publishing from the data science guys in a larger team. Once the service is live, note that we can see if there are updates available. For example, the model might be marked for publishing again as it is continually refined by the team who create and train experiments.

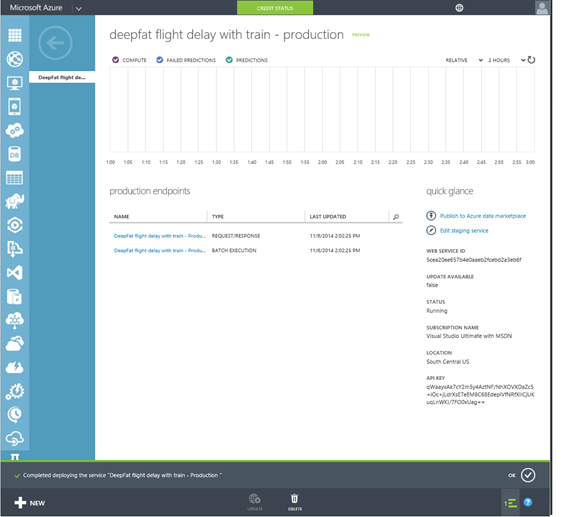

Now that our web service is in production, we get a dashboard to show how it’s being used that’s very similar to other services in Azure like virtual machines and SQL databases.

So that’s a quick run through of an end-to-end experiment in Azure ML, which I hope has aroused your curiosity. However, you might be wondering how much this stuff costs, and that’s another recent change to the service. There is a free version that lets you work with data sets of up to 10 GB on a single-node server and publish a staging web API, just as I have done here. Above that is a standard edition which does incur costs, which are detailed here. Essentially you pay for every hour you are running experiments, and then when you publish you pay per prediction hour and per thousand transactions (currently these are discounted while the service is in preview and are 48p per prediction hour and 12p per thousand predictions).
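To get a rough feel for what a published service might cost at those preview prices, here is a small Python sketch. It assumes billing is simply linear in prediction hours and in predictions, with no rounding up to the next hour or the next thousand, which may not match how the service actually bills. It also leaves out the cost of the hours spent running experiments, as no price for that is quoted here. The function name and the usage figures are purely illustrative.

```python
# Preview prices quoted above, in pence; check the pricing page for current figures.
PENCE_PER_PREDICTION_HOUR = 48
PENCE_PER_THOUSAND_PREDICTIONS = 12


def estimate_cost_pence(prediction_hours, predictions):
    """Estimate the publishing cost in pence, assuming simple linear billing."""
    hourly = prediction_hours * PENCE_PER_PREDICTION_HOUR
    per_transaction = predictions * PENCE_PER_THOUSAND_PREDICTIONS / 1000
    return hourly + per_transaction


# Example: 10 prediction hours and 50,000 predictions.
cost = estimate_cost_pence(10, 50_000)
print(f"Estimated cost: £{cost / 100:.2f}")  # prints "Estimated cost: £10.80"
```

In that example the prediction hours come to £4.80 and the 50,000 predictions to £6.00.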

Couple those prices with the ease of use of the service and I think that MAML would take off even if it existed in isolation. However, there are other recently announced data services on Azure, like Near Real Time analytics, Storm on HDInsight, Cloudera and Data Factory, which I would assert means that Azure is the best place to derive insight from data, which by definition is data science.