Virtualisation for the DBA part 3 – SQL Server Performance

As I have said already in this series, the major concern most DBAs have when virtualising SQL Server is performance. I think this is actually a bit of a myth for a number of reasons:

- Virtualisation just keeps getting better. This is down to a partnership between the hardware and software industries. We have had a couple of generations of CPUs from AMD and Intel with specific support for virtualisation, and Microsoft’s Hyper-V virtualisation offering will only work on hardware with these CPUs in.

- Most servers aren’t that busy. Typically, an average server in a data centre runs at only 10-20% load most of the time, even if it is running SQL Server.

- Performance is not properly measured. In order to understand how much performance you are losing in a virtual world, you need to measure how well the system is performing now. In older versions of SQL Server this means you have either invested money in 3rd party tools to do this or invested your time in writing your own.
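To make the measurement point concrete, here is a minimal sketch of the comparison I have in mind. The function name and the throughput figures are hypothetical, made up purely for illustration; the idea is simply that you need a physical baseline before you can put a number on what virtualisation costs you.

```python
def overhead_percent(baseline: float, virtualised: float) -> float:
    """Percentage of throughput lost relative to the physical baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - virtualised) * 100.0 / baseline


# Hypothetical figures: batch requests/sec measured before and after the move.
physical = 2000.0
virtual = 1800.0
print(f"{overhead_percent(physical, virtual):.1f}% slower than the baseline")
```

Without the first number, the second one tells you nothing about what you have lost.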

There are several things in SQL Server 2008 to help with these issues:

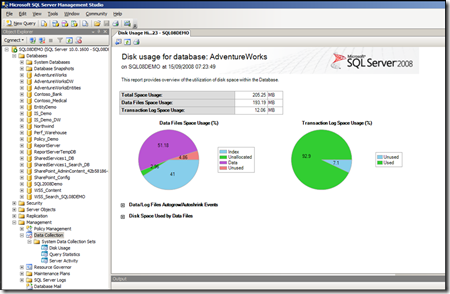

- Data Collection, aka Performance Warehouse, allows you to quickly set up a pre-defined data warehouse on a designated SQL Server instance and then collect telemetry from other servers/instances at periodic intervals to see how they are performing via a suite of custom reports.

The clever thing about these reports is that they are all linked, allowing you to drill down to a particular query that might be running slowly or blocking other queries. The really clever thing is that it only takes about a dozen mouse clicks to set all this up and leave it running. The only potential downside is that it can only monitor SQL Server 2008 instances.

- Data Compression. Given that the first thing that slows down a database is IO, compression can be useful in getting more data off the disk per read for a little bit of CPU overhead. There are two parts to this. The first, row compression, stores fixed-width fields (e.g. decimal and the date and time data types) so that they only take up the space actually used, i.e. they behave like varchar as opposed to char. You can then elect to further compress each page of a table, index or partition; this page compression works by identifying recurring values, storing them once in the header of the page and replacing each occurrence in the column with a token. One thing to note is that this is an Enterprise Edition feature.
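The token idea behind page compression is easier to see in a toy example. The sketch below is only an illustration of the principle, not SQL Server's actual on-disk format: `compress_page` and `decompress_page` are hypothetical helpers, and the "page" is just a Python list of column values.

```python
from collections import Counter


def compress_page(values):
    """Replace values that recur on the page with small integer tokens.

    Returns (dictionary, rows). The dictionary plays the role of the page
    header; each row is either ("tok", index) or ("raw", value).
    """
    counts = Counter(values)
    # Only values seen more than once are worth a dictionary entry.
    dictionary = [value for value, seen in counts.items() if seen > 1]
    index = {value: i for i, value in enumerate(dictionary)}
    rows = [("tok", index[v]) if v in index else ("raw", v) for v in values]
    return dictionary, rows


def decompress_page(dictionary, rows):
    """Rebuild the original values from the header dictionary and the rows."""
    return [dictionary[x] if kind == "tok" else x for kind, x in rows]


page = ["London", "Paris", "London", "London", "Berlin", "Paris"]
dictionary, rows = compress_page(page)
assert decompress_page(dictionary, rows) == page  # the round trip is lossless
```

Here "London" and "Paris" are stored once in the dictionary and every occurrence shrinks to a token, while "Berlin" appears only once and is left as it is. The more repetition there is on a page, the more you save.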

As I have mentioned before, how you use resources when virtualising is also important, and the most important of these is disk usage…

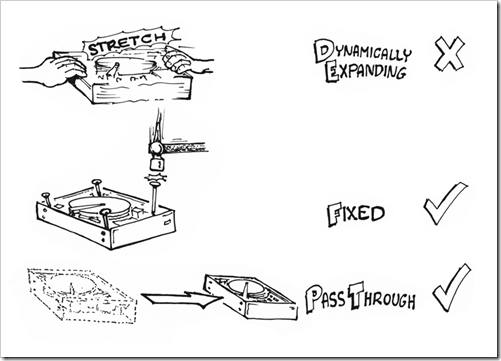

Dynamic (i.e. dynamically expanding) virtual hard disks should only be used for dev work and for testing where performance is not being measured. Fixed-size virtual hard disks perform nearly as well as pass-through disks (i.e. where the database itself is kept on a physical disk or a LUN in a SAN).

Although it might seem that Microsoft has vast resources at its disposal, it is not immune to the current recession, nor did it get where it is today by wasting money. It therefore has an ongoing project to virtualise SQL Server as part of its Green IT strategy (details here).

So hopefully we can discount performance as a reason not to virtualise, unless your demands exceed the capability of your virtual platform to support what you need. In the case of Hyper-V this would be 4 virtual processors (think of that as a four-core CPU) and 64 GB of memory (in Windows Server 2008 R2).
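As a rough back-of-the-envelope check, something like the sketch below can tell you whether a server is an obvious candidate. It assumes, as a simplification, that one busy physical core maps onto one virtual processor; the helper names and the example servers are hypothetical, and only the 4 virtual processor and 64 GB limits come from the figures above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuestLimits:
    """Per-VM ceilings quoted above for Hyper-V in Windows Server 2008 R2."""
    virtual_processors: int = 4
    memory_gb: int = 64


def busy_cores(physical_cores: int, peak_load: float) -> float:
    """Cores actually kept busy at peak, e.g. 8 cores at 20% load is 1.6."""
    return physical_cores * peak_load


def fits_in_guest(physical_cores, peak_load, memory_gb, limits=GuestLimits()):
    """True if the peak CPU demand and the memory both fit inside one VM."""
    return (busy_cores(physical_cores, peak_load) <= limits.virtual_processors
            and memory_gb <= limits.memory_gb)


print(fits_in_guest(8, 0.20, 32))   # lightly loaded 8-core box with 32 GB
print(fits_in_guest(16, 0.60, 96))  # busy 16-core box with 96 GB
```

The first server fits comfortably; the second exceeds both limits and is the kind of workload that should stay physical for now. Use your measured peak load here rather than the average, or you will size the guest too small.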

For further reading on SQL Server performance on Hyper-V see this whitepaper from the SQL Customer Advisory Team (SQLCAT).
