After Migrating Windows Server 2008 R2 Hyper-V Cluster to Windows Server 2012 R2 Hyper-V Cluster, Virtual Machines Fail to Come Online in Failover Cluster Manager

Lots of my customers are upgrading their Windows Server 2008 R2 Hyper-V Cluster nodes to Windows Server 2012 R2. The increased stability and reliability that Windows Server 2012 R2 provides, combined with the news that Windows Server 2016 will support rolling upgrades from Windows Server 2012 R2 Cluster nodes, make this a no-brainer.

The migration is fairly easy and straightforward. It’s a piece of cake really, especially if you’re reusing the same hardware. Most of my customers don’t have any issues at all.

That is why I was surprised when one of my customers ran into issues with what seemed to be random virtual machines failing to come online after being migrated to their new Windows Server 2012 R2 Hyper-V Cluster.

My customer is specifically using the Copy Cluster Roles Wizard to migrate from their Windows Server 2008 R2 Hyper-V Clusters to Windows Server 2012 R2.

When they ran the Copy Cluster Roles Wizard, it completed successfully. Everything was green. They mapped the virtual machines to the new Hyper-V virtual switch they created and everything looked great… No errors at all… That is, until they attempted to bring the VMs online in Failover Cluster Manager and they failed to come online.

Not exactly what you want to see at 12:30am when you only have a 2-hour maintenance window.

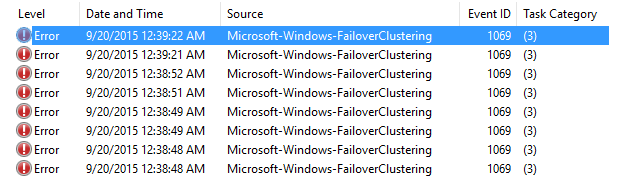

So what do you do then? We dived into the System Event Logs to see why the VMs were failing to come online. Of course we see a whole slew of Failover Cluster Event ID: 1069 events.

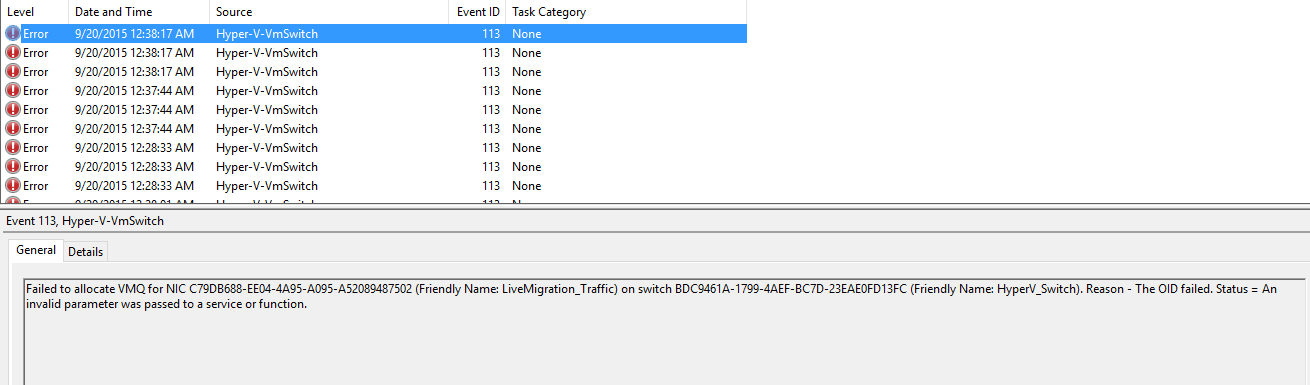

But as we scrolled back in time, we started to see these. Ahhh. Our first clue.

Log Name: System

Source: Microsoft-Windows-Hyper-V-VmSwitch

Date: 9/20/2015 12:38:17 AM

Event ID: 113

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: <edited out>.<edited out>.com

Description:

Failed to allocate VMQ for NIC C79DB688-EE04-4A95-A095-A52089487502 (Friendly Name: LiveMigration_Traffic) on switch BDC9461A-1799-4AEF-BC7D-23EAE0FD13FC (Friendly Name: HyperV_Switch). Reason - The OID failed. Status = An invalid parameter was passed to a service or function.

Hmm. So what does this mean? Well, you can tell we changed the switch name from LiveMigration_Traffic to HyperV_Switch, but that shouldn’t matter.
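As an aside, the two events above can also be pulled with PowerShell rather than by scrolling through Event Viewer. This is only a rough sketch: the four-hour look-back window is an arbitrary assumption, and I'm assuming the cluster events are logged under the Microsoft-Windows-FailoverClustering provider on the node you run it on.

```powershell
# Sketch: list recent cluster resource failures (1069) and VMQ allocation
# errors (113) from the System log on the local node.
# Assumption: a 4-hour look-back window; adjust to your maintenance window.
$filter = @{
    LogName      = 'System'
    ProviderName = 'Microsoft-Windows-FailoverClustering',
                   'Microsoft-Windows-Hyper-V-VmSwitch'
    Id           = 1069, 113
    StartTime    = (Get-Date).AddHours(-4)
}

# Get-WinEvent raises an error when nothing matches, so suppress it here.
Get-WinEvent -FilterHashtable $filter -ErrorAction SilentlyContinue |
    Sort-Object TimeCreated |
    Select-Object TimeCreated, ProviderName, Id, Message |
    Format-List
```

Sorting by time makes it easier to see which error showed up first, which is how we spotted the VmSwitch event in the first place.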

To investigate, we jumped into the Hyper-V Management Console to see what was going on. We saw many VMs listed there, but the one we were looking for was gone.

The VM wasn’t there. It vanished into thin air.

Do we have the right name? We consulted the 1069 Cluster error. Yep.

Does it still exist on the CSV? Yes.

So we looked at another VM that was failing to come online. It too was missing in Hyper-V.

What’s going on here???

Well, this isn’t really a good reason to panic. One of the things you may or may not know is that the Copy Cluster Roles Wizard copies settings and configuration by grabbing the appropriate registry data from the old cluster. During this process, it doesn’t register the virtual machines themselves with the Virtual Machine Management Service (VMMS) on the specific hosts, as we may or may not have access to the cluster storage at this point.

Remember: The Copy Cluster Roles Wizard grabs your cluster settings and configuration, but it does not copy over any data. That means the configuration files, the VHD/VHDX files, etc. have to be migrated manually. This typically means remapping the LUNs to the new cluster or otherwise copying/mirroring/migrating the data to the cluster storage you’ll be using with the new cluster. Make sense?

When we online the VMs for the first time, we then register the virtual machines with VMMS. If you visit Hyper-V Manager on the new cluster node right after running the Copy Cluster Roles Wizard and prior to bringing the VMs online in Failover Cluster Manager, you might not see any VMs, as we haven’t registered them yet. But in our case, we were seeing lots of VMs. Just not the ones that were failing.

We attempted the Copy Cluster Roles Wizard again. All green again. No errors.

At this point, I’m at a loss. We’re about to initiate our rollback plan to bring everything online and give ourselves additional time to investigate. But before then, we turn to Bing as a last-ditch effort and eventually run across this gem.

https://consultingtales.com/2013/02/11/hyper-v-2012-vm-error-after-cluster-migration/

Similar… He doesn’t note any switch errors though. Could this be the same? Running really short on time at this point, we right-clicked the VMs in Failover Cluster Manager and removed them all from the failover cluster.

We switched to the Hyper-V management console and our missing VMs are BACK!

Great! We go to start them. Fail. Just like in the blog above.

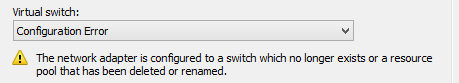

At this point, I’m asking myself, why didn’t the Copy Cluster Roles Wizard update this VM’s switch? We click on the drop-down, select our switch, and BAM! The VM comes online. We check some of the other VMs and their switch was correctly updated. What’s special about these ones?
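We did this through the GUI, but with a lot of VMs the same switch selection could probably be scripted. The following is a minimal sketch, not what we ran that night. It assumes the VMs are already visible in Hyper-V on the local host, that the new switch is named HyperV_Switch as in the event above, and that the affected adapters can be spotted by not being connected to that switch yet.

```powershell
# Sketch: reconnect legacy (emulated) NICs on this host to the new switch.
# Assumption: 'HyperV_Switch' is the virtual switch on the new cluster nodes.
$targetSwitch = 'HyperV_Switch'

# Collect legacy adapters that are not yet connected to the target switch.
# @() keeps the result an array even when nothing matches.
$legacy = @(
    Get-VM | Get-VMNetworkAdapter |
        Where-Object { $_.IsLegacy -and $_.SwitchName -ne $targetSwitch }
)

# Review what is about to change before touching anything.
$legacy | Format-Table VMName, Name, SwitchName -AutoSize

# Connect each adapter to the new switch.
$legacy | Connect-VMNetworkAdapter -SwitchName $targetSwitch
```

Get-VM only returns the VMs registered on the host where it runs, so this would need to be repeated on each node.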

Right in front of us in the settings for one of the “bad” VMs was the answer.

These VMs were using emulated NICs and not the synthetic NICs.

No idea why. Customer did not know either.

Our next migration, we tested that theory.

Beforehand, we exported the network configuration for the VMs by running the following command:

Get-VMNetworkAdapter * | export-csv C:\Migration\VMNetworks.csv

It gives us more data than we need, but we can quickly sort by the Name column for the remaining clusters and see we have over 100 VMs running what’s called “legacy” NICs. These are our emulated NICs.
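If you would rather not sort through the full export, a narrower report is possible. This is a sketch under a few assumptions: the Hyper-V PowerShell module is available on the host where you run it, the C:\Migration folder already exists, and LegacyNics.csv is just a file name I made up for illustration.

```powershell
# Sketch: export only the legacy (emulated) network adapters on this host.
# Assumption: C:\Migration exists; LegacyNics.csv is a hypothetical file name.
Get-VM | Get-VMNetworkAdapter |
    Where-Object { $_.IsLegacy } |
    Sort-Object VMName |
    Select-Object VMName, Name, SwitchName, MacAddress |
    Export-Csv -Path 'C:\Migration\LegacyNics.csv' -NoTypeInformation
```

The IsLegacy property is what separates emulated adapters from synthetic ones, so the resulting file is the list of VMs likely to hit this issue during migration.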

At time of migration, we repeated our migration process and ran into the exact same issue.

So it’s definitely the emulated “legacy” network cards.

It turns out the Copy Cluster Roles Wizard does not handle migrating between clusters with different switch names when the virtual machine also contains a legacy network card.

Our options at this point?

1. Configure the VMs to use a synthetic NIC prior to migration

2. Continue with our workaround

My customer opted for Option #2. Why? Changing from an emulated legacy network adapter to a synthetic network adapter requires you to shut down each VM and add a new network adapter to each VM. You cannot convert the existing network adapter. This means an outage and it’s not a process that is easily scriptable. Additionally, we did not know why these VMs were using the emulated legacy NICs. Therefore, my customer elected to go with Option #2 and clean these up during a future outage.

The steps are documented here: https://technet.microsoft.com/en-us/library/ee941153(v=ws.10).aspx

Aside from the Copy Cluster Roles Wizard not working correctly when migrating with legacy NICs, why else would we want to get them out of our environment?

According to https://technet.microsoft.com/en-us/library/cc770380.aspx :

“A legacy network adapter works without installing a virtual machine driver. The legacy network adapter emulates a physical network adapter, multiport DEC 21140 10/100TX 100 MB. A legacy network adapter also supports network-based installations because it includes the ability to boot to the Pre-Execution Environment (PXE boot).”

So in short, by using a legacy network adapter, you’re potentially taking a performance hit. Additionally, in Windows Server 2012 R2 and later, Generation 2 virtual machines support PXE booting with the standard synthetic network adapter.

For more on Hyper-V Failover Cluster Migrations, make sure to check out one of my earlier posts here: https://blogs.technet.com/b/askpfeplat/archive/2015/11/02/from-windows-server-2008-r2-to-windows-server-2012-r2-a-tale-of-a-successful-failover-cluster-hyper-v-migration.aspx

Thanks for reading!

Charity “keep calm and migrate on” Shelbourne